The insurance industry stands at a critical crossroads. On one hand, insurance leaders are drawn to AI’s promise of faster underwriting, claims processing, and policy pricing. On the other, they must contend with non-negotiables: ethics, fairness, and regulatory compliance.

This tension is often called the “trust paradox”. The very technology that could transform your insurance operations also threatens to erode customer trust. You’re caught between rolling out new AI use cases quickly in an increasingly competitive market and upholding the ethical standards and regulatory compliance that protect both your customers and your business.

While nearly 90% of insurance executives prioritize AI, only one in five companies use compliance-ready AI models. The real question is how to balance innovation with responsibility, and the insurers that strike that balance are the ones best positioned to thrive over the next five years.


The Ever-Expanding Role of AI in Insurance

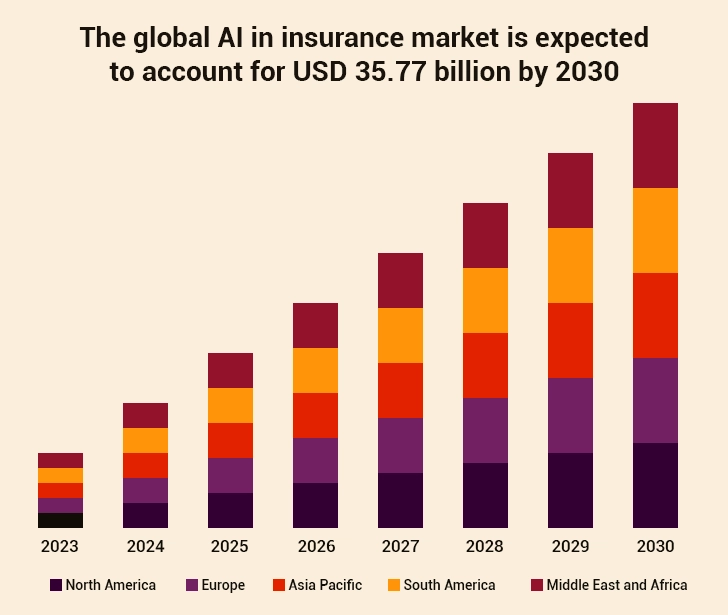

The insurance industry has clearly embraced AI. With the global AI-in-insurance market projected to reach USD 36 billion by 2030, its applications are expected to expand even further.

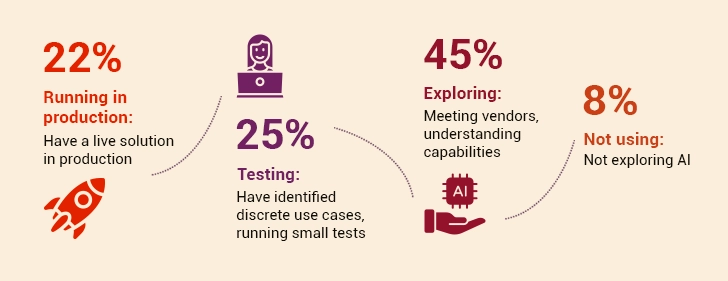

Even so, around 45% of insurers that have prioritized AI still need guidance on how to deploy it. But first things first.

Here are the most common AI implementations you’re likely seeing (or practicing):

1. Underwriting and Risk Assessment

AI systems built on machine learning (ML) algorithms draw on large datasets to assess risk profiles in depth. By analyzing everything from credit scores and driving records to IoT sensor data, they support more accurate policy pricing and coverage decisions.

2. Claims Processing

AI is especially useful in claims processing. It can assess damage from photos, detect fraud patterns and, more broadly, streamline the entire claims workflow. Computer vision is a noteworthy example: it’s used to evaluate property damage, while natural language processing (NLP) handles initial claim intake and documentation.

3. Customer Service

On the customer service front, many insurers use chatbots to handle routine inquiries. Some are autonomous agents, such as those built on Salesforce Agentforce, that respond to customers in real time. Smart insurers still rely on human agents to resolve complicated queries.

4. Fraud Detection

AI systems identify fraud patterns in claims data that manual review might miss, which helps reduce fraudulent payouts.

Generative AI tools, in particular, have shown strong results in document processing, contract analysis, and customer communications. They are especially useful for:

- Summarizing complex policy documents

- Generating personalized communications

- Drafting initial claim assessments

However, we all need a reality check from time to time.

- Is AI always able to meet your expectations?

- Can it be fully trusted in all scenarios?

- Or are we becoming too reliant on technology that doesn’t always understand human context?

This brings us to the next section: where exactly AI can miss the mark.

Where Trust Breaks: The Downside of Unsupervised AI

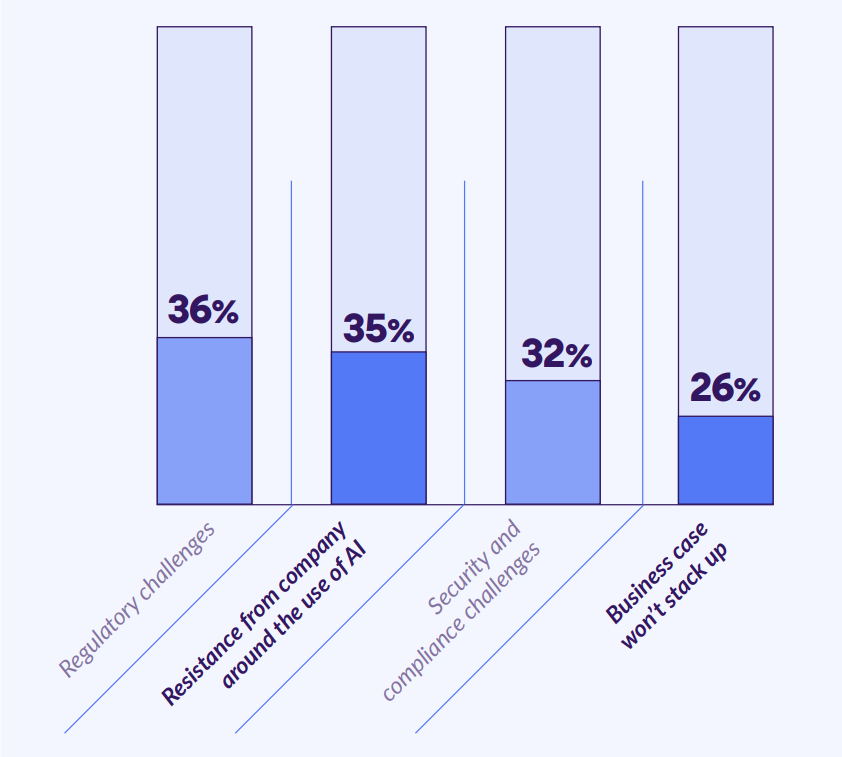

Rapid AI implementation without proper oversight opens the door to legal, ethical, and reputational risks. This is a big deal in the insurance industry: 70% of CEOs believe that the current lack of AI regulation in the insurance industry could become a barrier to their organization’s success. Here are some of the key risks:

I. AI Hallucinations in Critical Decisions

Perhaps the most concerning risk is AI generating false or fabricated information, known as “hallucinations”. In claims assessment, for example, an AI system may misinterpret the cause of damage or miscalculate repair costs. This is why unsupervised AI cannot always be trusted: such errors are likely to damage customer trust and raise serious questions about the ethical use of AI.

II. Bias in Underwriting Algorithms

AI systems learn from historical data, and that data can carry historical biases. The result can be unfair pricing for certain customer segments, or coverage decisions that track sensitive characteristics such as race or gender. Even when these factors are excluded from the model, algorithms can pick up proxy variables, such as geographic location, that lead to the same outcome.

For instance, an AI underwriting model may learn to associate certain ZIP codes with higher claim risks. If those ZIP codes predominantly house minority communities, applicants from these areas may face higher premiums or denial of coverage.
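
One rough way to check whether a feature such as ZIP code is acting as a proxy is to measure how much better it predicts a protected attribute than a naive guess. The Python sketch below assumes you have an audit dataset pairing each applicant’s ZIP code with a group label; the function name `proxy_strength` and the toy data are hypothetical, and the majority-vote comparison is a simple illustration rather than a regulatory test.

```python
from collections import Counter, defaultdict


def proxy_strength(records):
    """Compare how well a feature predicts a protected group vs. a naive guess.

    records: list of (feature_value, group) pairs from a hypothetical audit set.
    Returns (baseline_accuracy, proxy_accuracy):
      - baseline: always guess the overall most common group
      - proxy: guess the most common group within each feature value
    A large gap suggests the feature is acting as a proxy for the group.
    """
    overall = Counter(group for _, group in records)
    baseline = overall.most_common(1)[0][1] / len(records)

    by_value = defaultdict(Counter)
    for value, group in records:
        by_value[value][group] += 1
    # Records correctly guessed by the per-value majority group.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / len(records)


# Toy audit data: (ZIP code, group label). Entirely hypothetical.
audit = [
    ("10001", "A"), ("10001", "A"), ("10001", "A"), ("10001", "B"),
    ("20002", "B"), ("20002", "B"), ("20002", "B"), ("20002", "A"),
]
baseline, proxy = proxy_strength(audit)
print(f"baseline={baseline:.2f}, proxy={proxy:.2f}")
```

In this toy data, knowing the ZIP code alone raises accuracy from 50% to 75%, a sign that a model could reconstruct group membership from geography. Because the estimate is computed in-sample, it overstates proxy strength when a feature has many rare values; a held-out evaluation is more reliable in practice.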

III. Lack of Transparency in Pricing Decisions

Many modern AI systems operate as “black boxes”: their decision-making process is opaque. When customers can’t understand why they’re being offered a specific premium, they start questioning the factors behind the decision, and that directly erodes their trust in the insurer. Internally, the same lack of transparency makes it harder to identify and correct biases.

IV. Data Security, Legal, and Reputational Concerns

Poor data governance can lead to privacy breaches, unauthorized data sharing, or misuse of sensitive customer information. The irony is that the cost of these consequences can far exceed the savings from AI implementation.

Navigating the Compliance Landscape: What You Must Know

Regulations are changing constantly, often faster than insurers can keep up. That makes understanding a few key frameworks essential:

1. NAIC Model Bulletin on Use of AI

24 states have adopted the National Association of Insurance Commissioners (NAIC) Model Bulletin on the use of AI systems by insurers. Here’s what the bulletin says:

- Insurers must notify consumers when AI systems are in use.

- Insurers should develop, implement, and maintain a written program for responsible AI adoption.

- Decisions impacting consumers that are made or supported by advanced analytical and computational technologies, including AI, must comply with all applicable insurance laws and regulations, including unfair trade practices.

2. State & Federal Regulations

Most states that regulate AI address common concerns by imposing guardrails designed to minimize inaccuracies, unfair discrimination, data vulnerabilities, lack of transparency, and the risks of relying on third-party vendors. Because each state may have its own requirements for AI governance, testing, and reporting, it’s important to track and follow the rules in every state where you operate.

While insurance regulation in the U.S. is largely driven by state authorities, federal interest in AI governance is growing. The NAIC urged the U.S. Senate to reject a proposed 10-year federal moratorium on state AI regulation, warning that it could curtail states’ ability to oversee commonplace tools used in underwriting, claims processing, and pricing. The NAIC emphasized that an overly broad federal freeze could conflict with state authority under the McCarran-Ferguson Act, create regulatory uncertainty, and potentially weaken consumer protection frameworks.

3. International Frameworks

If you operate globally, you must also consider regulations like the EU Artificial Intelligence (AI) Act. It takes a risk-based approach: high-risk systems, which include AI used for risk assessment and pricing in life and health insurance, are subject to strict transparency, documentation, and human-in-the-loop requirements. Similar trends appear in other jurisdictions, including the UK’s AI Safety Institute proposals, Canada’s Directive on Automated Decision-Making, and Singapore’s Model AI Governance Framework. To operate globally, insurers need AI systems that are as compliant across jurisdictions as they are technically advanced.

On a broader level, we’ve observed that regulators focus on three primary areas:

| Explainability | Fairness | Auditability |
| --- | --- | --- |
| You must be able to explain how AI systems reach decisions, particularly those affecting customer outcomes. | Your AI systems must not discriminate against protected classes or create unfair market advantages. | You must maintain comprehensive documentation of AI system development, testing, and deployment. |

How to Build a Responsible AI Framework?

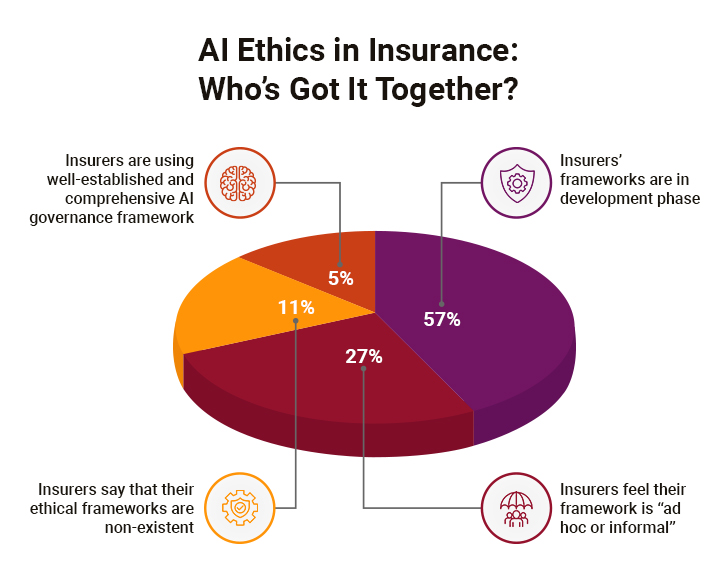

Creating a responsible AI framework means embedding ethical considerations into every stage of your AI and insurance lifecycle. According to one report, 84% of health insurers use AI in their organizations. But do they give enough weight to AI governance? Research suggests that implementing ethical frameworks remains a work in progress for many insurers.

Now, let’s see how you can build a strong responsible AI framework.

I. Get Your Core Principles Right

Your AI governance should be built on three fundamental principles.

- Fairness: AI systems must treat all customers equitably and avoid discriminatory outcomes. In our experience, many insurers that test their AI systems still find bias, even after they believed it had been fixed. Achieving true fairness is an ongoing effort rather than a one-time fix, and it should never be overlooked.

- Accountability: Define ownership of AI-related decisions and outcomes. We have seen that insurers with a dedicated AI ethics board tend to see fewer bias-related incidents.

- Transparency: Stakeholders must understand how AI systems work and how those systems affect them. Organizations often try to apply AI almost everywhere without understanding its full capabilities and limitations, which is exactly why transparent AI implementation is so critical.

II. Keep Humans in the Loop

Design processes in which humans and AI work together, with humans retaining control over critical decisions. In complex underwriting decisions, AI systems that recommend actions but require human approval tend to produce better results than fully automated ones. A well-defined escalation process also plays a major role in improving customer experience.
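
To make the escalation idea concrete, here is a minimal Python sketch of a routing rule that lets the AI recommend but requires human approval for adverse or low-confidence outcomes. The `Recommendation` structure, the `route` function, and the 0.90 confidence threshold are all hypothetical; real thresholds would come from your own governance program and validation data.

```python
from dataclasses import dataclass

# Hypothetical policy threshold; set yours through validation and governance review.
MIN_AUTO_CONFIDENCE = 0.90


@dataclass
class Recommendation:
    """An AI recommendation for one case (hypothetical structure)."""
    case_id: str
    action: str        # e.g. "approve", "deny"
    confidence: float  # model confidence in [0, 1]
    adverse: bool      # True if the action harms the customer (denial, surcharge)


def route(rec: Recommendation) -> str:
    """Return "human_review" or "auto_apply" for a recommendation."""
    if rec.adverse:
        # Adverse outcomes always go to a human, whatever the confidence.
        return "human_review"
    if rec.confidence < MIN_AUTO_CONFIDENCE:
        # Uncertain favorable outcomes are escalated as well.
        return "human_review"
    return "auto_apply"


cases = [
    Recommendation("C-1", "approve", 0.97, adverse=False),
    Recommendation("C-2", "approve", 0.72, adverse=False),
    Recommendation("C-3", "deny", 0.99, adverse=True),
]
for rec in cases:
    print(rec.case_id, route(rec))
```

Routing every adverse outcome to a person, regardless of model confidence, keeps humans in control of the decisions customers are most likely to dispute.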

III. Invest in a Comprehensive AI Governance Structure

Establish cross-functional teams that include multiple stakeholders. Leading insurance companies typically structure their AI governance as follows:

- AI Ethics Board: Senior executives who set AI strategy and policy (recommended size: 5-7 members including CEO, CTO, Chief Risk Officer, and Chief Compliance Officer)

- Technical Teams: Data scientists and engineers who build and maintain AI systems (average ratio: 1 AI governance specialist per 10 technical staff)

- Legal and Compliance: Attorneys who ensure regulatory compliance (83% of insurers have dedicated AI compliance specialists as of 2025)

- Business Units: Stakeholders who understand operational impacts (recommended representation from all major business lines)

- Risk Management: Professionals who assess and mitigate AI-related risks (average budget allocation: 12-15% of total AI investment)

IV. Manage Third-Party Vendors

If you use third-party AI solutions, establish rigorous vendor management processes. AI vendor relationships have become significantly more complex, and here’s how to manage them:

- Due Diligence Requirements: Conduct a comprehensive vendor AI governance assessment, including bias testing protocols, explainability capabilities, and regulatory compliance documentation.

- Contractual Obligations: Spell out specific requirements for explainability and bias testing. Some insurers now require monthly bias testing reports from their AI vendors.

- Regular Audits: Carry out quarterly technical audits of vendor AI systems, with an annual comprehensive review which includes bias testing, performance validation, and regulatory compliance checks.

- Liability Allocation: Make sure your vendor takes ownership of AI-related issues, and that your contract states this explicitly. This point is non-negotiable.

V. Adhere Strictly to Regulatory Guidelines

Your AI governance framework must align with regulatory requirements. The European Union’s AI Act, which entered into force in August 2024, classifies AI systems by risk level and imposes stringent requirements on high-risk systems, making global compliance increasingly complex.

AI systems used for credit scoring, and for risk assessment and pricing of individuals in life and health insurance, are explicitly classified as “high-risk” under the Act. Other critical functions, such as underwriting in other lines, claims assessment, and fraud detection, should be reviewed carefully against the Act’s criteria. High-risk systems must meet rigorous requirements related to transparency, human oversight, cybersecurity, data quality, bias mitigation, and explainability.

Now, let’s see how you can strike the right balance between effectively using AI and being responsible.


So, How Do You Strike the Right Balance?

A well-thought-out AI implementation can determine long-term success for insurers. Here are three strategies that’ll help you leverage AI while maintaining ethical standards and regulatory compliance.

Strategy #1: Use-Case Prioritization

The foundation of responsible AI implementation lies in strategic use-case prioritization. Successful insurance leaders focus on AI applications that deliver measurable value.

I. Segment Use-Cases Based on Risk

Insurance leaders should categorize AI use cases into three distinct risk tiers. High-risk applications directly affect customer coverage decisions, such as automated underwriting for complex policies or claims denial recommendations; these require extensive human oversight and regulatory review. Medium-risk applications include customer service chatbots and document processing, where errors cause inconvenience rather than financial harm. Low-risk applications include internal operational analytics and fraud detection support systems.
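
The three tiers can be encoded as a simple lookup that attaches minimum controls to each use case. In this Python sketch, the use-case names and the control lists are hypothetical examples based on the tiers above, not a prescribed standard.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"      # directly affects coverage or claim outcomes
    MEDIUM = "medium"  # errors inconvenience customers but cause no financial harm
    LOW = "low"        # internal analytics and decision-support tools


# Hypothetical classification of the example use cases from the text.
USE_CASE_TIERS = {
    "complex_policy_underwriting": RiskTier.HIGH,
    "claims_denial_recommendation": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.MEDIUM,
    "document_processing": RiskTier.MEDIUM,
    "operational_analytics": RiskTier.LOW,
    "fraud_detection_support": RiskTier.LOW,
}

# Hypothetical minimum controls per tier.
CONTROLS = {
    RiskTier.HIGH: ["human approval of each decision", "regulatory review", "bias testing"],
    RiskTier.MEDIUM: ["human escalation path", "periodic sample review"],
    RiskTier.LOW: ["standard performance monitoring"],
}


def required_controls(use_case: str) -> list[str]:
    """Look up controls for a use case; unclassified ones default to HIGH."""
    tier = USE_CASE_TIERS.get(use_case, RiskTier.HIGH)
    return CONTROLS[tier]


print(required_controls("customer_service_chatbot"))
print(required_controls("new_pricing_model"))  # not yet classified -> high-risk controls
```

Defaulting unclassified use cases to the high-risk tier is a deliberate choice: a new AI application gets the strictest oversight until someone reviews and classifies it.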

II. Evaluate the Value of AI Implementation

Each AI implementation should begin with a critical evaluation across four key dimensions: business impact, technical feasibility, regulatory complexity, and customer trust implications. Organizations that use AI effectively can significantly boost productivity, but that potential must be weighed against implementation costs and compliance requirements. Consider rolling out implementations in phases to manage these trade-offs.
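
A weighted score is one simple way to compare candidate use cases across these four dimensions. The Python sketch below assumes each dimension is rated from 1 to 5, with regulatory complexity and customer trust rated so that a higher number means less risk; the weights and the sample ratings are hypothetical and should reflect your own priorities.

```python
# Hypothetical weights for the four dimensions (they sum to 1.0).
WEIGHTS = {
    "business_impact": 0.35,
    "technical_feasibility": 0.25,
    "regulatory_complexity": 0.20,  # rated so that 5 = simplest to comply with
    "customer_trust": 0.20,         # rated so that 5 = lowest risk to customer trust
}


def priority_score(ratings: dict[str, float]) -> float:
    """Weighted average of 1-5 ratings across the four dimensions."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(weight * ratings[dim] for dim, weight in WEIGHTS.items())


# Hypothetical ratings for two candidate use cases.
candidates = {
    "document_processing": {"business_impact": 3, "technical_feasibility": 5,
                            "regulatory_complexity": 4, "customer_trust": 4},
    "claims_denial_recommendation": {"business_impact": 5, "technical_feasibility": 3,
                                     "regulatory_complexity": 1, "customer_trust": 1},
}
for name, ratings in sorted(candidates.items(),
                            key=lambda kv: priority_score(kv[1]), reverse=True):
    print(f"{name}: {priority_score(ratings):.2f}")
```

The score is a starting point for discussion, not a verdict: a high-risk use case that scores well still needs the oversight its risk tier demands.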

Strategy #2: Bias Mitigation Through Comprehensive Testing

Because AI learns from historical data, it risks amplifying existing biases. Comprehensive scenario testing is a critical defense against this. Develop testing protocols that validate AI model behavior across diverse demographic groups, geographic regions, and economic conditions. Use bias detection techniques, such as fairness audits and adversarial testing, to uncover hidden biases in AI systems. Bring data scientists, legal experts, compliance officers, and customer advocates together to evaluate the results, and make sure your testing scenarios align with current regulations.
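
A common starting point for a fairness audit is to compare outcome rates across groups. The Python sketch below computes each group’s approval rate relative to the best-treated group and flags ratios below 0.8, a threshold borrowed from the “four-fifths” rule of thumb in U.S. employment-selection guidance. That threshold is an assumption here: it’s a screening heuristic, not a legal standard for insurance, and the function names and sample decisions are hypothetical.

```python
def approval_rates(decisions):
    """decisions: list of (group, approved) pairs. Returns approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, threshold=0.8):
    """Compare each group's approval rate with the highest group's rate.

    Returns {group: (ratio, flagged)}; flagged is True when ratio < threshold.
    threshold=0.8 mirrors the "four-fifths" rule of thumb (an assumption here).
    """
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no approvals in any group; ratios are undefined")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


# Hypothetical audit sample: 80/100 approvals for group A, 55/100 for group B.
decisions = ([("A", True)] * 80 + [("A", False)] * 20
             + [("B", True)] * 55 + [("B", False)] * 45)
for group, (ratio, flagged) in adverse_impact_ratios(decisions).items():
    print(f"group {group}: ratio={ratio:.2f}, flagged={flagged}")
```

Here group B’s approval rate is about 69% of group A’s, so it’s flagged. A flag is a prompt for investigation rather than proof of bias, since legitimate risk factors can also produce different rates; the cross-functional reviewers described above would then judge whether the gap is justified.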

Strategy #3: Transparent AI Model Training and Deployment

Transparency in AI model development and deployment creates accountability while building customer trust. Design AI systems with inherent explainability from the ground up. Prioritize architectures that provide clear reasoning paths. Maintain detailed records of model training data, feature selection rationales, validation procedures, and deployment decisions. Consider including data lineage, model version control, and performance monitoring results in your reports.
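
One way to build in explainability from the start is an additive model, where each factor’s contribution to the price is exact and can be reported as a “reason code”. The Python sketch below uses a hypothetical base premium, rating factors, and weights purely for illustration; it is not a real rating plan.

```python
# Hypothetical additive rating model: base premium plus per-factor adjustments.
BASE_PREMIUM = 600.0
# factor -> (customer-facing description, adjustment per unit). Illustrative only.
FACTORS = {
    "at_fault_claims": ("At-fault claims in the last 3 years", 150.0),
    "annual_mileage_k": ("Annual mileage (thousands of miles)", 8.0),
    "years_licensed": ("Years licensed", -10.0),
}


def quote_with_reasons(applicant: dict[str, float], top_n: int = 2):
    """Return (premium, reasons), listing the factors that moved the price most."""
    contributions = {
        name: weight * applicant[name] for name, (_, weight) in FACTORS.items()
    }
    premium = BASE_PREMIUM + sum(contributions.values())
    # Rank by absolute impact so large discounts are explained too.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [
        f"{FACTORS[name][0]}: {'+' if amount >= 0 else '-'}${abs(amount):.2f}"
        for name, amount in ranked[:top_n]
    ]
    return premium, reasons


premium, reasons = quote_with_reasons(
    {"at_fault_claims": 1, "annual_mileage_k": 12, "years_licensed": 5}
)
print(f"Premium: ${premium:.2f}")
for reason in reasons:
    print(" -", reason)
```

For more complex models, contributions have to be estimated after the fact with post-hoc attribution methods such as SHAP; an additive model makes the explanation part of the model itself, at some cost in flexibility.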

Create customer-facing documents to describe how AI influences their insurance experience. Prepare technical documentation for regulators that shows compliance with fairness and accuracy requirements. Further, train your customer service representatives to explain AI-driven decisions in simple terms.

Most importantly, monitor your AI’s performance regularly. Track key metrics such as prediction accuracy, fairness indicators, and customer satisfaction scores. Regular validation confirms that models continue to perform as intended and reveals when retraining or recalibration is needed.
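
The text doesn’t prescribe a specific drift metric; one widely used option is the Population Stability Index (PSI), which compares the distribution of model inputs or scores today against the distribution at training time. In the Python sketch below, the bin proportions are made up, and the 0.10 and 0.25 cut-offs are common industry rules of thumb rather than regulatory thresholds.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    expected, actual: bin proportions (each summing to about 1.0), e.g. the share
    of applicants per score band at training time vs. in the latest month.
    eps guards against log(0) when a bin is empty.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value):
    """Map a PSI value to a status using common rule-of-thumb cut-offs."""
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "moderate shift: investigate"
    return "significant shift: consider retraining"


training_bins = [0.25, 0.25, 0.25, 0.25]  # hypothetical score-band shares at training
current_bins = [0.10, 0.20, 0.30, 0.40]   # hypothetical shares this month
value = psi(training_bins, current_bins)
print(f"PSI={value:.3f} -> {drift_status(value)}")
```

A PSI check covers only distribution drift; it complements, rather than replaces, the accuracy, fairness, and customer satisfaction metrics listed above.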

Ending Notes

As we have explored, innovation and ethical responsibility must go hand in hand. As an insurer, you need to commit fully to ethical AI implementation through strategic use-case prioritization, comprehensive bias testing, and transparent model deployment. Insurers that invest in responsible AI are best positioned to resolve the trust paradox and emerge as trusted insurance partners in the years to come. Working with a partner that has deep expertise in AI implementation and understands the insurance landscape can accelerate this journey and help you start on the right foot.