Workforce shortages plague the insurance industry. Today, most insurance executives see these shortages as a major roadblock to managing claims efficiently. This staffing challenge, combined with rising claims complexity, has pushed insurers toward artificial intelligence solutions faster than many anticipated.

There’s no doubt that AI brings impressive speed to claims processing, leading to more efficient operations. Yet, this rapid shift toward algorithm-driven claims management creates a rift between efficiency and fairness. When machines determine how much compensation a policyholder receives, transparency becomes vital.

Claims automation presents both opportunities and responsibilities. Automated systems outpace human adjusters in analyzing thousands of data points. However, the “black box” nature of many algorithms raises concerns. Who ensures fairness when decisions are made behind a veil of code? How can accountability exist without visibility? These questions matter deeply for regulatory compliance and customer trust.

This article explains how insurers can implement ethical AI in claims management without sacrificing efficiency. It also covers the technical frameworks and practical approaches that make AI systems transparent and explainable.

Table of Contents

The Shift Toward AI in Claims Management Systems

Risks of Opaque Systems in High-Stakes Environments

What Transparent and Explainable Claims AI Looks Like

Regulatory and Compliance Frameworks in 2025

Designing for AI Ethics in Claims: A Technical View

Building Trust with Policyholders and Regulators

Summing Up: Claims Automation Without Compromise

The Shift Toward AI in Claims Management Systems

Insurance claims processing has always demanded extensive manual work. Traditionally, insurers needed people at every step, from gathering customer documents to evaluating claims and sending payments. This landscape is changing fast as insurers embrace AI across their claims ecosystems.

The insurance sector has reached a turning point. Insurance companies are implementing AI solutions to modernize their operations. This change comes from growing challenges: workforce shortages, increasingly complex claims, and customers who expect quick service from their insurance provider.

AI makes claims management easier through several technologies:

- Automated Document Processing: Optical character recognition and natural language processing technologies automatically extract relevant information from claim submissions. These often include documents like medical reports, police reports, and witness statements.

- Enhanced Fraud Detection: AI algorithms analyze claims data to identify suspicious patterns and anomalies that might indicate fraudulent activity, which could save the industry billions.

- Accelerated Claims Assessment: Computer vision algorithms analyze photos of damaged vehicles or properties, compare them with thousands of similar cases, and estimate damage severity, supporting quick decisions about claim validity.

Real-life examples show remarkable results. Compensa Poland has deployed an AI-based self-service claims system that boosts cost efficiency by 73% while improving customer satisfaction. Similarly, CCC Intelligent Solutions uses AI to analyze accident photos and assess the severity of impact.

The trajectory looks clear. AI is expected to handle most claims triage within the next few years, and automation will take over many routine claims tasks, making the process more efficient. IoT sensors and data-capture technologies will increasingly detect and report accidents, replacing paperwork-heavy methods.

Yet challenges remain. Data quality issues plague many implementations. Legacy systems resist integration. Regulatory concerns also loom large. In addition, many claims professionals admit they don’t feel confident using modern AI tools.

With AI, insurance companies gain more than just better operations. AI helps human adjusters focus on complex claims where emotional intelligence and personal judgment truly matter. In the future, AI will free up teams to tackle creative problem-solving, meaningful interactions, and other high-value work that only humans can do.

Risks of Opaque Systems in High-Stakes Environments

As AI-powered systems become deeply embedded in claims operations, many of them operate as “black boxes” that reach decisions through processes no one can easily inspect. This opacity creates substantial risks when every decision affects a policyholder’s future.

Chip Merlin, founder of Merlin Law Group, puts it well: “The introduction of AI into this equation, if not properly monitored and regulated, could accelerate a trend where technology is used not to enhance customer service but to reduce claim approvals under the guise of efficiency.”

1. Black-Box Algorithms and Lack of Explainability

In these systems, the internal workings stay hidden, and even the people who deploy them may struggle to explain how a given decision was reached. This matters tremendously in claims management, where AI rulings can decide whether someone can repair their home or access urgent medical care.

Modern AI algorithms work in complex ways. Machine learning models examine large volumes of data, much of it unstructured, to find patterns and then classify or predict outcomes based on those patterns. These models often contain multiple layers of calculations and hidden correlations, which makes it difficult to see what is driving their decisions.

When AI denies a claim or raises a premium, customers naturally want to know why. However, many systems simply cannot provide this explanation. Third-party vendors often treat their algorithms as proprietary, further muddying the waters. Even insurance companies frequently don’t understand how their AI makes certain choices.

A recent CEO Outlook Survey shows that 57% of business leaders worry about AI’s ethical challenges. These concerns make perfect sense. Claims decisions directly affect people’s financial security, access to healthcare, and ability to recover from disasters.

2. Ethical Dilemma: Speed vs. Fairness

The rush toward AI creates a fundamental conflict: speed on one side and fairness on the other. Sophisticated AI systems process claims rapidly. However, their hidden decision-making raises serious questions about equity.

Without transparency, AI algorithms may perpetuate biases baked into historical data. This can lead to unfair outcomes in pricing, coverage decisions, and claims processing. If an insurer’s historical data favors certain groups, AI algorithms can amplify these patterns without anyone noticing.
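One simple way to catch this kind of amplification is to compare outcomes across groups on a regular schedule. The sketch below is a minimal illustration, assuming decisions are available as (group, approved) pairs; the group labels, the sample data, and the 0.8 threshold (borrowed from the “four-fifths” screening heuristic used in US employment analysis) are all hypothetical choices, not a regulatory standard for insurance.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Share of approved claims per group.

    `decisions` is an iterable of (group, approved) pairs; `group` is a
    hypothetical segment label (e.g. a region) and `approved` is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}

def disparity_flags(rates, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the
    best-treated group's rate (a four-fifths-style screening heuristic)."""
    best = max(rates.values())
    if best == 0:
        return {g: False for g in rates}  # nobody approved: ratio undefined
    return {g: r / best < threshold for g, r in rates.items()}

sample = (
    [("region_a", True)] * 90 + [("region_a", False)] * 10
    + [("region_b", True)] * 60 + [("region_b", False)] * 40
)
rates = approval_rates(sample)
print(rates)                   # {'region_a': 0.9, 'region_b': 0.6}
print(disparity_flags(rates))  # {'region_a': False, 'region_b': True}
```

A flag is a prompt for investigation, not proof of unfairness: differences can have legitimate causes, which is why a human review step should follow.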

Balancing speed and fairness remains a critical challenge. Around 44% of consumers don’t trust AI-based damage assessments as much as those done by human inspectors. This highlights a trust gap that needs to be closed.

Without careful planning and oversight, claims automation might violate policyholders’ rights. Building transparent, explainable AI systems, therefore, isn’t just a technical challenge—it’s an ethical necessity.

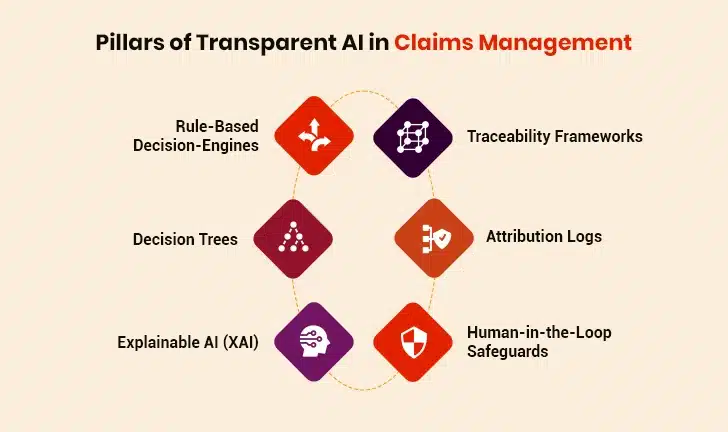

What Transparent and Explainable Claims AI Looks Like

AI in claims management needs specific approaches that turn complex algorithms into systems people can understand. Insurance companies must build AI systems that work efficiently but remain accountable. This makes transparency more than just a box to check.

1. Interpretable Models: Rule-Based AI and Decision Trees

Rule-based decision engines are built for transparency. These engines evaluate claim data against a set of explicit, predefined rules and then determine the next steps. Because every decision is tied to clear logic, anyone can trace how and why a conclusion was reached.
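To make the traceability point concrete, here is a minimal rule-engine sketch. The rule IDs, the claim fields, and the outcomes (“deny”, “pend”, “refer”) are hypothetical; the point is that every evaluated rule is recorded, so the trace itself explains the outcome.

```python
from dataclasses import dataclass, field

@dataclass
class Claim:
    amount: float
    policy_active: bool
    has_proof_of_loss: bool
    coverage_limit: float

@dataclass
class Decision:
    outcome: str
    trace: list = field(default_factory=list)  # (rule id, "pass"/"fail") pairs

# Hypothetical rules: (rule id, check that must hold, outcome if it fails)
RULES = [
    ("R1_POLICY_ACTIVE", lambda c: c.policy_active, "deny"),
    ("R2_PROOF_OF_LOSS", lambda c: c.has_proof_of_loss, "pend"),
    ("R3_WITHIN_LIMIT", lambda c: c.amount <= c.coverage_limit, "refer"),
]

def evaluate(claim: Claim) -> Decision:
    decision = Decision(outcome="approve")
    for rule_id, check, fail_outcome in RULES:
        passed = check(claim)
        decision.trace.append((rule_id, "pass" if passed else "fail"))
        if not passed:
            decision.outcome = fail_outcome
            break  # the first failing rule determines the outcome
    return decision

d = evaluate(Claim(amount=4_000, policy_active=True,
                   has_proof_of_loss=False, coverage_limit=10_000))
print(d.outcome)  # pend
print(d.trace)    # [('R1_POLICY_ACTIVE', 'pass'), ('R2_PROOF_OF_LOSS', 'fail')]
```

Stopping at the first failing rule keeps explanations short; an engine that evaluates every rule would instead report all unmet conditions at once.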

Decision trees offer another straightforward approach, creating visual pathways where each branch represents a decision that’s based on specific factors. The beauty of these models lies in their simplicity: they resemble flowcharts anyone can easily follow.
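The “flowchart” quality of a decision tree is easy to show with a tiny hand-built tree that records each branch it takes. The features, thresholds, and leaf labels below are hypothetical; a trained tree from a machine learning library could be walked the same way.

```python
# A tiny hand-built tree; features, thresholds, and labels are hypothetical.
TREE = {
    "feature": "damage_estimate", "threshold": 5000,
    "left": {"leaf": "fast_track"},
    "right": {
        "feature": "prior_claims", "threshold": 2,
        "left": {"leaf": "standard_review"},
        "right": {"leaf": "senior_adjuster"},
    },
}

def classify(node, claim):
    """Walk the tree; return (leaf label, list of branch conditions taken)."""
    path = []
    while "leaf" not in node:
        name, limit = node["feature"], node["threshold"]
        value = claim[name]
        if value <= limit:
            path.append(f"{name} = {value} <= {limit}")
            node = node["left"]
        else:
            path.append(f"{name} = {value} > {limit}")
            node = node["right"]
    return node["leaf"], path

label, path = classify(TREE, {"damage_estimate": 8200, "prior_claims": 1})
print(label)  # standard_review
print(path)   # ['damage_estimate = 8200 > 5000', 'prior_claims = 1 <= 2']
```

The returned path is exactly the explanation a reviewer or policyholder would need: each condition that routed the claim.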

Model interpretability lets stakeholders see how an AI model makes its predictions. It shows which variables matter and how they affect outcomes.
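For a linear (logistic) scoring model, “which variables matter and how” can be read off directly: each feature adds weight × value to the log-odds. The sketch below assumes such a model; the weights, bias, and feature names are hypothetical. For non-linear models, tools such as SHAP approximate a similar per-feature breakdown.

```python
import math

# Hypothetical logistic model; weights and features are illustrative only.
WEIGHTS = {"days_to_report": 0.04, "prior_claims": 0.5, "amount_vs_limit": 1.2}
BIAS = -3.0

def explain(features):
    """Return the model score and each feature's additive contribution
    to the log-odds, sorted by absolute impact."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    score = 1 / (1 + math.exp(-logit))  # logistic function
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

score, ranked = explain({"days_to_report": 30, "prior_claims": 3, "amount_vs_limit": 0.9})
print(round(score, 3))
print([name for name, _ in ranked])  # ['prior_claims', 'days_to_report', 'amount_vs_limit']
```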

2. Use of LLMs and Traceability Frameworks

Explainable AI (XAI) involves processes and methods that aim to explain, in simple terms, how an AI model makes decisions. This way, end-users (e.g., policyholders) can trust and verify its results.

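Large Language Models can bring another layer of clarity by extracting and highlighting the most important information in insurance claims, such as dates, damage descriptions, or policy terms. Their attention mechanisms can also indicate which parts of a document the model focused on, although attention weights alone are not a reliable explanation of a decision. To keep things transparent, many AI systems use: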

- Traceability frameworks that track how AI decisions are made

- Model cards that explain what the AI can or can’t do

- Attribution logs that record the exact reasons behind decisions (e.g., claim denied due to missing proof of loss)
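An attribution log entry can be as simple as one structured record per decision. The field names below are illustrative rather than a standard schema. Hashing the inputs lets an auditor later confirm which data the decision used without copying personal details into the log itself.

```python
import hashlib
import json
from datetime import datetime, timezone

def attribution_record(claim_id, outcome, reasons, model_version, inputs):
    """Build one JSON-serializable attribution entry for a claim decision.
    All field names are hypothetical."""
    payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "claim_id": claim_id,
        "outcome": outcome,
        "reasons": reasons,                      # machine-readable reason codes
        "model_version": model_version,          # which model made the call
        "input_sha256": hashlib.sha256(payload).hexdigest(),
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }

entry = attribution_record(
    claim_id="CLM-1042",
    outcome="denied",
    reasons=["MISSING_PROOF_OF_LOSS"],
    model_version="claims-triage-2.3.1",
    inputs={"amount": 4000, "proof_of_loss": None},
)
print(json.dumps(entry, indent=2))
```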


3. Human-in-the-Loop Safeguards

Human-in-the-loop (HITL) systems provide essential oversight in claims automation. They place human decision-makers at strategic points in AI workflows. For this approach to work well, companies must:

- Define clearly who provides oversight and what skills they need

- Build feedback loops so human corrections improve the AI over time

- Create guardrails against human bias, since people bring their own biases too

Having human reviewers validate AI-generated outputs keeps results fast and accurate while building trust. In fraud detection, for example, AI spots suspicious patterns while humans make final decisions based on context that computers might miss.
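A common way to place humans at a “strategic point” is a confidence gate: outputs below a threshold go to a review queue, and every human decision is kept as feedback. The sketch below assumes that design; the class name and the 0.85 threshold are hypothetical, and an insurer could set the gate so that every fraud flag is reviewed regardless of confidence.

```python
from dataclasses import dataclass, field

@dataclass
class HitlRouter:
    """Send low-confidence AI outputs to a human reviewer and keep every
    human decision as feedback for retraining (hypothetical design)."""
    threshold: float = 0.85
    review_queue: list = field(default_factory=list)
    feedback: list = field(default_factory=list)

    def route(self, claim_id: str, ai_label: str, confidence: float) -> str:
        if confidence >= self.threshold:
            return ai_label  # applied automatically (and still audit-logged)
        self.review_queue.append((claim_id, ai_label, confidence))
        return "pending_human_review"

    def record_review(self, claim_id: str, ai_label: str, human_label: str) -> None:
        # The human label is final; disagreements are the most useful training signal.
        self.review_queue = [item for item in self.review_queue if item[0] != claim_id]
        self.feedback.append({"claim_id": claim_id, "ai": ai_label,
                              "human": human_label, "agreed": ai_label == human_label})

router = HitlRouter()
print(router.route("CLM-1", "suspected_fraud", 0.62))  # pending_human_review
router.record_review("CLM-1", "suspected_fraud", "legitimate")
print(router.feedback[0]["agreed"])  # False
```

To guard against reviewer bias as well, a team could periodically sample reviewed cases for a second, independent check.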

Regulatory and Compliance Frameworks in 2025

The rules governing AI in claims management shift constantly. Today, insurance companies must navigate a web of regulations that differ by region but share the core principles of transparency, fairness, and accountability.

1. EU AI Act, NAIC Guidelines, and Emerging Norms in Asia-Pacific

Today, American insurers face growing oversight through the National Association of Insurance Commissioners (NAIC) Model Bulletin on the Use of Artificial Intelligence Systems by Insurers, which individual states can adopt. The bulletin sets clear expectations for insurers that use AI systems. In adopting states, companies are expected to develop, implement, and maintain a written AI program that covers:

- Robust governance frameworks

- Risk management controls

- Internal audit and oversight mechanisms

The European Union takes a more prescriptive approach with its AI Act. Unlike the American model, the EU classifies AI systems by risk level. This matters deeply for insurers, since AI systems used for risk assessment and pricing in life and health insurance fall squarely into the “high-risk” category. The stakes couldn’t be higher, as violations could cost companies millions.

Asia-Pacific presents a fragmented regulatory landscape. China has enacted specific AI regulations, while Singapore and Japan prefer voluntary frameworks and guidelines. By 2025, however, several APAC jurisdictions were moving toward risk-based approaches that resemble the EU model, which may bring greater global consistency.

2. How Modern Claims Software Vendors Are Adapting

Claims technology providers have adapted to these regulatory changes. They’ve updated their contracts and technical systems. Vendor agreements have evolved significantly, moving away from pushing compliance burdens onto customers toward shared accountability models.

Today’s claims platforms incorporate built-in compliance tools such as:

- Traceability frameworks that document every decision path

- Automated compliance monitoring that flags potential violations

- Real-time regulatory tracking that updates as laws change
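Automated compliance monitoring often boils down to running policy checks over decision records. The sketch below is illustrative only: the three checks, the 50,000 threshold, and the record fields are hypothetical internal policies, not requirements taken from any specific regulation.

```python
# Hypothetical internal policies expressed as checks on decision records.
CHECKS = {
    "adverse_decision_without_reason":
        lambda r: r["outcome"] in {"denied", "reduced"} and not r.get("reasons"),
    "missing_model_version":
        lambda r: not r.get("model_version"),
    "high_value_without_human_review":
        lambda r: r.get("amount", 0) > 50_000 and not r.get("reviewed_by"),
}

def compliance_flags(records):
    """Yield (claim_id, check name) for every record that fails a check."""
    for r in records:
        for name, violated in CHECKS.items():
            if violated(r):
                yield r["claim_id"], name

records = [
    {"claim_id": "CLM-1", "outcome": "denied", "reasons": [],
     "model_version": "2.3.1", "amount": 900},
    {"claim_id": "CLM-2", "outcome": "approved", "model_version": "2.3.1",
     "amount": 80_000, "reviewed_by": "adj_7"},
    {"claim_id": "CLM-3", "outcome": "approved", "model_version": "",
     "amount": 60_000},
]
print(list(compliance_flags(records)))
# [('CLM-1', 'adverse_decision_without_reason'), ('CLM-3', 'missing_model_version'),
#  ('CLM-3', 'high_value_without_human_review')]
```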

Many vendors now offer AI governance dashboards that help insurers validate compliance with region-specific regulations through standardized testing and documentation. These tools transform regulatory burdens into manageable processes.

As regulations mature, the distinction between compliant and non-compliant systems grows starker. Insurance companies that choose vendors with robust regulatory frameworks gain both peace of mind and a competitive advantage.


Designing for AI Ethics in Claims: A Technical View

Ethical AI systems don’t emerge by accident. They’re built deliberately with technical foundations that support transparency from the ground up. Beyond ticking regulatory boxes, claims systems must embed ethics directly into their architecture through specific design choices and operational safeguards.

1. System Architecture Principles: Modularity, Audit Logging, and Override Mechanisms

Effective claims systems implement a modular architecture. This allows teams to update, replace, or test components (e.g., fraud detection modules) without overhauling the entire system.
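In code, modularity usually means the pipeline depends on an interface rather than a concrete component. The sketch below uses Python’s `typing.Protocol` for that interface; both detector classes, their version strings, and the scoring logic are hypothetical stand-ins.

```python
from typing import Callable, Protocol

class FraudDetector(Protocol):
    """Interface every fraud module must satisfy (hypothetical)."""
    version: str
    def score(self, claim: dict) -> float: ...

class RuleBasedDetector:
    version = "rules-1.0"
    def score(self, claim: dict) -> float:
        # Toy rule: very late reporting raises suspicion.
        return 0.9 if claim.get("days_to_report", 0) > 60 else 0.1

class ModelDetector:
    version = "gbm-2.1"
    def __init__(self, model_fn: Callable[[dict], float]):
        self._model_fn = model_fn  # any callable returning a probability
    def score(self, claim: dict) -> float:
        return float(self._model_fn(claim))

def triage(claim: dict, detector: FraudDetector, threshold: float = 0.5) -> str:
    # The pipeline only knows the interface, so detectors can be swapped
    # or tested side by side without touching the rest of the system.
    flagged = detector.score(claim) >= threshold
    return f"{'flag' if flagged else 'clear'} ({detector.version})"

claim = {"days_to_report": 75}
print(triage(claim, RuleBasedDetector()))           # flag (rules-1.0)
print(triage(claim, ModelDetector(lambda c: 0.2)))  # clear (gbm-2.1)
```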

AI audit logs are non-negotiable. These logs record all AI activities and decisions within the system. They create transparency by documenting how AI processes claims, what data it accesses, and which rules it applies. Studies show that simply knowing audits exist makes employees much more likely to follow AI guidelines.
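One way to make an audit log trustworthy is to chain entries by hash, so that editing an earlier entry is detectable. The sketch below shows that technique with the standard library; it is tamper-evident rather than tamper-proof, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only log; each entry stores the previous entry's hash, so any
    later edit to an earlier entry breaks the chain."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({"event": event, "prev_hash": prev_hash,
                             "hash": _digest(prev_hash, event)})

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.append({"claim_id": "CLM-7", "action": "data_accessed", "source": "police_report"})
log.append({"claim_id": "CLM-7", "action": "rule_applied", "rule": "R2_PROOF_OF_LOSS"})
print(log.verify())  # True
log.entries[0]["event"]["source"] = "edited"  # simulate tampering
print(log.verify())  # False
```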

Override mechanisms are another key architectural feature, allowing human experts to step in when needed. They include clearly defined escalation paths for complex or potentially problematic claims decisions, so that human judgment prevails in high-risk scenarios.
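An override mechanism can be sketched as explicit escalation criteria plus an override record that preserves the original AI outcome. The thresholds and the rule that every denial escalates are hypothetical; real criteria are a business and regulatory decision.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical escalation criteria.
HIGH_VALUE_LIMIT = 50_000
MIN_CONFIDENCE = 0.8

@dataclass
class ClaimDecision:
    claim_id: str
    ai_outcome: str
    ai_confidence: float
    amount: float
    final_outcome: Optional[str] = None
    overridden_by: Optional[str] = None
    override_reason: Optional[str] = None

def needs_escalation(d: ClaimDecision) -> bool:
    return (d.amount > HIGH_VALUE_LIMIT
            or d.ai_confidence < MIN_CONFIDENCE
            or d.ai_outcome == "deny")

def apply_override(d: ClaimDecision, reviewer: str, outcome: str, reason: str) -> ClaimDecision:
    # The original AI outcome is kept so audits can compare machine and human calls.
    if not reason:
        raise ValueError("an override must state a reason")
    d.final_outcome, d.overridden_by, d.override_reason = outcome, reviewer, reason
    return d

d = ClaimDecision("CLM-9", ai_outcome="deny", ai_confidence=0.91, amount=12_000)
if needs_escalation(d):
    apply_override(d, reviewer="adjuster_42", outcome="approve",
                   reason="Proof of loss received after intake")
print(d.ai_outcome, "->", d.final_outcome)  # deny -> approve
```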

2. Data Drift Detection, Bias Monitoring, Incident Handling Protocols

Many deployed machine learning models lose accuracy over time. This deterioration, known as model drift, happens as real-world data patterns shift away from the training data distribution.

To address the issue, claims management solutions should use:

- Statistical drift detection that compares data distributions

- Model-based detection that compares predictions against baseline performance

- Real-time monitoring that compares live data with training data
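Model-based detection can be as simple as comparing accuracy on recently labeled claims with the accuracy measured at validation time. This minimal sketch assumes true outcomes eventually become known (they often arrive with a delay); the 0.05 tolerance is a hypothetical alert margin.

```python
def performance_drift(baseline_accuracy, recent_outcomes, tolerance=0.05):
    """Compare recent accuracy against the validation baseline.

    `recent_outcomes` is a list of (predicted, actual) pairs.
    Returns None when there is nothing to evaluate yet.
    """
    if not recent_outcomes:
        return None
    correct = sum(pred == actual for pred, actual in recent_outcomes)
    recent_accuracy = correct / len(recent_outcomes)
    return {"recent_accuracy": recent_accuracy,
            "drifted": baseline_accuracy - recent_accuracy > tolerance}

recent = [("fraud", "fraud")] * 80 + [("fraud", "legit")] * 20
print(performance_drift(0.92, recent))  # {'recent_accuracy': 0.8, 'drifted': True}
```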

Time-based analysis helps pinpoint when drift (e.g., from seasonal spikes in claims) began during system operation, which enables precise corrective action. Additionally, traditional incident response processes are not designed for AI-specific failures such as drift or biased outputs, so specialized incident handling protocols may be needed.
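Statistical drift detection and time-based analysis combine naturally: compute a distribution-shift metric per period and report the first period that crosses a threshold. The sketch below uses the Population Stability Index (PSI); the claim-amount bands, the monthly data, and the 0.2 threshold (a commonly cited rule of thumb, not a regulatory standard) are all hypothetical.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions,
    given as lists of proportions over the same bins."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def first_drift_period(baseline, monthly, threshold=0.2):
    """Return the first period whose PSI exceeds `threshold`, else None."""
    for period, dist in monthly.items():
        if psi(baseline, dist) > threshold:
            return period
    return None

# Share of claims per claim-amount band (hypothetical bins and data).
baseline = [0.50, 0.30, 0.15, 0.05]
monthly = {
    "2025-01": [0.49, 0.31, 0.15, 0.05],
    "2025-02": [0.47, 0.31, 0.16, 0.06],
    "2025-03": [0.30, 0.30, 0.25, 0.15],
}
print(first_drift_period(baseline, monthly))  # 2025-03
```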

3. Role of MLOps in Insurance Tech Stacks

MLOps (Machine Learning Operations) provides the framework that manages AI models throughout their lifecycle. Modern insurers are investing heavily in MLOps because it helps scale AI efficiently.

This discipline combines DevOps principles with machine learning governance to keep models accurate, unbiased, and compliant. Effective model versioning helps organizations track changes and provide clear audit trails for regulatory purposes. As claims processes become more automated, MLOps practices help insurers maintain control while expanding their AI capabilities.
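Model versioning is the piece of MLOps that most directly supports audit trails. The in-memory registry below is a hypothetical sketch of the idea; in practice teams usually rely on a dedicated tool (MLflow’s Model Registry is one example) with persistent storage and access control.

```python
import hashlib
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: str
    training_data_sha256: str  # fingerprint of the exact training data
    metrics: dict              # e.g. validation accuracy, fairness ratios
    approved_by: str           # who signed off on deployment

class ModelRegistry:
    """Minimal in-memory registry, for illustration only."""

    def __init__(self):
        self._versions = {}

    def register(self, mv: ModelVersion) -> None:
        key = (mv.name, mv.version)
        if key in self._versions:
            # Registered versions are never overwritten, keeping the trail intact.
            raise ValueError(f"{mv.name} {mv.version} is already registered")
        self._versions[key] = mv

    def history(self, name: str) -> list:
        """All registered versions of one model, in registration order."""
        return [asdict(mv) for (n, _), mv in self._versions.items() if n == name]

registry = ModelRegistry()
data_hash = hashlib.sha256(b"hypothetical training extract").hexdigest()
registry.register(ModelVersion("claims-triage", "2.3.0", data_hash, {"accuracy": 0.91}, "model-risk-team"))
registry.register(ModelVersion("claims-triage", "2.3.1", data_hash, {"accuracy": 0.92}, "model-risk-team"))
print([v["version"] for v in registry.history("claims-triage")])  # ['2.3.0', '2.3.1']
```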

Building Trust with Policyholders and Regulators

Trust is the foundation of successful claims technology adoption. Technical capability alone isn’t enough. Companies must earn trust through consistent transparency and clear communication about how AI systems reach their decisions.

1. Transparency as a Competitive Advantage

Insurers that prioritize transparency in claims management solutions see measurable business benefits. Customers are far more likely to stay loyal to companies that openly explain how AI handles claims, compared to those using opaque systems. Transparency has thus become a true market differentiator instead of just a regulatory requirement.

Several forward-thinking insurers now frame AI transparency as part of their core value proposition rather than treating it as merely a compliance exercise. This strategic approach helps them stand out in a market that’s becoming more commoditized daily.

2. Embedding Transparency into User Experience

Modern claims management systems build transparency right into their user experience through:

- Confidence indicators: Visual representations showing the AI’s certainty level about specific decisions

- Decision path visualization: Interactive flowcharts revealing the logic behind approvals or denials

- Instant appeal options: One-click pathways for policyholders to challenge automated decisions
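Behind such a screen, the claims system needs to assemble one payload per decision. The sketch below shows a hypothetical shape for it: the confidence bands, the step wording, and the appeal route are assumptions, and confidence scores should be calibrated before being shown to customers.

```python
def confidence_label(p: float) -> str:
    # Hypothetical bands for a visual indicator.
    if p >= 0.9:
        return "high"
    if p >= 0.7:
        return "medium"
    return "low"

def decision_view(claim_id: str, outcome: str, confidence: float, path: list) -> dict:
    """Assemble what a policyholder-facing screen could show for one decision."""
    return {
        "claim_id": claim_id,
        "outcome": outcome,
        "confidence": {"value": round(confidence, 2), "label": confidence_label(confidence)},
        "decision_path": [{"step": i + 1, "check": c} for i, c in enumerate(path)],
        "appeal_url": f"/claims/{claim_id}/appeal",  # hypothetical route
    }

view = decision_view("CLM-1042", "partially_approved", 0.74,
                     ["Policy active on date of loss",
                      "Damage estimate exceeds deductible",
                      "Amount capped at coverage limit"])
print(view["confidence"])  # {'value': 0.74, 'label': 'medium'}
```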

These elements build trust and can significantly reduce support queries, because policyholders understand decision rationales without needing a human to explain them.

3. Personalized Explainability as the Future of Claims Automation

Advanced claims management solutions now offer tailored explanations that match each policyholder’s needs. They generate natural language explanations of claim decisions based on customer context and communication preferences, and they adapt their communication style to policyholder profiles and past interactions, making each explanation more relevant.
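A simple, auditable starting point for personalized explanations is a reason-code catalogue with wording in more than one register. The template sketch below stands in for the natural language generation described above; the codes, wording, and “brief”/“detailed” styles are hypothetical. A language model could paraphrase these texts, but fixed templates keep the wording reviewable.

```python
# Hypothetical reason-code catalogue with two registers of wording.
REASON_TEXT = {
    "MISSING_PROOF_OF_LOSS": {
        "brief": "we still need a proof-of-loss form",
        "detailed": ("your policy requires a signed proof-of-loss form before "
                     "payment, and we have not received one yet"),
    },
    "OVER_LIMIT": {
        "brief": "the amount is above your coverage limit",
        "detailed": ("the requested amount exceeds the coverage limit in your "
                     "policy, so the payment is capped at that limit"),
    },
}

def explain_decision(name: str, outcome: str, reason_codes: list, style: str = "brief") -> str:
    """Render a plain-language explanation in the customer's preferred style."""
    reasons = "; ".join(REASON_TEXT[code][style] for code in reason_codes)
    return f"Hi {name}, your claim was {outcome} because {reasons}."

print(explain_decision("Ana", "put on hold", ["MISSING_PROOF_OF_LOSS"]))
# Hi Ana, your claim was put on hold because we still need a proof-of-loss form.
```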

Claims management systems will soon support two-way conversations in which policyholders can ask follow-up questions about the decisions made. This kind of dialogue will closely mirror interactions with a human adjuster.

Summing Up: Claims Automation Without Compromise

The future of insurance operations hinges on ethical AI implementation. Insurers adopting transparent AI systems gain more than regulatory compliance—they earn genuine policyholder trust that boosts retention and satisfaction.

Success in AI-driven claims process optimization depends on balancing technical ability and human judgment. Collaboration with a trusted insurtech provider like Damco Solutions helps businesses merge cutting-edge technology with human expertise and boost efficiency while upholding fairness and transparency. Experts at Damco empower insurers to build explainable AI systems that drive both regulatory compliance and market differentiation.

Going forward, claims platforms that make transparency a core design principle will lead the way. Policyholders who understand their claims decisions and trust the process will create better relationships with insurers. Though technology continues advancing rapidly, ethical principles must remain constant. These principles guide how AI serves both insurers and customers through clear, explainable claims management solutions.