What if we told you that the difference between AI success and failure isn’t in your algorithm, data volume, or computing power, but in how well you label your training data?

Most companies chase the wrong metrics when their AI models falter or underperform. They reach for bigger datasets, more advanced algorithms, and faster processors, but they often overlook the determining factor: data annotation accuracy. For instance, a medical AI model trained on imprecisely labeled X-ray images will misdiagnose, regardless of computational power.

Take another case: an autonomous vehicle’s object detection fails not because of insufficient data volume, but because of inconsistent labeling of pedestrians versus cyclists. These examples show that edge cases, annotation consistency, and domain expertise matter more than raw scale. Companies that understand this shift their focus from annotation quantity to annotation quality. The result? Breakthrough performance with smaller, meticulously labeled datasets.

Table of Contents

Why Is Data Annotation in Machine Learning an Important Prerequisite?

What Is the True Cost of Poor-Quality Data Annotation in Machine Learning?

What Are Some of the Advanced Data Annotation Strategies?

What Is the Significance of a Data Annotation Governance Framework?

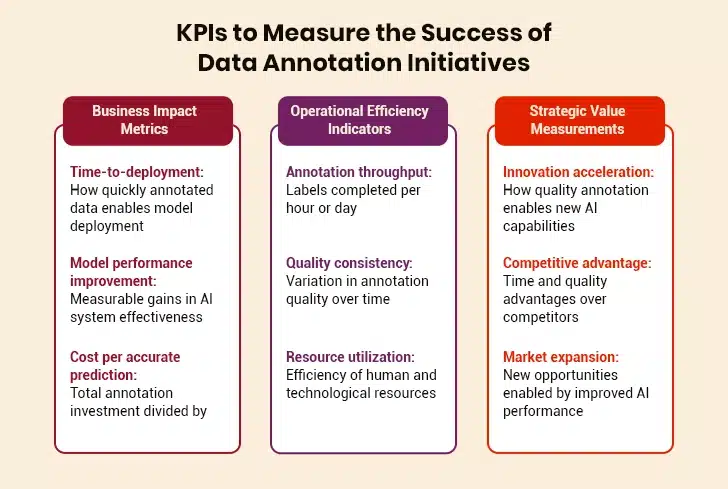

What Are the Quality Assurance Metrics in Data Annotation?

What Are the Industry-Specific Annotation Challenges and Their Solutions?

Which Is the Right Approach in Data Annotation: Building vs. Buying?

Why Is Data Annotation in Machine Learning an Important Prerequisite?

Data annotation is the process of labeling data so that it can be used to train machine learning algorithms. The data can take many forms, such as text, videos, images, and social media content. Labels are applied as tags that mark the attributes a model should learn to recognize. In simple terms, data annotation in machine learning makes the object of interest detectable and identifiable.

Some data annotation techniques include semantic segmentation, lines and splines, bounding boxes, 3D cuboid annotation, polygons, phrase chunking, text categorization, entity linking, and more.
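To make this concrete, here is a minimal sketch of a bounding-box annotation as data, plus the intersection-over-union (IoU) score commonly used to compare two annotators’ boxes for the same object. The `BoxAnnotation` record and its fields are hypothetical, not the schema of any particular labeling tool, and coordinates are assumed to be pixel values with the origin at the top-left.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """Hypothetical bounding-box label: a class tag plus pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box never reports a negative area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for no overlap."""
    overlap_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    overlap_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    intersection = overlap_w * overlap_h
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators box the same pedestrian slightly differently.
first = BoxAnnotation("pedestrian", 10, 20, 50, 100)
second = BoxAnnotation("pedestrian", 12, 22, 52, 102)
print(round(iou(first, second), 3))
```

A team might send box pairs whose IoU falls below an agreed threshold back for review; the threshold itself is a project decision, not a fixed standard.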

While the technical aspects of data annotation are important, the business implications often determine whether a project succeeds or fails. Let’s examine the real costs of poor data quality and why investing in annotation services delivers measurable returns.

What Is the True Cost of Poor-Quality Data Annotation in Machine Learning?

Understanding the repercussions of inaccurately labeled data is important when making decisions about AI and ML models. Beyond degrading model performance, inadequate data annotation also hurts the company’s bottom line: the original effort is wasted, and the labeling often has to be redone from scratch. Let’s explore the consequences of poor data annotation one by one:

1. Financial Impact of Inaccurate Models

Poor data quality can quickly erode any enterprise’s profits. Consider an ecommerce recommendation engine that produces inaccurate recommendations because products were mislabeled. Deploying such a model is even more damaging: it leads to decreased sales, a poor customer experience, and a damaged brand reputation, losses few businesses can afford.

2. Real-World Consequences

The costs extend beyond immediate financial losses. Companies also face delayed product launches and longer development cycles. In sectors like healthcare and automotive, poor annotation quality can have life-threatening consequences, leading to scrutiny and potential lawsuits.

3. Missed Business Opportunities

Lastly, if you think a quick, cheap annotation approach is the right call, reconsider. Otherwise, it could end up costing you 10x more than doing it right the first time. Budget-conscious teams often choose the lowest-cost annotation providers, assuming they can fix quality issues later. This creates a hidden expense trap: poorly annotated datasets require complete relabeling, model retraining, and delayed product launches. Then come the cascading costs, including missed market windows and damaged customer trust.

Moving beyond the business case, it’s essential to understand how annotation strategies have evolved to meet complex AI requirements, since successful AI projects need approaches that maximize both efficiency and accuracy. Let’s explore these more nuanced data annotation strategies in the next section.

What Are Some of the Advanced Data Annotation Strategies?

Simple object detection or text classification labels no longer meet the needs of modern AI applications, so annotation approaches need to evolve alongside AI algorithms. These advanced techniques help organizations build more robust and efficient AI systems. Let’s take a closer look at these data annotation strategies:

I. Active Learning Approaches

Active learning creates recurring cycles where models identify which examples they’re most uncertain about. Humans then annotate those specific cases, and models retrain and improve. This approach can greatly reduce annotation costs while maintaining model accuracy.
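One common way to find the examples a model is “most uncertain” about is entropy-based uncertainty sampling. The sketch below assumes the model has already produced class probabilities for each unlabeled item; the item IDs, probabilities, and function names are hypothetical. In a real cycle, the selected items would go to human annotators and the model would then be retrained.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return up to `budget` item IDs whose predictions have the highest entropy.

    High entropy means probability is spread across classes (the model is unsure),
    so a human label on that item is expected to be the most informative.
    """
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled images (classes: pedestrian, cyclist).
predictions = {
    "img_001": [0.98, 0.02],  # confident
    "img_002": [0.55, 0.45],  # very unsure
    "img_003": [0.70, 0.30],  # somewhat unsure
    "img_004": [0.90, 0.10],  # fairly confident
}

# Route the two most uncertain items to human annotators this round.
print(select_for_annotation(predictions, budget=2))
```

Entropy is only one option; least-confidence or margin-based scores can replace it without changing the rest of the loop.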

II. Multimodal Annotation Techniques

Multimodal AI systems, such as Gemini and GPT-4 Vision, often process multiple data types simultaneously. Developing such systems requires advanced data annotation strategies, including:

- Cross-modal labeling: Ensuring consistency between text, image, and audio annotations for the same content

- Temporal annotation: Labeling time-series data with context-aware tags

- Hierarchical labeling: Creating nested annotation structures for complex scenarios
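As a small illustration of cross-modal labeling, the sketch below flags content items whose modalities received different content-level labels. It assumes a hypothetical layout (content ID mapped to one label per modality) and a label, such as sentiment, that is expected to agree across modalities. Object-level labels would need a looser rule, since an object may legitimately appear in only one modality.

```python
def find_cross_modal_conflicts(
    annotations: dict[str, dict[str, str]],
) -> dict[str, dict[str, str]]:
    """Return items whose modalities were given more than one distinct label."""
    return {
        content_id: by_modality
        for content_id, by_modality in annotations.items()
        if len(set(by_modality.values())) > 1
    }


# Hypothetical sentiment labels for product-review videos, annotated per modality.
annotations = {
    "review_01": {"video": "positive", "audio": "positive", "transcript": "positive"},
    "review_02": {"video": "positive", "audio": "negative", "transcript": "negative"},
}

# Only review_02 is flagged: its video label disagrees with audio and transcript.
print(find_cross_modal_conflicts(annotations))
```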

III. Handling Edge Cases

Professional annotation teams focus heavily on edge cases: the unusual scenarios that can break AI models in real-world applications. These scenarios may make up only a small part of the training data, yet they can account for a disproportionate share of model failures in production.
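One simple, admittedly partial way to surface candidate edge cases is to look for labels that are rare in the training set. The sketch below flags any class whose share of all labels is at or below a threshold; the 2% cutoff, the function name, and the label counts are illustrative assumptions. Rarity is only one signal, since some edge cases are unusual situations within a common class.

```python
from collections import Counter


def rare_classes(labels: list[str], max_share: float = 0.02) -> dict[str, float]:
    """Return each class whose share of all labels is at or below `max_share`."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: count / total
        for label, count in counts.items()
        if count / total <= max_share
    }


# Hypothetical label distribution for a driving dataset (1,000 labels).
labels = ["car"] * 700 + ["pedestrian"] * 250 + ["cyclist"] * 45 + ["wheelchair_user"] * 5

# Classes this rare are candidates for targeted data collection and expert review.
print(rare_classes(labels))
```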

“The key to artificial intelligence has always been the representation.” —Jeff Hawkins, Co-Founder, Palm Computing

At the same time, advanced strategies alone aren’t enough without proper oversight and structure. Successful annotation programs require systematic governance frameworks that ensure consistency, compliance, and continuous improvement. This brings us to the next main section: the importance of data annotation governance.

What Is the Significance of a Data Annotation Governance Framework?

Having robust governance for data annotation is essential for maintaining quality and compliance at scale. After all, a well-designed governance framework lays the foundation for all annotation activities within a company. Let’s explore the importance of an annotation governance framework in detail:

i. Creating Annotation Standards

Successful companies develop comprehensive annotation guidelines that include:

- Consistency protocols: Ensuring all annotators follow identical labeling conventions

- Quality benchmarks: Setting measurable standards for annotation accuracy

- Review processes: Multi-tier validation systems to catch errors before they impact training
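A multi-tier review process can be sketched as a consensus step followed by escalation: several annotators label the same item, the majority label is accepted when agreement is strong enough, and everything else goes to a senior reviewer. The `resolve` function and the two-thirds agreement threshold are hypothetical choices for illustration.

```python
from collections import Counter
from typing import Optional


def resolve(labels: list[str], min_agreement: float = 2 / 3) -> tuple[Optional[str], bool]:
    """Tier 1: accept the majority label if enough annotators agree.

    Returns (label, escalate). When agreement is below `min_agreement`,
    label is None and escalate is True, so a tier-2 reviewer decides.
    """
    if not labels:
        return None, True
    top_label, top_count = Counter(labels).most_common(1)[0]
    if top_count / len(labels) >= min_agreement:
        return top_label, False
    return None, True


# Hypothetical batch: three annotators per item.
batch = {
    "item_1": ["cyclist", "cyclist", "cyclist"],
    "item_2": ["cyclist", "pedestrian", "cyclist"],
    "item_3": ["cyclist", "pedestrian", "scooter"],
}
for item_id, item_labels in batch.items():
    label, escalate = resolve(item_labels)
    print(item_id, "-> tier 2 review" if escalate else f"-> accepted as {label}")
```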

ii. Feedback Loop Implementation

The most effective annotation programs create continuous feedback loops between model performance and annotation quality. When models underperform in specific areas, this information flows back to annotation teams to improve future labeling efforts.
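The feedback loop can start as simply as computing per-class recall on a validation set and routing the weakest classes back to the annotation team for guideline review or additional labels. The function names, the 0.8 recall target, and the sample data below are hypothetical.

```python
from collections import defaultdict


def per_class_recall(gold: list[str], predicted: list[str]) -> dict[str, float]:
    """Recall per gold class: correct predictions / occurrences of that class."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for g, p in zip(gold, predicted):
        totals[g] += 1
        if g == p:
            hits[g] += 1
    return {cls: hits[cls] / totals[cls] for cls in totals}


def classes_needing_attention(
    gold: list[str], predicted: list[str], target: float = 0.8
) -> list[str]:
    """Classes below the recall target, worst first, to send back to annotators."""
    recall = per_class_recall(gold, predicted)
    return sorted((c for c, r in recall.items() if r < target), key=recall.get)


# Hypothetical validation results: cyclists are often predicted as pedestrians.
gold = ["pedestrian"] * 5 + ["cyclist"] * 5
predicted = ["pedestrian"] * 5 + ["cyclist", "pedestrian", "pedestrian", "cyclist", "pedestrian"]
print(classes_needing_attention(gold, predicted))
```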

iii. Industry Compliance Considerations

Different sectors have unique requirements. In the US, healthcare annotation involving patient data must comply with HIPAA, while financial services must adhere to data privacy laws. A robust governance framework addresses these sector-specific needs from the outset.

However, governance frameworks are only as effective as the quality measures they enforce, and comprehensive quality assurance requires specific metrics and methodologies that go beyond traditional accuracy measurements. Wondering what these metrics are? Read about them in the next section.

What Are the Quality Assurance Metrics in Data Annotation?

Measuring annotation quality takes more than a simple accuracy score. Effective quality assurance combines human oversight with automated validation to ensure consistent, reliable results.

- Inter-Annotator Agreement: The gold standard for annotation quality is consistency between different human annotators. High-performing teams achieve 85-95% inter-annotator agreement on well-defined tasks. This metric helps identify areas where annotation guidelines need clarification.

- Automated Quality Checks: Modern annotation platforms incorporate AI-powered quality checks that can identify potential errors in real time. These systems flag inconsistencies, outliers, and potential mislabeling for human review.

- Continuous Improvement Methodologies: Leading organizations implement systematic improvement processes that track quality metrics over time, identify patterns in annotation errors, and continuously refine their approaches based on model performance feedback.
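Raw percent agreement can look high simply because one class dominates, so teams often also report Cohen’s kappa, which corrects for the agreement expected by chance. The sketch below computes both for two annotators and lists the items they disagree on, a simple stand-in for an automated check that routes items to human review. The labels and helper names are hypothetical, and kappa values sit on a different scale from raw percent agreement.

```python
from collections import Counter


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Share of items on which both annotators chose the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected if each annotator labeled at random
    according to their own label frequencies.
    """
    n = len(a)
    p_o = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        # Both annotators used one identical label throughout; kappa is
        # undefined here, and returning 1.0 is a convention of this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def disagreements(ids: list[str], a: list[str], b: list[str]) -> list[str]:
    """Item IDs where the two annotators differ: candidates for human review."""
    return [i for i, x, y in zip(ids, a, b) if x != y]


ids = [f"img_{k}" for k in range(1, 11)]
annotator_1 = ["car"] * 6 + ["truck"] * 4
annotator_2 = ["car"] * 5 + ["truck"] * 5

print(percent_agreement(annotator_1, annotator_2))
print(round(cohens_kappa(annotator_1, annotator_2), 3))
print(disagreements(ids, annotator_1, annotator_2))
```

Here the annotators agree on 90% of items, but kappa is 0.8 once chance agreement is removed, and `img_6` is the single item flagged for review.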

As quality standards continue to rise, the tools and methods for meeting them are advancing quickly. The annotation industry is shifting toward AI-assisted workflows that enhance human expertise rather than replace it, contrary to a common misconception.

Next, different industries face unique challenges that require specialized annotation approaches. Understanding these requirements is important for building effective AI data annotation solutions that meet industry standards and regulatory requirements. Explore the issues unique to each industry in the next section.

What Are the Industry-Specific Annotation Challenges and Their Solutions?

Different industries face unique annotation challenges that require specialized approaches and expertise. Success in these sectors depends on understanding both technical requirements and regulatory landscapes.

A. Precision in Life-Critical Applications in Healthcare

Medical annotation requires specialized knowledge and extreme precision. Radiological image annotation, for instance, needs certified medical professionals who understand anatomical structures and pathological indicators. Privacy compliance adds another layer of complexity, requiring secure annotation environments and strict data handling protocols.


B. Safety-Critical Scenarios in Autonomous Vehicles

Automotive AI annotation involves complex sensor fusion data, including LiDAR, camera feeds, and radar inputs. Annotators must label objects in 3D space while considering motion dynamics and potential safety scenarios. The challenge lies in annotating rare but critical events like emergency situations and unusual road conditions.

C. Regulatory Compliance and Fraud Detection in Financial Services

Financial annotation requires a thorough understanding of complex document structures, regulatory requirements, and fraud patterns. Annotators must be trained to recognize subtle indicators while maintaining strict confidentiality and compliance with financial regulations.

Once you understand industry requirements, the next important decision involves finding the optimal approach for your organization. Whether to build internal capabilities or partner with external providers depends on multiple strategic and operational factors.

Which Is the Right Approach in Data Annotation: Building vs. Buying?

Choosing between in-house annotation capabilities and outsourcing requires careful consideration of multiple factors, and the decision affects both short-term costs and long-term strategic flexibility. If you’re undecided, the factors below can help you make the right call:

| When to Build In-House | When to Outsource |
|---|---|
| Data contains highly sensitive or proprietary information | You need specialized tools and expertise without investing in them |
| The business requires extremely specialized domain knowledge | The business needs to scale quickly without long hiring cycles |
| Annotation requirements are ongoing and predictable | Annotation requirements are project-based or variable |
| The company has sufficient budget to hire and train specialized teams | Cost optimization is a priority |

Hybrid Approach

Many companies with successful AI and ML initiatives adopt a hybrid approach: they keep core annotation capabilities in-house while outsourcing overflow work or specialized tasks. This provides flexibility while maintaining control over critical processes.

With the build-versus-buy trade-offs laid out, it’s worth looking more closely at outsourcing, since this choice affects both operational efficiency and strategic flexibility. To judge which option is “best” for you, explore the major benefits of outsourcing your data annotation project, discussed in the next section.


Why Is Outsourcing Data Support for AI/ML a Viable Option?

Partnering with an experienced data annotation company lets businesses leverage the combined capabilities of skilled human annotators and AI-powered data labeling tools. This collaboration helps businesses become more agile and accelerates AI/ML model development, which in turn drives greater operational excellence. Outsourcing makes particular sense because performing annotation in-house is costly unless the company’s core competency is building AI solutions.

For many companies, hiring an in-house annotation team drains resources. Professional data annotation services are therefore a smarter way to optimize costs and maximize efficiency. Businesses can reap the advantages listed below:

1. Domain-Specific Workflows

Professional providers have domain-specific workflows and multi-dimensional perspectives. They also have streamlined business processes, proprietary tools, and proven operational techniques that are essential to ensure industry-compliant data annotation.

These vendors understand their clients’ needs and the AI model’s intended use case, and their annotators prepare training datasets accordingly, using the right-fit tools and labeling techniques. They tailor their operational approach, adhere to strict security protocols, and maintain high standards of data confidentiality to ensure quality at every step.

2. Professional Excellence

Creating training data that reflects the model’s real-world use case requires the expertise of professional annotators, data scientists, and linguistic experts. Data annotation outsourcing companies maintain pools of accredited annotators who create pixel-perfect training datasets with the quality of the resulting model’s predictions in mind. As a result, businesses get better outcomes from their deployed models.

3. Assured Accuracy

Collecting and processing streams of structured and unstructured data is challenging for many organizations, often because they lack an understanding of how the model will behave. The result is failed attempts to build better training datasets. Data annotation service providers, in contrast, prioritize accuracy and apply consistent, high-quality, precise annotations to the data streams, which accelerates the client’s AI/ML model development and deployment.

Don’t just take our word for it: market projections put the data annotation outsourcing market at USD 3.6 billion by 2031, growing at a CAGR of 33.2%.


Summing Up

An AI model is only as smart as the data it is trained on. The key, then, is the right training data, delivered consistently and at scale, for NLP, deep learning, and computer vision models. Reputable data annotation companies can deliver quality results, helping organizations explore new business opportunities through AI and ML models.

Investing in the right data annotation solutions gives companies steady streams of high-quality, precise, and relevant training datasets for their machine learning algorithms. In turn, they can gain in-depth insights from large datasets in real time, scale their operations, and stay ahead of the competition.