Have you ever noticed that every time you drive, your brain processes a constant stream of information? It identifies a stop sign, gauges the speed of a cyclist, notices a traffic light change, and reacts to a sudden lane change. In short, the brain makes thousands of micro-decisions in real time!

Autonomous vehicle companies have to replicate this not for a single afternoon commute but across millions of hours of driving footage every day. They process enormous volumes of data daily, including road signs, pedestrians, vehicles, and traffic patterns, to train their autopilot systems. The same holds beyond self-driving cars: think of an autonomous drone scanning a city skyline or a robotic warehouse system sorting thousands of packages an hour.

Companies developing autopilot systems, autonomous drones, and warehouse robots require large volumes of accurately labeled training data to teach these models. To match the speed, scale, and precision required for building such robust AI models, LLM data labeling is the best bet.

But what if this task were left to traditional human annotation? Thousands of annotators would be working around the clock, which would make the entire process far more costly. And that is before considering the speed required to process such huge volumes of data in real time. LLM data labeling makes it much easier to annotate data at this scale.

Moreover, the decisions made by AI and ML models, regardless of their field, depend on high-quality annotated datasets delivered with almost zero delay. In such high-speed environments, even a few seconds of lag in annotation can compromise safety, disrupt operations, and delay delivery times. That is why leaders need to rethink their data labeling workflows.

“Artificial intelligence holds immense promise for tackling some of society’s most pressing challenges, from climate change to healthcare disparities. Let’s leverage AI responsibly to create a more equitable world.” Katherine Gorman, Co-Founder and Executive Producer, Talking Machines

Table of Contents

A Quick Glance at the Current Data Labeling Landscape

Why Are LLMs Suitable for Annotation?

What Are the Use Cases of LLMs in Data Labeling?

What Are the Challenges and Considerations of LLM Annotation?

Why Is Full Automation Driven by LLM Annotation Not Always Optimal?

A Quick Glance at the Current Data Labeling Landscape

To appreciate what LLMs change in data labeling, let’s first understand the current scenario. Just a few years ago, data was tagged and labeled manually, often through the human-in-the-loop approach. This was the gold standard in the data labeling industry, as human annotators can understand cultural nuances, interpret ambiguous situations, and apply domain-specific expertise. What’s more, they can read between the lines in a text, identify minute differences between objects that appear similar, and make sense of complex, unstructured datasets.

While the human-in-the-loop labeling approach is viable for small-scale annotation projects, it may not work well for large-scale projects, because this quality and precision come with a price tag. Large-scale projects involve thousands of professional labelers, each requiring onboarding, training, and periodic quality reviews. As a result, the data labeling process not only takes a long time but also becomes prone to errors caused by fatigue. Besides, the costs of recruiting and training seasoned data labelers are skyrocketing.

Another issue in traditional data labeling is balancing speed with quality. Although multiple validation steps, expert reviews, and adherence to rules help ensure the accuracy of the labels, these processes extend delivery timelines. In the race against time, many companies willingly trade off quality, and this rushed labeling introduces errors that degrade the AI model’s performance.

While some companies give up on the idea of implementing AI and ML models in their workflows, others that choose to improve the performance of subpar models have to bear the cost of retraining cycles. Another important issue is scalability, which becomes almost unmanageable as datasets grow into millions or billions of data points.

Various alternative labeling approaches, such as active learning, rule-based systems, crowdsourcing platforms, and traditional machine learning, help overcome these issues, but only to some extent. Many of these tools follow rule-based criteria and lack the ability to understand the nuances of human language. While they can be precise within their rules, they may not work well where nuanced understanding is required, especially in areas like legal document processing and scientific nomenclature.

Automated Annotation Tools vs. LLM Annotation Method Comparison

| Aspect | LLM Annotation | Active Learning (Traditional ML) | Rule-Based Systems | Crowdsourcing Platforms |
|---|---|---|---|---|
| Speed | 20x faster than humans | 3-5x faster than random sampling | Near real-time | 2-3 days typical turnaround |
| Cost | 7x cheaper than humans | 40-60% cost reduction vs. full labeling | Lowest operational cost | $0.05-$0.50 per task |
| Quality | 88.4% agreement with ground truth | 85-90% with proper sampling | 70-80% (rule-dependent) | 75-85% with quality controls |
| Scalability | Millions of records/day | Limited by model training cycles | Unlimited but rigid | Limited by workforce availability |
| Setup Time | Hours to days | Weeks for model training | Days to weeks for rule creation | Days for task design |
| Domain Adaptability | High with prompt engineering | Moderate, requires retraining | Low, new rules needed | High with proper instructions |
| Consistency | Very high | High within training distribution | Perfect, rule-based | Variable due to human factors |
| Explainability | Limited (black box) | Model-dependent | Full transparency | Human reasoning available |
| Best Use Cases | Text classification, named entity recognition | Limited labeled data scenarios | Simple pattern matching | Complex subjective tasks |

As is evident, the annotation industry needs a powerful solution to overcome the limitations of speed, scalability, and cost. That’s where LLMs as annotators stand out. Moreover, the global data annotation tools market is projected to grow at a CAGR of more than 26.2% and exceed USD 32.54 billion in revenue by 2037.

From this figure, it is clear that businesses across industries such as healthcare, automotive, financial services, and ecommerce are gearing up to implement AI models in their workflows. LLM-enabled data labeling services will play a key role in training these models. Let’s explore the factors that make LLMs such a strong candidate for data labeling projects.

Why Are LLMs Suitable for Annotation?

LLMs change the equation when it comes to balancing speed, scale, quality, and cost in the data labeling process. Having undergone massive pre-training, LLMs handle natural language well and can quickly adapt to project-specific needs. Several other factors strengthen the case for LLMs as annotators. Let’s take a closer look at them:

I. Pre-Training Advantage

LLMs understand multiple domains and patterns, as these models are first trained on diverse datasets. The language models learn from millions of text documents, code repositories, academic papers, and web content, among others.

This broad exposure gives them detailed knowledge and a contextual understanding of natural language, which is invaluable for data annotation tasks. A human annotator who is new to a domain, by contrast, would have to build that context from scratch!

II. Unstructured Data Processing Capabilities

Most of the data generated today is unstructured: social media posts, multimedia files, sensor data, log files, and so on. Thanks to LLMs’ ability to handle unstructured data formats, companies can use this data to build and train multimodal AI. LLMs can also handle multi-layered contexts that would otherwise challenge traditional annotation methods.

Thus, whether the task is to process multi-page instruction documents, understand hierarchy and classification, or maintain consistency when labeling large datasets, an LLM-based annotation tool can do it all!

III. Complex Guideline Interpretation

What makes LLMs the most appropriate candidate for the next data labeling project is their ability to understand and apply detailed annotation guidelines that would otherwise require extensive human training.

The annotation guidelines can include multiple criteria, conditional rules, and edge-case handling. Businesses thus get a dual benefit: less onboarding time than human annotators require, and more consistent labeling.
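To make this concrete, here is a minimal sketch of how guidelines with label definitions, conditional rules, and edge cases might be rendered into a prompt. The `Guideline` class, its field names, and the prompt wording are all hypothetical, chosen for illustration; no particular LLM API is assumed, and the function only builds the string you would send.

```python
from dataclasses import dataclass, field


@dataclass
class Guideline:
    """Hypothetical container for one project's annotation rules."""
    task: str
    labels: dict[str, str]  # label name -> definition
    conditional_rules: list[str] = field(default_factory=list)
    edge_cases: list[str] = field(default_factory=list)


def build_prompt(guideline: Guideline, text: str) -> str:
    """Render the guideline and one item into a single prompt string."""
    lines = [f"Task: {guideline.task}", "", "Labels:"]
    lines += [f"- {name}: {definition}" for name, definition in guideline.labels.items()]
    if guideline.conditional_rules:
        lines += ["", "Rules:"]
        lines += [f"{i}. {rule}" for i, rule in enumerate(guideline.conditional_rules, 1)]
    if guideline.edge_cases:
        lines += ["", "Edge cases:"]
        lines += [f"- {case}" for case in guideline.edge_cases]
    lines += ["", "Text to label:", text, "", "Answer with exactly one label name."]
    return "\n".join(lines)
```

Keeping the guideline as data rather than free text means the same rules can be versioned, reviewed by domain experts, and reused across every item in a batch.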

IV. Structured Output Mastery

Machine learning models have to be fed structured data. LLMs can generate consistent JSON objects, maintain proper formatting standards, and follow predefined schemas to create the right input sets for ML algorithms. This capability is important for applications where downstream systems require specific data structures and formats.

In other words, LLM-based labeling tools help remove an entire step from the data annotation workflow by generating outputs in JSON, XML, and other machine-readable formats. As a result, businesses can directly ingest the labeled datasets produced from LLM annotation tools into AI training pipelines.
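Even when a model is asked for JSON, it is worth checking each response before it enters a training pipeline. The sketch below assumes a hypothetical schema with `id`, `sentiment`, and `confidence` fields and an assumed label set; adapt both to your own project.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}  # assumed label set
REQUIRED_FIELDS = {"id", "sentiment", "confidence"}       # assumed schema


def parse_annotation(raw: str) -> dict:
    """Parse one model response and check it against the assumed schema.

    Raises ValueError on any problem so the caller can retry or escalate.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    sentiment = data["sentiment"]
    if not isinstance(sentiment, str) or sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unknown sentiment: {sentiment!r}")
    confidence = data["confidence"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return data
```

Rejected responses can be retried with the error message appended to the prompt or sent to a human reviewer.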

Now that we have seen what makes LLM data annotation tools so well suited to training AI models, it is time to explore their practical use cases. Let’s do this in the next section.

What Are the Use Cases of LLMs in Data Labeling?

The applications of LLM-based annotation are vast and varied, each presenting unique opportunities for automation and efficiency gains. And business leaders and stakeholders familiar with these use cases can easily identify where LLM data annotation can deliver the most value. So, let’s get started:

i. Text Classification and Sentiment Analysis

LLMs can process customer reviews, support tickets, social media posts, and survey responses. They can differentiate between subtle sentiment variations and identify emotional undertones. Even more, they can categorize content across multiple dimensions simultaneously.

LLMs can classify product reviews based on sentiments, feature mentions, and purchase intent in ecommerce platforms. Given this capability, ecommerce businesses can build more advanced recommendation systems and utilize them to optimize customer experience.

ii. Conversational Data Labeling

Extensively annotated chat transcripts, dialogue flows, and customer service interactions are necessary to build high-performing conversational AI models. It is through these datasets that an AI model produces human-like responses. Here, LLMs identify user intent and extract key information from the interactions. They can also classify conversation outcomes and map dialogue states.

This detailed annotation helps with conversational data analysis and is essential to developing chatbots, virtual assistants, and automated customer support systems. Additionally, LLMs can identify conversation quality metrics, emotional states, and escalation triggers.

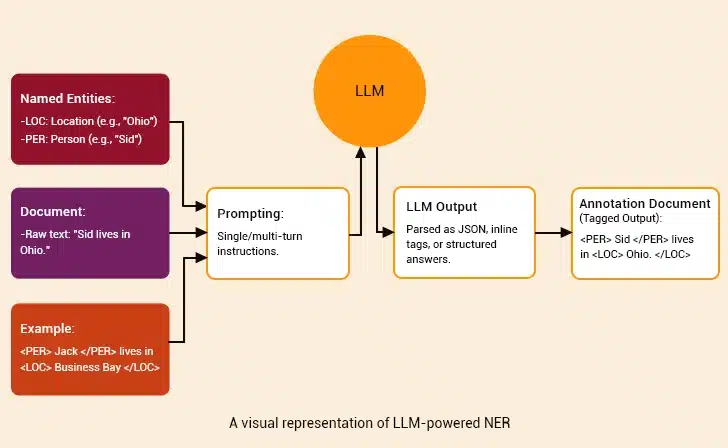

iii. Named Entity Recognition

Named entity recognition (NER) is an important natural language processing technique that identifies named entities in datasets. In essence, it involves extracting entity types such as people, organizations, locations, dates, and monetary values from data.

One area where LLM-based NER delivers value is legal document processing. Here, LLM annotation tools identify contract parties, dates, financial terms, and regulatory references. Another is medical text analysis, where LLM-based NER is used to extract patient information, identify drugs, and detect diseases.
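Many NER training formats need character offsets, and an LLM usually returns only the entity strings. One simple approach, sketched below, is to look up each returned entity in the source text: entities found verbatim get offsets, and entities that do not appear in the text are set aside for review. The entity shape (`text` and `type` keys) is an assumption for illustration.

```python
def ground_entities(text: str, entities: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split entities into those found verbatim in `text` and those that are not.

    Each entity is a dict with "text" and "type" keys (an assumed shape).
    Grounded entities gain "start" and "end" character offsets.
    """
    grounded, rejected = [], []
    cursor = 0  # search forward first so repeated mentions get distinct offsets
    for entity in entities:
        surface = entity["text"]
        if not surface:
            rejected.append(entity)
            continue
        start = text.find(surface, cursor)
        if start == -1:
            start = text.find(surface)  # fall back to searching from the top
        if start == -1:
            rejected.append(entity)  # not in the source: possible hallucination
            continue
        end = start + len(surface)
        grounded.append({**entity, "start": start, "end": end})
        cursor = max(cursor, end)
    return grounded, rejected


contract = "Acme Corp will pay Jane Doe USD 5,000 by 1 March 2025."
found, suspicious = ground_entities(contract, [
    {"text": "Acme Corp", "type": "ORG"},
    {"text": "Jane Doe", "type": "PERSON"},
    {"text": "John Smith", "type": "PERSON"},  # not present in the contract
])
```

Exact string matching is deliberately strict. It will also reject entities the model has normalized (for example, a reformatted date), which is usually a safe default for a first pass.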

iv. Multimodal Annotation Support

Multimodal AI is gradually becoming mainstream, and LLMs are the best bet for labeling training datasets for such models. When combined with computer vision models, LLMs can provide detailed image captions and annotate visual content such as images, videos, and graphics with contextual information. They can also create structured metadata for multimedia datasets.

In the context of audio transcription, LLM-based annotation tools can identify speakers and add semantic formatting to the transcriptions. Recent studies show a cost reduction of roughly 10x, turning months of paid annotation into hours of work on one GPU. Efficiency gains of this size are too large for any company to ignore!

These were some of the practical applications where LLM annotation delivers value. However, LLM-based labeling is not without challenges and considerations. The next section provides a closer look at these issues.


What Are the Challenges and Considerations of LLM Annotation?

LLM annotation is undoubtedly beneficial, yet there are certain limitations. Understanding these roadblocks is important so that leaders can ensure successful implementation and get an assured ROI on their investments. Here we go:

a. Accuracy and Hallucination Risks

LLMs may at times generate plausible but incorrect annotations, particularly in specialized domains or edge cases. These hallucinations can be subtle and difficult to detect through automated quality checks.

For instance, the LLM can misclassify technical terminology in legal documents, or it may incorrectly attribute a sentiment in a sarcastic text. It can also make entity recognition errors in domain-specific content that requires specialized knowledge beyond the model’s training data.
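One common mitigation, which is not specific to this article, is to label each item several times and keep only labels the model agrees on with itself. The sketch below assumes you have already collected repeated labels for one item; the `min_agreement` threshold of 0.8 is an arbitrary illustrative value.

```python
from collections import Counter


def vote(labels: list[str], min_agreement: float = 0.8) -> tuple[str, float, bool]:
    """Return (majority label, agreement ratio, needs_review) for one item."""
    if not labels:
        raise ValueError("need at least one label")
    label, count = Counter(labels).most_common(1)[0]
    agreement = count / len(labels)
    return label, agreement, agreement < min_agreement
```

Agreement across runs does not prove a label is correct, since a model can be consistently wrong, so this works best as a filter that decides which items a human should look at first.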

b. Bias in Pre-Trained Models

Bias is an important concern in data labeling, and LLM annotation systems may unintentionally inherit it from their training data. If this goes unnoticed, the bias can compound. It can manifest as demographic disparities in classification accuracy, cultural insensitivity in content categorization, or systematic errors in entity recognition for underrepresented groups. In short, bias can widen existing societal gaps.
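A simple first check for demographic disparities is to compare LLM labels with human gold labels separately for each group. The sketch below assumes a small audited sample where each record carries hypothetical `group`, `llm_label`, and `gold_label` fields.

```python
from collections import defaultdict


def accuracy_by_group(records: list[dict]) -> dict[str, float]:
    """Accuracy of LLM labels against gold labels, split by a group attribute."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for record in records:
        total[record["group"]] += 1
        correct[record["group"]] += int(record["llm_label"] == record["gold_label"])
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())
```

A large gap is a signal to investigate, not a verdict: small groups give noisy estimates, so the sample size per group matters.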

c. Domain-Specific Knowledge Gaps

Even though LLMs undergo extensive pre-training, they may struggle to label datasets in specialized domains without additional fine-tuning, because every industry has different requirements. For instance, a general-purpose LLM often struggles with industry-specific jargon such as medical terminology, legal precedents, and scientific nomenclature.

Moreover, industries like financial services, healthcare, and legal services require domain expertise when labeling datasets, and organizations rarely find such capabilities in off-the-shelf LLMs. Besides, what works for a medical AI does not necessarily work for an AI system intended for financial services.

d. Lack of Explainability

LLM annotation decisions often lack transparency. This makes it difficult to understand why specific labels were assigned. Such opacity becomes challenging for compliance-heavy industries where auditable annotation processes are mandatory.

Companies operating under regulatory frameworks must track and document their annotations to maintain accountability for labeling decisions. This is harder when using black-box LLM systems for data processing.
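One way to make LLM labeling more auditable is to log a record for every decision. The sketch below shows a hypothetical record layout: it stores a hash of the exact prompt, the model name, the label, and any rationale the model was asked to provide. All field names are assumptions for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(item_id: str, prompt: str, model_name: str,
                 label: str, rationale: str) -> str:
    """Build one JSON line describing how a label was produced."""
    record = {
        "item_id": item_id,
        "model": model_name,
        # Hash rather than store the prompt, in case it contains sensitive data.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "label": label,
        "rationale": rationale,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, sort_keys=True)
```

Note that a model-written rationale is a self-report, not a guaranteed account of why the label was chosen. The record supports traceability; it does not by itself make the model explainable.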

e. Data Privacy Concerns

The most pressing concern is data privacy and security, as sensitive data is processed through third-party LLM APIs. Healthcare records, financial information, and personal data should be handled carefully to maintain compliance with regulations like HIPAA, GDPR, CCPA, and other data protection frameworks.

Furthermore, companies must evaluate the privacy implications of cloud-based LLM data labeling services versus on-premises solutions for sensitive annotation workflows.
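When data does have to leave your environment, one precaution is to mask obvious identifiers first. The sketch below uses a few deliberately simple regular expressions (email addresses, US-style Social Security and phone numbers). These patterns are illustrative assumptions and are nowhere near sufficient on their own for HIPAA, GDPR, or CCPA compliance.

```python
import re

# Deliberately simple, assumed patterns; real projects need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders and report how many of each were found."""
    counts = {}
    for name, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{name}]", text)
        counts[name] = found
    return text, counts


masked, counts = redact("Contact jane.doe@example.com or 555-123-4567. SSN 123-45-6789.")
```

Names, addresses, and free-text medical details will slip past patterns like these, which is one reason sensitive workflows often favor on-premises models.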

The good news is that these challenges can be addressed with careful planning. Companies should use bias detection and mitigation strategies to ensure fair and equitable annotation outcomes across diverse datasets and user populations. Most importantly, they should combine specialized training with human oversight to get accurate annotation results. This brings us to the next important question: is fully automated data labeling the right move?


Why Is Full Automation Driven by LLM Annotation Not Always Optimal?

Despite the compelling use cases and benefits of LLM annotation, complete automation rarely represents the optimal approach for important applications. Understanding when and how to incorporate human oversight ensures annotation quality while maximizing efficiency gains. Let’s discuss this in detail:

The Human-AI Collaboration Framework

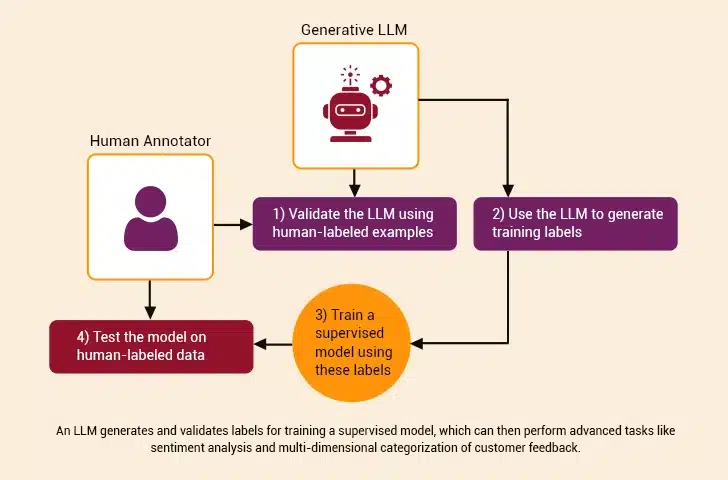

Complete automation, while tempting from an efficiency perspective, rarely represents the optimal solution for mission-critical annotation projects. The most successful data annotation companies adopt a “review and approve” workflow.

Here, AI systems handle initial labeling while human experts validate and correct outputs. This hybrid approach combines the speed advantages of automated annotation with the quality assurance benefits of human oversight.

Quality Assurance Through Human Validation

Human validators bring irreplaceable value to the annotation process. They can catch subtle errors, understand contextual nuances, and identify systematic biases that automated systems might miss.

Even more, they can detect when LLMs make consistent errors across similar data types and provide feedback that improves future annotation accuracy. This validation layer is very important for high-stakes applications where annotation errors could have drastic downstream consequences.
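To track how well LLM labels match human validators on an audited sample, a chance-corrected statistic such as Cohen's kappa is often more informative than raw agreement. Below is a minimal stdlib sketch for two label lists of equal length; the choice of kappa is an illustrative suggestion, not something prescribed above.

```python
from collections import Counter


def cohens_kappa(llm_labels: list[str], human_labels: list[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if not llm_labels or len(llm_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(llm_labels)
    observed = sum(a == b for a, b in zip(llm_labels, human_labels)) / n
    llm_counts, human_counts = Counter(llm_labels), Counter(human_labels)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(
        llm_counts[label] * human_counts[label]
        for label in llm_counts.keys() | human_counts.keys()
    ) / (n * n)
    if expected == 1.0:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)
```

For example, if the two lists agree on 3 of 4 items and chance agreement is 0.5, kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5. Computing kappa per data type can help surface the consistent, category-specific errors that reviewers are asked to catch.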

Balancing Speed with Accuracy Through Hybrid Models

The most effective annotation workflows use LLMs for initial labeling and structure generation while reserving human expertise for complex cases, quality validation, and edge case handling. This approach maximizes throughput while maintaining the accuracy standards required for production AI systems.
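A hybrid workflow like this can be sketched as a simple routing step: labels above a confidence threshold are accepted automatically, and the rest go to a human review queue. The `confidence` field and the 0.85 threshold are assumptions for illustration; where that score comes from, and how well calibrated it is, depends on your setup.

```python
def route_annotations(annotations: list[dict], threshold: float = 0.85) -> tuple[list[dict], list[dict]]:
    """Split annotations into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for annotation in annotations:
        if annotation["confidence"] >= threshold:
            auto_accepted.append(annotation)
        else:
            needs_review.append(annotation)
    return auto_accepted, needs_review


batch = [
    {"id": 1, "label": "cat", "confidence": 0.97},
    {"id": 2, "label": "dog", "confidence": 0.60},
    {"id": 3, "label": "cat", "confidence": 0.85},
]
accepted, review_queue = route_annotations(batch)
```

Lowering the threshold raises throughput and risk; raising it sends more work to reviewers. Periodically sampling the auto-accepted queue for human checks helps confirm that the threshold is still appropriate.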

Final Words

As the annotation market expands at double-digit annual growth rates, the ability to scale without compromising quality is a necessity for businesses. A practical way to label datasets at scale without letting costs spiral is to invest in LLM-based data annotation solutions. Blending this with the human-in-the-loop approach further ensures quality and precision in the data labeling process.

Organizations that know how to harness the strengths of both LLMs and human annotators in the data annotation process will lead the race! That’s because LLMs do the heavy lifting while humans provide the oversight and judgment needed to keep AI systems fair, accurate, and adaptable. When implemented correctly, this hybrid model can unlock faster, more reliable, and more equitable AI across various industries.