From predicting trends to driving autonomous systems, AI and ML applications are making business processes more efficient than ever before. These applications require high-quality and accurately tagged data to function effectively. This tagged data lays the foundation for successful AI/ML model development, enabling them to understand data and the environment and perform the desired tasks. As companies look forward to harnessing the potential of AI and ML applications, it’s crucial to resolve the data annotation vs. data labeling debate.

Data Annotation vs Data Labeling: What Really Matters for Scalable, Enterprise-Grade AI Systems?

Data Annotation vs Data Labeling: Key Differences

Though “data annotation” and “data labeling” are often used interchangeably, they aren’t the same. There are slight differences in approach, process, steps, methods, and outcomes. For instance, data annotation is a broader term, and labeling is a part of it. Stakeholders must know these differences to make strategic choices when developing AI and ML models. Let’s get started:

1. Conceptual Foundation

Data Labeling: Data labeling is a simple process focusing on classifying data. It involves adding predefined tags and categories to specific data points or complete datasets. This process usually answers the basic question: “What is this?” In a nutshell, labeling has limited context and aims to apply standard classifications across datasets.

Data Annotation: Unlike labeling, annotation is a broader approach that enriches data. In addition to classifying data, it adds layers of information, context, and metadata to training data. This process answers more detailed questions such as: “What specific features define this item?” and “How does this object connect to other elements in the dataset?” Annotation therefore adds more depth and context to the training datasets.

2. Process Complexity and Depth

Labeling Processes: Labeling involves binary (simple yes/no) and categorical classification tasks. For example, checking if an image has a car (yes or no) or sorting customer feedback into good, bad, or neutral categories. These tasks often have straightforward right or wrong answers and don’t need much interpretation.

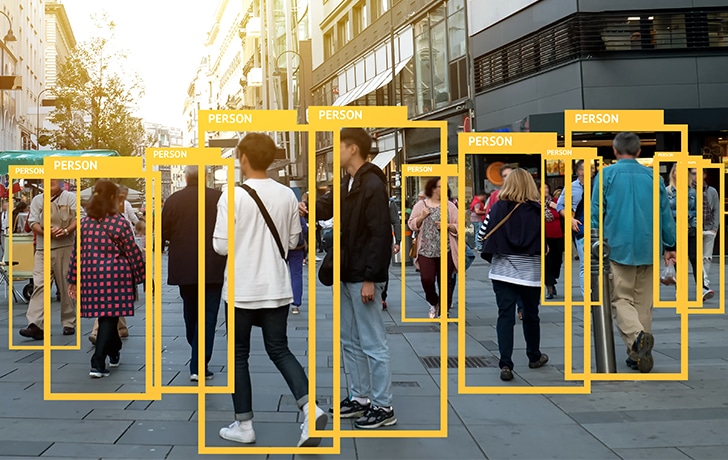

Annotation Processes: Annotation involves adding more complex information to make the training dataset comprehensive. For example, objects within an image are annotated using bounding boxes and segmentation masks. Likewise, text annotation involves tagging sentiments, entities, and relationships within the text. These tasks often require domain-specific expertise, judgment, and detailed guidelines to be executed efficiently.

3. Technical Implementation

Labeling Implementation: Simple tools are used for labeling since the process is very straightforward. Moreover, labeling tasks can also be automated through rule-based systems or basic machine learning algorithms. The results are properly structured, categorical data that aligns with the supervised learning model’s requirements.
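As a rough illustration of the rule-based automation mentioned above, the following minimal sketch assigns one categorical label per feedback item. The keyword sets and the function name `rule_based_label` are hypothetical and exist only for this example; a real project would derive its rules from its own labeling guidelines.

```python
# Hypothetical keyword rules, invented for illustration only.
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "terrible", "broken"}


def rule_based_label(text: str) -> str:
    """Assign one categorical label ("good", "bad", "neutral") from keyword hits."""
    # Normalize: lowercase each word and strip trailing punctuation.
    words = {w.strip(".,!?").lower() for w in text.split()}
    pos = len(words & POSITIVE)
    neg = len(words & NEGATIVE)
    if pos > neg:
        return "good"
    if neg > pos:
        return "bad"
    return "neutral"


feedback = [
    "I love the new dashboard!",
    "The export is broken.",
    "It arrived on Tuesday.",
]
labels = [rule_based_label(t) for t in feedback]
print(list(zip(feedback, labels)))
```

The output is flat, categorical data: one tag per item, with no information about why the tag applies.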

Annotation Implementation: Annotation requires specialized tools tailored to specific data types and annotation goals. The process creates rich metadata and contextual information. It involves complex JSON objects, bounding boxes, segmentation masks, relationship graphs, and more. In short, annotation goes beyond mere “object class labels” to provide a deeper and nuanced understanding of the input.
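To make the contrast concrete, here is a minimal sketch of what a label record and an annotation record might look like for the same image. The field names (`bbox`, `attributes`, `relationships`), the file name, and the `[x, y, width, height]` box layout are assumptions made for this example, not a standard schema.

```python
import json

# A label: one categorical answer for the whole image.
label_record = {"image": "street_001.jpg", "label": "car"}

# An annotation: classes plus spatial detail, attributes, and relationships.
# bbox is assumed to be [x, y, width, height] in pixels.
annotation_record = {
    "image": "street_001.jpg",
    "objects": [
        {"id": 1, "class": "car", "bbox": [34, 120, 200, 90],
         "attributes": {"occluded": False}},
        {"id": 2, "class": "pedestrian", "bbox": [260, 100, 40, 110],
         "attributes": {"occluded": True}},
    ],
    "relationships": [{"subject": 2, "predicate": "in_front_of", "object": 1}],
}


def to_label(annotation: dict) -> dict:
    """Collapse an annotation into a coarse label: the class of the largest box."""
    largest = max(annotation["objects"], key=lambda o: o["bbox"][2] * o["bbox"][3])
    return {"image": annotation["image"], "label": largest["class"]}


print(json.dumps(to_label(annotation_record)))
```

The conversion only works in one direction: a label can be derived from an annotation, but the boxes, attributes, and relationships cannot be recovered from a label.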

4. Primary Applications

Labeling Applications: Labeling excels in scenarios that require simple classification, such as analyzing sentiment, categorizing content, and detecting basic objects. These tasks involve straightforward categories with little ambiguity and form the backbone of many machine learning processes.

Annotation Applications: Conversely, annotation is key for advanced AI applications, including computer vision systems that identify objects, language processing models for entity recognition, and complex AI systems that involve multiple data types. These applications require deeper context and additional information, which is sufficiently provided through the annotation process.

| Aspect | Data Labeling | Data Annotation |
|---|---|---|
| Detail Level | Categorical or binary | Provides spatial/contextual information |
| Complexity | Relatively straightforward | More complex, requires domain-specific expertise |
| Model Enablement | Accurate categorization | Granular-level understanding |
| Use Case | Sentiment analysis, basic image categorization, text classification, etc. | Outlining semantic segments, capturing nuanced attributes, drawing bounding boxes around objects |
| Example | Identifying and labeling objects within an image | Object detection, image segmentation, autonomous systems, etc. |

It is evident that annotation and labeling aren’t synonymous; there are subtle distinctions between the two processes that can impact the AI and ML model’s development and outcomes. Let’s consider the real-world example of medical imaging to better understand these differences.

Understanding the Key Difference: The Real-World Case of Medical Imaging

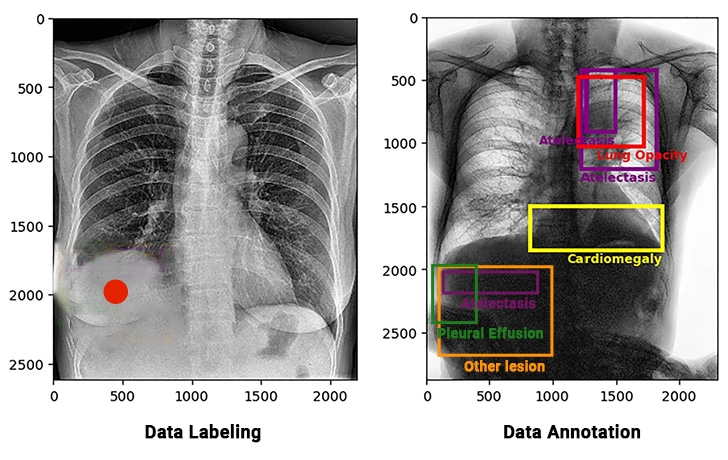

Consider a healthcare organization developing a computer vision model for medical imaging that identifies diseases early so that clinicians can improve patient outcomes. To train this model, the organization can choose labeling or annotation:

Labeling: Labeling may tag an X-ray image as “showing pneumonia” or “not showing pneumonia”—a simple yes/no classification.

Annotation: Unlike labeling, annotation outlines the affected lung areas and highlights density patterns. Annotators with subject matter expertise in healthcare also add notes on severity and any signs that the condition is worsening. This thorough approach allows AI systems not only to detect pneumonia but also to learn its characteristics, its progression, and how it affects surrounding areas of the body.

Data annotation is the right option here because it marks the affected area and its density patterns. The model can then learn to gauge the severity of the condition, allowing for proactive intervention and treatment. Beyond healthcare, the difference between labeling and annotation matters when AI applications are being developed for other highly regulated industries such as finance, manufacturing, and energy and utilities.

Therefore, understanding these differences is important for decision-makers evaluating data preparation plans or vendor services for their AI and ML model development project. However, there are instances when these distinctions don’t matter. For instance, in small or homogeneous projects involving labeled spreadsheets for sentiment analysis, the difference between labeling and annotation isn’t important.

“AI will be an integral part of solving the world’s biggest problems, but it must be developed in a way that reflects human values.” – Satya Nadella, CEO, Microsoft

When Does This Difference Matter? And When Doesn’t It Matter?

The difference between data annotation and data labeling has a big impact on AI development results, but this difference isn’t always important in every situation. Let’s take a detailed look at both scenarios, when the difference should be considered important, and when the terms can be used interchangeably.

When the Difference Really Matters

The distinction between the two processes matters when a project involves complex AI/ML requirements, a long-term AI strategy, or compliance, auditability, and data quality standards. Take a detailed look at these:

- Complex AI Systems Development: For advanced AI systems such as self-driving cars, cutting-edge medical diagnosis tools, and multi-faceted recommendation engines, the difference becomes key. These systems require context and accuracy, which the annotation process aptly provides.

- Long-Term AI Strategy Implementation: Companies that plan ongoing, evolving AI projects immensely benefit from this difference. Data prepared with annotations stays useful through multiple model updates and new use cases. On the other hand, mere data labeling often needs to be replaced or reworked as needs evolve.

- Compliance, Auditability, and Data Quality Standards: In regulated industries such as healthcare and finance, the difference between annotation and labeling is critical because annotations include the contextual metadata required for traceability and compliance. For instance, annotated medical data supports accuracy, consistency, and relevance in AI training. This enables precise diagnostics, treatment planning, and regulatory compliance, thereby aligning outcomes with established healthcare quality benchmarks.

- Resource Allocation Decisions: Understanding the difference helps companies decide how to use their resources. Annotation involves more upfront investment in specialized tools, expert knowledge, and quality checks. Choosing the wrong approach, whether paying for full annotation when simple labels would do or settling for labels when the project needs annotation, often leads to wasted spend, costly rework, and project delays.

When the Difference Isn’t as Important

The distinction is less important when organizations are experimenting with early-stage proofs of concept or have simple classification requirements. A few other conditions apply as well:

- Early-Stage Proofs of Concept: Simple labels prove sufficient for prototyping and quick experimentation. These allow teams to validate ideas before committing to more in-depth tagging and investing more resources in the project.

- Simple Classification Tasks: For basic classification with well-defined categories, advanced annotation might not be worth the cost. In instances such as spam detection or basic data classification, data labeling is the right option as simple yes or no answers suffice.

- Transfer Learning Applications: Organizations using pre-trained models that require only minor tuning can often achieve the performance they need with labeled data alone.

- Budget-Constrained Scenarios: Companies with limited budgets can invest in data labeling services initially. However, the stakeholders should plan for future enrichment as the business expands and needs evolve.

| Context | Difference Matters? | Why? |
|---|---|---|
| Enterprise-grade, scalable AI development | Yes | Requires precise annotation strategies and tools |
| Tool and workflow design | Yes | Specific tasks determine platform and integration choices |
| Compliance and regulatory requirements | Yes | Annotation includes traceability and metadata |
| Small-scale or basic ML projects | No | Simple labeling tasks serve the purpose |

To sum up, the differences don’t matter in scenarios where project requirements are small or basic, whereas these really matter when developing complex AI systems. This takes us to the next part: developing scalable, enterprise-grade AI systems.

Data annotation or labeling is just one aspect of the ML development lifecycle. Constant streams of high-quality, accurate data are fed into the algorithms to help them understand the environment. Therefore, organizations must have appropriate resources, including scalable infrastructure to accommodate growing volumes of data and access to domain-specific expertise to prepare accurate training datasets. In short, businesses must evaluate their data preparedness, as it directly impacts the reliability and effectiveness of the models. So, let’s look at the important factors to consider when developing enterprise-grade AI systems.

Key Factors to Consider for Scalable, Enterprise-Grade AI Systems

When developing high-performing, enterprise-grade AI systems, ensuring data quality and consistency is important as input directly impacts the outcome. Moreover, there are various ethical and bias considerations that organizations must take care of when building AI systems. In addition to data quality and ethical considerations, there are various other factors that stakeholders must thoroughly evaluate. Let’s get started:

1. Ensuring Data Quality and Consistency

Comprehensive Quality Metrics: Enterprise AI systems need metrics that go beyond accuracy. These should also assess consistency, completeness, edge-case handling, and representation across different data types.

Standardized Guidelines and Protocols: Leading companies document annotation guidelines with clear definitions, edge-case handling steps, and decision trees. These documents guide annotators and help align the model’s outcomes with business objectives.

Inter-Annotator Agreement Processes: Annotators might have different perspectives regarding certain ambiguous situations. This is where inter-annotator agreement plays a key role in measuring and improving consistency. It includes multi-level reviews, consensus methods, and regular team assessment sessions.
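One common way to quantify agreement between two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The article does not prescribe a specific metric, so treat the following as a minimal sketch of one option; the label values are made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item list")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement, from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # Both annotators used one identical label throughout.
    return (observed - expected) / (1 - expected)


# Illustrative labels only.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 2))
```

Here the annotators agree on 6 of 8 items (0.75), chance agreement is 0.5, and kappa comes out at 0.5. Teams typically set their own threshold below which guidelines are revisited or items go to consensus review.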

Quality Assurance Automation: Organizations can use automation to identify statistical outliers, recognize error-prone patterns, and compare outputs with gold-standard datasets. This helps maintain accuracy by catching errors that human reviewers miss.
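A gold-standard comparison can be as simple as scoring each annotator on items whose correct answer is already known. The sketch below assumes a hypothetical data layout (dictionaries keyed by item ID) and an arbitrary 0.9 accuracy threshold; both are illustrative choices, not recommendations from the article.

```python
def audit_against_gold(submitted, gold, min_accuracy=0.9):
    """Score each annotator on gold items and flag those below the threshold."""
    report = {}
    for annotator, answers in submitted.items():
        # Only items that appear in the gold set can be scored.
        scored = [(item, label) for item, label in answers.items() if item in gold]
        if not scored:
            continue
        correct = sum(gold[item] == label for item, label in scored)
        accuracy = correct / len(scored)
        report[annotator] = {"accuracy": accuracy, "flagged": accuracy < min_accuracy}
    return report


# Illustrative data only.
gold = {"img1": "cat", "img2": "dog", "img3": "cat", "img4": "dog"}
submitted = {
    "annotator_a": {"img1": "cat", "img2": "dog", "img3": "cat", "img4": "dog"},
    "annotator_b": {"img1": "cat", "img2": "cat", "img3": "dog", "img4": "dog", "img9": "cat"},
}
report = audit_against_gold(submitted, gold)
print(report)
```

Flagged annotators would then be routed to retraining or closer review, depending on the team's process.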

2. Scalability and Operational Efficiency

Workflow Optimization: Data annotation and labeling require balancing quality and quantity. Compromising either one negatively affects the model’s outcomes. Implementing quality assurance processes and feedback loops helps increase throughput while maintaining precision.

Technology Infrastructure: The right technology structure is important for developing a scalable, enterprise-grade AI solution. Thus, businesses should use annotation platforms that support high workloads, version control, and collaboration.

Hybrid Human-Machine Approaches: A hybrid model combines the scalability of automated labeling tools with the accuracy of a human-in-the-loop approach. Here, automated tools pre-label data and humans refine and validate the results, balancing scale and accuracy.
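One way such a hybrid workflow is often organized is to route pre-labels by model confidence, so that human effort concentrates on uncertain items. The following is a minimal sketch under that assumption; the record fields, the `route_prelabels` name, and the 0.85 threshold are hypothetical.

```python
def route_prelabels(predictions, threshold=0.85):
    """Split machine pre-labels into auto-accepted items and items for human review."""
    auto_accepted, needs_review = [], []
    for pred in predictions:
        if pred["confidence"] >= threshold:
            auto_accepted.append(pred)
        else:
            needs_review.append(pred)
    return auto_accepted, needs_review


# Illustrative pre-labels, as an automated tool might emit them.
predictions = [
    {"item_id": "a", "label": "car", "confidence": 0.97},
    {"item_id": "b", "label": "truck", "confidence": 0.62},
    {"item_id": "c", "label": "car", "confidence": 0.85},
]
auto_accepted, needs_review = route_prelabels(predictions)
print([p["item_id"] for p in auto_accepted], [p["item_id"] for p in needs_review])
```

In practice, teams may still spot-check a sample of the auto-accepted items, since a confident model can be confidently wrong.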

3. Domain-Specific Expertise

Subject Matter Expertise: In industries like healthcare and finance, subject matter expertise plays a key role. Involving industry experts in guideline design, edge-case review, and specialized annotation tasks helps ensure the accuracy of such critical tasks. Additionally, pair domain experts with annotation specialists so that both context and consistency are addressed.

Tools Made for Specific Fields: Some tools are built for particular domains or data types, with features such as domain-specific vocabularies and validation logic. For instance, tools like Doccano and NLP Annotation Lab are designed specifically for text annotation, whereas LabelMe is particularly good for segmentation tasks. Using these tools enables businesses to prepare high-quality training datasets at scale.

Continuous Improvement: Continuous improvement helps AI/ML models generalize better and become more accurate over time. Enable collaboration between annotators and model builders to align data preparation with model needs.

4. Ethical and Bias Considerations

Representation and Fairness Protocols: To ensure AI/ML models provide unbiased results, include as wide a variety of data as possible. Ensure geographic, demographic, and contextual diversity in datasets. Furthermore, audits, blind testing, and bias-specific analysis tools help detect and reduce annotation bias.
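A simple starting point for a representation audit is to measure how each group is distributed in the dataset and flag groups that fall below a chosen share. The sketch below assumes a hypothetical `region` field and a 20% minimum share; both are placeholders that a real audit would replace with its own attributes and targets.

```python
from collections import Counter


def underrepresented_groups(records, field, min_share=0.2):
    """Return groups whose share of the dataset falls below min_share."""
    counts = Counter(r[field] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items() if n / total < min_share}


# Illustrative records only.
records = (
    [{"region": "EU"}] * 6
    + [{"region": "NA"}] * 3
    + [{"region": "APAC"}] * 1
)
gaps = underrepresented_groups(records, "region")
print(gaps)
```

This check only sees groups that appear at least once, so groups missing from the data entirely need to be compared against an explicit list of expected groups.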

Ethical Guidelines Integration: Instead of treating ethical considerations as an afterthought, prioritize them. Incorporating ethics guidelines directly into annotation workflows helps prevent costly error corrections and lawsuits.

ML-Readiness: The True Measure of Data Preparation Success

Moving beyond terminology to practical value, businesses can use labeling and annotation interchangeably when preparing data. Ultimately, it all comes down to how ready an organization is to prepare data and develop ML algorithms.

- Performance-Driven Metrics: While understanding the distinction between annotation and labeling provides conceptual clarity, forward-thinking organizations focus more on concrete ML-readiness metrics. These include prediction accuracy, edge-case handling capacity, generalization to new data, and robustness against adversarial examples.

- Fit-for-Purpose Assessment: The ultimate measure of data preparation quality is whether it enables AI models to accomplish their intended business objectives. This assessment should examine both technical performance and alignment with organizational objectives rather than focusing on methodology terminology.

- Continuous Validation Processes: Leading organizations implement continuous validation mechanisms that evaluate data preparation effectiveness against evolving model requirements and business objectives, allowing real-time adjustment of strategies regardless of whether they’re formally classified as annotation or labeling.

- ROI-Centered Approach: Rather than debating terminology, successful organizations analyze the return on investment from different data preparation approaches, measuring factors like time-to-value, model performance improvement per resource unit invested, and performance sustainability over time.
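The "model performance improvement per resource unit invested" measure from the list above can be sketched as a simple ratio. All numbers below are invented purely to show the arithmetic; they are not benchmarks or results, and the function name is hypothetical.

```python
def improvement_per_unit(baseline_metric, new_metric, cost_units):
    """Metric gain divided by the resources spent to achieve it."""
    if cost_units <= 0:
        raise ValueError("cost_units must be positive")
    return (new_metric - baseline_metric) / cost_units


# Made-up figures: accuracy before and after, and person-hours spent.
labeling_gain = improvement_per_unit(0.80, 0.86, cost_units=100)
annotation_gain = improvement_per_unit(0.80, 0.92, cost_units=400)
print(f"labeling:   {labeling_gain:.4f} accuracy points per hour")
print(f"annotation: {annotation_gain:.4f} accuracy points per hour")
```

In this invented case labeling yields more gain per hour while annotation yields more gain overall, which is why the ratio should be read alongside the other factors listed, such as time-to-value and how well performance holds up over time.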

Closing Thoughts

While the distinction between data annotation and data labeling provides important conceptual clarity, what truly matters for enterprise AI success is the quality, scalability, and ML-readiness of the training data, regardless of terminology. Organizations that focus on data quality frameworks, operational scalability, and fit-for-purpose data preparation consistently outperform those caught in definitional debates.

For enterprises serious about building sustainable AI advantage, selecting the right data preparation partner is one of the most important decisions in their AI journey. When evaluating potential partners, prioritize those who demonstrate:

- Proven quality management systems with transparent metrics

- Scalable infrastructure capable of growing with the company’s AI ambitions

- Flexible approaches balancing cost with quality

- Strong ethical frameworks addressing bias and representation

After all, once enterprise AI implementations succeed, the data annotation vs. data labeling debate matters little. What matters is creating training data that enables the models to deliver genuine business value at scale.