From banking and manufacturing to healthcare and law enforcement, artificial intelligence is transforming decision-making across industries. However, this transformation is not without risks. Among the most pressing concerns is AI bias, an issue where automated decisions reinforce social, racial, gender-based, and economic disparities due to flawed or incomplete data. Data appending is one practical way to reduce that bias and safeguard AI fairness.

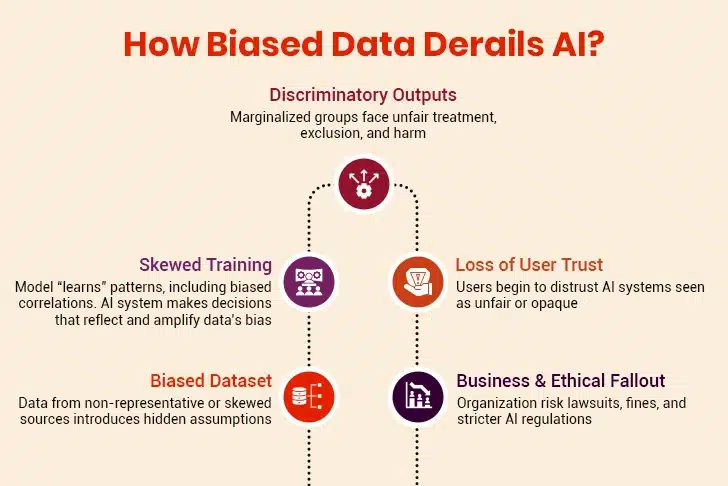

AI bias occurs when training data fails to accurately represent the diverse population it aims to serve or when algorithms exacerbate historical inequalities embedded within the data. The Workday litigation illustrates these challenges: the plaintiffs allege that the company’s AI-powered applicant screening software discriminates against older, Black, and disabled job applicants.

In another high-profile case, Amazon scrapped an AI recruiting tool that showed bias against women. The system, trained on resumes submitted over 10 years, downgraded resumes that included the word “women’s,” such as “women’s chess club captain.” Such AI systems face regulatory scrutiny, with penalties ranging from $375 to $1,500 for each violation under emerging bias audit requirements.

Table of Contents

Is Demographic Accuracy Powered by Data Appending the Hidden Key?

What Is Reliable Data Appending All About?

- Best Practices for Accurate and Ethical Data Appending

- Vendor Due Diligence: Key Questions the C-Suite Must Ask

How to Safeguard AI Fairness with Data Governance and Oversight?

According to recent research, 68% of AI implementation failures occur due to data quality issues, and 43% of deployed systems exhibit significant algorithmic bias. This is a serious concern because biased algorithms widen existing social divides.

At the same time, mitigating bias isn’t the sole responsibility of technical teams. As AI systems become embedded in core business functions, accountability falls squarely on the shoulders of the C-suite. CXOs are under immense pressure to ensure the fairness, transparency, and regulatory compliance of AI initiatives. In other words, ethical AI is a strategic imperative.

This is where data appending comes in, particularly demographic data appending, which enriches datasets by filling in missing or outdated attributes. It improves business insights and fortifies AI models against the biases introduced by incomplete or incorrect data. When executed ethically and with precision, data appending acts as a foundational control mechanism to safeguard AI fairness at scale.

Is Demographic Accuracy Powered by Data Appending the Hidden Key?

The quality of data fed into ML algorithms is often underestimated in corporate AI strategies. Most AI-related conversations at the executive level focus on model performance, computational power, and cost efficiency. Yet the most critical input, data, is often left unchecked. This is where demographic data appending serves as a hidden lever to drive equity in AI outcomes.

Data appending enhances an existing database by adding accurate, verified information from external sources. For customer-facing systems, this could mean updating demographic fields, such as age, income level, education, household size, and ethnicity. For enterprise systems, it might include firmographics such as industry classification, company size, and purchase history.

When demographic data is appended correctly, it ensures that AI-based decision-making systems operate on updated and representative information. Unfortunately, that is often not the case, and here is why it matters:

1. Outdated Demographic Data Leads to Skewed Models

Most CRM systems hold outdated data. People move, change jobs, switch financial brackets, or experience major life events, and databases fail to keep pace. Feeding obsolete data into an AI model results in unreliable outcomes. For instance, a credit scoring model trained on outdated income data could unjustly deny loans or improperly underwrite risk.

Consider another case where a model assumes that a certain ZIP code equates to lower income due to ten-year-old census data. Given this understanding, the model might systematically deprioritize residents from that area, even if recent demographic shifts have occurred. Such systemic biases emerge not because of algorithm design but due to outdated foundational data.
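One way to catch this kind of staleness before training is to flag records whose demographic fields have not been verified recently. The sketch below is illustrative only: the record layout, the `last_verified` field, and the 365-day threshold are assumptions, not part of any specific CRM or append service.

```python
from datetime import date

# Assumed threshold; pick a value that matches your own refresh policy.
MAX_AGE_DAYS = 365


def is_stale(last_verified, today, max_age_days=MAX_AGE_DAYS):
    """Return True when a record was last verified more than max_age_days ago."""
    return (today - last_verified).days > max_age_days


def split_by_freshness(records, today, max_age_days=MAX_AGE_DAYS):
    """Split records into (fresh, stale) lists based on their last_verified date."""
    fresh, stale = [], []
    for rec in records:
        if is_stale(rec["last_verified"], today, max_age_days):
            stale.append(rec)
        else:
            fresh.append(rec)
    return fresh, stale


# Hypothetical records: one relies on ten-year-old data, one is recent.
records = [
    {"id": 1, "zip": "00001", "income_band": "low", "last_verified": date(2015, 4, 1)},
    {"id": 2, "zip": "00002", "income_band": "mid", "last_verified": date(2025, 1, 15)},
]

fresh, stale = split_by_freshness(records, today=date(2025, 6, 1))
print([r["id"] for r in stale])  # [1]
```

Stale records can then be routed to an append refresh or excluded from training, rather than silently feeding old assumptions into the model.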

2. Unvalidated Third-Party Data Introduces Structural Bias

Businesses often acquire third-party datasets to enhance existing records, but not all sources are created equal. Inaccurate or unverified external data can introduce new forms of bias or reinforce existing ones. If the external data source fails to capture minority demographics accurately, it skews predictive models in ways that disproportionately harm underrepresented groups.

For instance, if ethnicity or gender is represented using proxy variables, such as names or browsing behavior, it can embed stereotype-driven assumptions into AI logic. Even worse, these assumptions become structural flaws when scaled across thousands of users.

3. Data Gaps Disproportionately Affect Marginalized Groups

When datasets lack representation for marginalized communities, algorithms learn to ignore them. Such gaps are often called “data deserts,” and they are not random. They usually correlate with socioeconomically disadvantaged populations, creating a vicious cycle where underserved groups remain invisible to systems that shape financial, healthcare, and employment outcomes.

Incorporating high-quality demographic data append services helps ensure that minority groups are fairly represented, thereby reducing the likelihood of algorithmic bias. When diverse data becomes part of the training set, AI outcomes become more equitable, inclusive, and accurate.
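Representation gaps of this kind can be measured by comparing each group’s share of the dataset with its share of a reference population. The following is a minimal sketch: the group labels, the reference shares, and the 5-point tolerance are made-up values for illustration, and a real check would use an authoritative benchmark chosen for the use case.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares):
    """Return (dataset share - reference share) for each reference group."""
    counts = Counter(
        rec[attribute] for rec in records if rec.get(attribute) is not None
    )
    total = sum(counts.values())
    gaps = {}
    for group, ref_share in reference_shares.items():
        share = counts.get(group, 0) / total if total else 0.0
        gaps[group] = round(share - ref_share, 3)
    return gaps


def underrepresented(gaps, tolerance=0.05):
    """List groups whose dataset share falls short of the reference by more than tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical dataset: 7 records in group A, 2 in B, 1 in C.
records = [{"group": "A"}] * 7 + [{"group": "B"}] * 2 + [{"group": "C"}]
# Hypothetical reference population shares.
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(records, "group", reference)
print(gaps)                    # {'A': 0.2, 'B': -0.1, 'C': -0.1}
print(underrepresented(gaps))  # ['B', 'C']
```

Groups flagged here are candidates for targeted appending or additional data collection before the dataset is used for training.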

“AI is good at describing the world as it is today with all of its biases, but it does not know how the world should be.”

— Joanne Chen, General Partner, Foundation Capital

What Is Reliable Data Appending All About?

Much more than data acquisition, reliable data appending is a strategic process guided by transparency, ethics, and accuracy. The C-suite must view this not as a back-end task but as a critical pillar of enterprise AI integrity. Following a few tried-and-tested best practices can help guide this initiative.

Best Practices for Accurate and Ethical Data Appending

- 1. Use Verified, Consent-Based Sources – First and foremost, organizations must ensure that appended data is derived from verified, opt-in, consent-based sources. Additionally, sources should comply with privacy regulations, such as the GDPR, CCPA, and HIPAA. Ethical sourcing is essential to protect a brand’s reputation and ensure user trust in AI-driven decisions.

- 2. Prioritize Data Freshness – Demographic data decays quickly, so frequent updates are important. Append services should ensure that demographic updates are no older than a defined threshold, ideally within the last six to 12 months, to avoid time-decay bias.

- 3. Apply Contextual Relevance Filters – Not all data points are relevant to every AI use case. Ensure that appending aligns with specific business contexts, such as predictive analytics, segmentation, personalization, and more. This helps avoid noise introduction.

- 4. Perform Data Reconciliation – Even though data appending enhances and enriches data, appended data shouldn’t be blindly merged with the master database without verification. It should be reconciled with existing datasets to identify conflicts, duplicates, and outliers. Importantly, stakeholders can use data lineage tracking to maintain transparency regarding the origin of each attribute.
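The reconciliation step in particular lends itself to a short sketch. The example below fills only missing values, records which source supplied each filled field, and reports conflicts instead of overwriting. The record shapes, the `_lineage` key, and the `vendor_x` source name are hypothetical and chosen purely for illustration.

```python
def reconcile(master, appended, source, fields):
    """Merge appended values into a copy of the master records.

    Only missing values are filled. Each filled field is tagged with its
    source, and disagreements are reported rather than overwritten.
    """
    merged = {rid: dict(rec) for rid, rec in master.items()}
    conflicts = []   # (record id, field, existing value, appended value)
    unmatched = []   # appended ids with no master record
    for rid, new in appended.items():
        if rid not in merged:
            unmatched.append(rid)
            continue
        rec = merged[rid]
        lineage = dict(rec.get("_lineage", {}))
        for field in fields:
            value = new.get(field)
            if value is None:
                continue
            current = rec.get(field)
            if current is None:
                rec[field] = value
                lineage[field] = source
            elif current != value:
                conflicts.append((rid, field, current, value))
        rec["_lineage"] = lineage
    return merged, conflicts, unmatched


# Hypothetical master and vendor data.
master = {
    "c1": {"age_band": None, "household_size": 3},
    "c2": {"age_band": "35-44", "household_size": None},
}
appended = {
    "c1": {"age_band": "25-34", "household_size": 4},
    "c3": {"age_band": "55-64"},
}

merged, conflicts, unmatched = reconcile(
    master, appended, source="vendor_x", fields=["age_band", "household_size"]
)
print(merged["c1"])  # {'age_band': '25-34', 'household_size': 3, '_lineage': {'age_band': 'vendor_x'}}
print(conflicts)     # [('c1', 'household_size', 3, 4)]
print(unmatched)     # ['c3']
```

Leaving conflicts for human review is a deliberate choice in this sketch: automatically preferring the vendor value would make it harder to trace how a disputed attribute entered the master database.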

These are some of the best practices for accurate and ethical data appending. In addition, stakeholders must conduct vendor due diligence to find a reliable data appending partner. After all, this partner helps them enhance the quality of their datasets, which lays the foundation for robust AI models.

“Organizations must prioritize data integrity and vendor transparency to mitigate AI bias effectively.”

– Dr. Cathy O’Neil, Author, Weapons of Math Destruction

Vendor Due Diligence: Key Questions the C-Suite Must Ask

Executives should assess data appending vendors through a risk lens rather than merely on cost or speed. The goal is to find a reliable partner that meets organizational requirements and aligns outcomes with business-specific goals. Here’s what to ask:

- What is the source of appended data?

- How does the vendor validate demographic accuracy?

- What is the vendor’s update frequency?

- Are the vendor’s methods compliant with current privacy regulations?

- How does the vendor manage demographic data gaps or underrepresented populations?

These questions form the basis for vendor selection frameworks that align with enterprise values, legal standards, and ethical AI principles. With this comes the next step: integrating a governance framework and data appending to safeguard AI fairness.

How to Safeguard AI Fairness with Data Governance and Oversight?

Data appending plays a key role in driving AI fairness. However, without formal governance structures, even the best data append strategies fail to prevent bias. Governance ensures that technical practices translate into ethical outcomes.

Risk, compliance, and governance concern most organizations that deploy AI models. As a result, 57% of organizations reportedly opt for a centralized risk and governance model, while 46% opt for AI governance.

AI fairness, then, is not the sole responsibility of individual data scientists; it must be institutionalized. This starts with connecting the dots between data management and ethical oversight. Here’s how to do so:

I. Embedding Data Appending Into AI Governance Frameworks

The governance framework must include guidelines for when, how, and why demographic data appending operations are performed. This includes pre-processing controls (before AI training), in-processing checks (during modeling), and post-processing audits (after deployment).

For example, every AI project should include a “data readiness” checklist that ensures data completeness, demographic balance, and appending accuracy before model training begins.
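A “data readiness” checklist of this kind could be expressed as a small gate that runs before training. This is a rough sketch under stated assumptions: the three metrics, the field names, the verified sample, and the thresholds are all hypothetical, and “balance” is approximated by the share of the smallest observed group, which cannot detect a group that is missing entirely.

```python
def completeness(records, fields):
    """Share of required field values that are populated."""
    total = len(records) * len(fields)
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / total if total else 0.0


def smallest_group_share(records, attribute):
    """Share of the smallest observed group (absent groups are not detected)."""
    counts = {}
    for r in records:
        value = r.get(attribute)
        if value not in (None, ""):
            counts[value] = counts.get(value, 0) + 1
    total = sum(counts.values())
    return min(counts.values()) / total if total else 0.0


def append_accuracy(records, verified, field):
    """Share of a verified sample where the appended value matches the truth."""
    by_id = {r["id"]: r for r in records}
    checked = [rid for rid in verified if rid in by_id]
    if not checked:
        return 0.0
    hits = sum(1 for rid in checked if by_id[rid].get(field) == verified[rid])
    return hits / len(checked)


def data_readiness(records, fields, attribute, verified, field, thresholds):
    """Return ({check: (score, passed)}, overall_ready)."""
    scores = {
        "completeness": completeness(records, fields),
        "balance": smallest_group_share(records, attribute),
        "append_accuracy": append_accuracy(records, verified, field),
    }
    checks = {
        name: (round(score, 3), score >= thresholds[name])
        for name, score in scores.items()
    }
    return checks, all(passed for _, passed in checks.values())


# Hypothetical records, verified sample, and thresholds.
records = [
    {"id": 1, "age_band": "25-34", "region": "N"},
    {"id": 2, "age_band": "45-54", "region": "N"},
    {"id": 3, "age_band": None, "region": "N"},
    {"id": 4, "age_band": "35-44", "region": "S"},
]
verified = {1: "25-34", 2: "35-44"}
thresholds = {"completeness": 0.8, "balance": 0.2, "append_accuracy": 0.9}

checks, ready = data_readiness(
    records, ["age_band", "region"], "region", verified, "age_band", thresholds
)
print(checks)
print(ready)  # False: append accuracy on the verified sample is below threshold
```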

II. Establishing a Cross-Functional AI Ethics Board

An AI ethics board is a crucial body involving stakeholders from various departments, including compliance, legal, IT, data science, and HR. Its job is to evaluate data sources, audit data append service providers, assess risk exposure, and recommend corrective actions.

The board should meet quarterly to review AI project pipelines and oversee the use of appended data across the organization. Importantly, it acts as a buffer between rapid innovation and long-term responsibility.

III. Instituting Appended Data Audits and Bias Testing

Routine audits of appended datasets are essential. They help maintain data quality, ensure compliance, and improve decision-making by surfacing errors, inconsistencies, and redundancies. These reviews should assess:

- Representation balance across gender, race, geography, and income levels

- Accuracy against set ground truth benchmarks

- Bias propagation through modeling outcomes

When coupled with automated bias testing tools, such audits create a closed feedback loop. This iterative process can help continuously refine data inputs and AI outputs.
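As one example of an automated bias test, the sketch below computes per-group selection rates from model outcomes and compares each group with the best-performing group. The 0.8 cutoff follows the widely used “four-fifths” rule of thumb. The group labels and outcomes are invented, and a flag from this check is a prompt for investigation, not a legal finding.

```python
def selection_rates(outcomes):
    """Compute the selection rate per group from (group, selected) pairs."""
    totals, selected = {}, {}
    for group, was_selected in outcomes:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if was_selected else 0)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group rate."""
    best = max(rates.values())
    return {group: (rate / best if best else 0.0) for group, rate in rates.items()}


def flagged_groups(ratios, threshold=0.8):
    """Groups whose impact ratio falls below the threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical model outcomes: 6 of 10 selected in group A, 3 of 10 in group B.
outcomes = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(outcomes)
ratios = impact_ratios(rates)
print(rates)                   # {'A': 0.6, 'B': 0.3}
print(flagged_groups(ratios))  # ['B']
```

Running a check like this after each data append and each model retrain is one way to close the feedback loop described above.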

IV. Embedding Regulatory Compliance

Fair AI also means compliant AI. Regulations and proposals such as the EU AI Act, the proposed U.S. Algorithmic Accountability Act, and India’s DPDP Act push organizations to adopt formal accountability measures. Demographic data append activities should comply with local and global rules regarding user consent, data sovereignty, and non-discrimination.

Failure to align with these regulations exposes companies to legal liabilities, reputational damage, and customer churn. A robust compliance framework that includes audit trails of data appending activities helps mitigate these risks while reinforcing ethical standards.
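An audit trail for append activity can be as simple as an append-only log in which each entry carries a hash of the previous one, so that later edits become detectable. The following is a minimal sketch, not a compliance-grade design: the event fields, the dataset name, and the vendor name are hypothetical, and a production system would also need secure storage and access controls.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def _digest(event, prev_hash):
    """Hash an event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_entry(log, event):
    """Append a JSON-serializable event to the log, chained to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if _digest(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical append events.
log = []
add_entry(log, {
    "dataset": "crm_customers",
    "vendor": "vendor_x",
    "fields": ["age_band"],
    "consent_basis": "opt-in",
    "appended_on": "2025-06-01",
})
add_entry(log, {
    "dataset": "crm_customers",
    "vendor": "vendor_x",
    "fields": ["household_size"],
    "consent_basis": "opt-in",
    "appended_on": "2025-06-15",
})

print(verify(log))  # True
```

If any stored event is edited after the fact, `verify` returns False, which gives auditors a quick integrity check before they rely on the log.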

Wrapping Up

In the race to scale AI capabilities, organizations must understand that the fairness of outputs depends squarely on the integrity of inputs. AI bias often begins not in machine learning algorithms but in the flawed data they consume. Businesses can embed fairness into the foundation of their AI strategies by prioritizing accurate and ethical data appending. To that end, investing in professional demographic data append services is a practical starting point.

Asking the right questions, institutionalizing governance, and holding data-appending partners accountable are the essential steps. After all, bias-free AI is not just a technological achievement but a moral and strategic one as well. And as we enter an era where decisions made by machines hold real consequences for people, the call for responsible data practices has never been louder.