In this blog, we will clarify the roles of data engineering and data science. We will see why their partnership is so important. We will also discuss best practices, real-world examples, challenges, and future trends. By the end, you will know how to build a strong foundation for advanced data projects. You will also see how this approach sparks innovation.

Table of Contents

Best Practices for Integration

Common Challenges and How to Overcome Them

Future Trends in Data Engineering and Data Science

Understanding the Roles

Data Engineering
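
As a rough illustration of the ETL work this section describes, here is a minimal sketch using only the Python standard library. The record layout, the `payments` table, and the function names are assumptions made for the example, not part of any particular product.

```python
import sqlite3

# Hypothetical raw records, standing in for rows pulled from an API or sensor feed.
RAW_RECORDS = [
    {"id": "a1", "source": "api", "amount": " 19.90 "},
    {"id": "a2", "source": "sensor", "amount": "7"},
    {"id": "a1", "source": "api", "amount": " 19.90 "},       # duplicate ID
    {"id": "a3", "source": "api", "amount": "not-a-number"},  # malformed amount
]


def extract():
    """Extract: return raw records (a real pipeline would call an API or read files)."""
    return list(RAW_RECORDS)


def transform(records):
    """Transform: parse amounts, drop malformed rows and duplicate IDs."""
    seen = set()
    clean = []
    for rec in records:
        try:
            amount = float(rec["amount"].strip())
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        if rec["id"] in seen:
            continue  # skip duplicates
        seen.add(rec["id"])
        clean.append((rec["id"], rec["source"], amount))
    return clean


def load(rows, conn):
    """Load: write the clean rows into a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(id TEXT PRIMARY KEY, source TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run extract, transform, load in order and return what was stored."""
    load(transform(extract()), conn)
    return conn.execute("SELECT id, amount FROM payments ORDER BY id").fetchall()
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` stores only the two valid, unique rows. In production the same three stages would typically run on tools such as Spark or a cloud warehouse, but the shape of the work is similar.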

Data engineering is about building the pipelines that move raw data from varied sources into usable systems. It also involves storing, transforming, and ensuring data quality. Experts in data engineering consultancy often use Python, SQL, or Spark. They might work with AWS, Azure, or Google Cloud to handle large data sets. Their job is to keep data reliable and in the right format. Data engineering services focus on tasks like ETL (Extract, Transform, Load) and real-time data processing. They gather records from APIs or sensors, store them in warehouses or lakes, and monitor the data flow. They also watch for errors or duplicate information. If the data is disorganized, it can cause big headaches for the rest of the team.

Data Science

Data science revolves around extracting insights from data. Data scientists apply statistics, machine learning, and AI methods to spot trends. They might build models to predict sales or find out which customers are likely to churn. They rely on languages like Python or R, as well as libraries like pandas, scikit-learn, or TensorFlow. The tasks include analyzing data, crafting predictive models, and creating AI applications. A data scientist may start with historical information, such as past transactions. Then they run algorithms to forecast what might happen next. For instance, they can estimate future demand or detect anomalies in real time. Strong data engineering in the background lets data scientists trust that the data is correct.

Why Collaboration Matters

1. Data Pipelines and Preparation

A data scientist’s job is much smoother if the raw information arrives in a clean format. Otherwise, they might waste time fixing issues. That is where data engineering consultancy helps. They design the workflows that gather, transform, and load data. By the time it reaches the data scientist, it is standardized. This integration speeds up analysis.

2. Real-Time Data Processing

Many industries need instant insights. A streaming pipeline can alert an ecommerce app about a customer’s browsing in seconds. The data scientist’s machine learning model can then suggest products on the spot. This only works if data engineering services build a robust, low-latency pipeline. Without real-time infrastructure, the model can miss the moment when a recommendation matters most.

3. Feature Engineering
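
To make feature engineering concrete, the sketch below merges two small data sets, cleans one column, and derives new variables per customer. The field names (`customer_id`, `country`, `amount`) and the chosen features are assumptions for illustration only.

```python
from collections import defaultdict


def build_features(customers, orders):
    """Join orders onto customers and derive per-customer features."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for order in orders:
        totals[order["customer_id"]] += order["amount"]
        counts[order["customer_id"]] += 1

    features = []
    for cust in customers:
        cid = cust["customer_id"]
        n = counts[cid]
        features.append({
            "customer_id": cid,
            "country": cust["country"].strip().upper(),        # cleaned column
            "order_count": n,                                  # merged from orders
            "total_spend": totals[cid],                        # merged from orders
            "avg_order_value": totals[cid] / n if n else 0.0,  # new variable
        })
    return features
```

Because the output keys act as a shared contract, renaming one of them (say `total_spend`) is exactly the kind of change the engineer and the scientist need to agree on first.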

Machine learning relies on strong features. Data engineers help produce these features by merging sets, cleaning columns, or creating new variables. Then data scientists use them to train accurate models. Close teamwork here avoids confusion. If the engineer changes a column name, the scientist should know. If the scientist needs more detail, the engineer can revise the pipeline. This back-and-forth leads to better results.

Best Practices for Integration

I. Data Quality and Consistency
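
The two rules this section uses as examples, rejecting records without a key ID and storing dates in a single format, can be sketched as follows. The accepted input formats and the field names `customer_id` and `order_date` are assumptions; in particular, reading `05/03/2024` as day/month/year is a choice a real team would need to confirm with the data source.

```python
from datetime import datetime

# Assumed input formats; a real feed would define its own list.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def normalize_date(value):
    """Return the date as an ISO 8601 string (YYYY-MM-DD), or None if unparseable."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def validate(records, key="customer_id"):
    """Split records into (accepted, rejected) according to the two rules."""
    accepted, rejected = [], []
    for rec in records:
        iso_date = normalize_date(rec.get("order_date") or "")
        if not rec.get(key) or iso_date is None:
            rejected.append(rec)  # missing key ID or unreadable date
            continue
        accepted.append({**rec, "order_date": iso_date})
    return accepted, rejected
```

Keeping the rejected records, rather than silently dropping them, gives engineers something to inspect when a source starts sending bad data.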

Good analytics demand top-notch data quality. If data is missing fields or mislabeled, predictions can fail. The best approach is to define rules and checks. For example, you can reject any record missing a key ID. You can also store dates in a single format. This consistency helps data scientists avoid guesswork.

II. Modular and Scalable Architectures

Business needs evolve. A small pilot project can grow into a massive global rollout. If your system is modular, you can swap pieces without rebuilding everything. You might start with a local database, then shift to a data lake later. On the data science side, you might begin with basic regression models, then jump to deep learning. Flexible design keeps you future-ready.

III. Collaborative Tools and Workflows

Modern teams often adopt tools like Jupyter Notebooks or Apache Airflow. They also might store code in Git repositories. This fosters transparency. The data engineering side can show how they set up pipelines, while data scientists can commit their model scripts. Everyone can see changes as they are committed. They can also track dependencies or revert if problems arise.

Real-World Use Cases

1. Predictive Maintenance in Manufacturing
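
One simple way to look for warning signs in sensor data is to compare each reading with a rolling window of recent values. The sketch below is an assumption-laden illustration, not a production method: the window size, the threshold of three standard deviations, and the function name are all chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indexes of readings that deviate sharply from the preceding window."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Flag the reading if it sits more than `threshold` deviations from the mean.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        recent.append(value)
    return flagged
```

For temperature readings such as `[70.0, 70.5, 69.8, 70.2, 70.1, 70.3, 95.0, 70.0]`, the spike at index 6 is the only one flagged. Real deployments usually rely on trained models rather than a fixed threshold, but the data flow is the same: engineers deliver the readings, scientists decide what counts as abnormal.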

Manufacturers often collect data from sensors on equipment. This data can include temperature, pressure, and usage patterns. Data engineering services build pipelines that process these readings and store them in a centralized location. Data scientists then study the signals to find trends that hint at machine failures. By catching warning signs early, factories can do repairs before breakdowns happen. This lowers downtime, saves money, and improves safety. In many cases, the collaboration between engineering and science also leads to real-time dashboards that alert line managers about potential issues.

2. Customer Personalization in Ecommerce

Online retailers record clicks, purchases, and browsing habits. Data engineering consultancy teams funnel these records into a data warehouse, ensuring consistency. Data scientists use the clean info to build recommendation engines. These models can suggest items based on user interests and past behavior. The result is a more personalized shopping experience. Customers see products they are likely to want, which lifts sales and boosts satisfaction. With a real-time pipeline, suggestions can appear instantly, reflecting the shopper’s latest clicks.

3. Fraud Detection in Finance
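
The example this section gives, a card used in two distant locations within minutes, can be expressed as a simple "impossible travel" rule. This is a hand-written rule rather than the machine learning approach a bank would likely use, and the speed threshold, tolerance, and transaction fields (`lat`, `lon`, `time`) are assumptions.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumed threshold, roughly airliner speed


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev_txn, next_txn):
    """Flag two transactions on one card if the implied travel speed is implausible."""
    distance = haversine_km(
        prev_txn["lat"], prev_txn["lon"], next_txn["lat"], next_txn["lon"]
    )
    hours = abs((next_txn["time"] - prev_txn["time"]).total_seconds()) / 3600
    if hours == 0:
        return distance > 1.0  # same instant, different places (1 km tolerance, assumed)
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH
```

A purchase in London followed ten minutes later by one in New York would be flagged, while two purchases a couple of kilometres apart would not. The rule only works if the unified logs carry consistent timestamps and coordinates, which is the engineering half of the job.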

Banks watch for suspicious transactions to curb fraud. Data engineers unify logs from ATMs, mobile apps, and card networks. Data scientists apply anomaly detection techniques, spotting unusual account activity. If a transaction looks risky, such as a credit card used in two distant locations within minutes, the system can block it or require an extra verification step. This collaboration makes financial operations more secure. Banks lose less to fraud, and customers feel safer. Engineers handle the data flow, while scientists craft the machine learning logic that sorts normal behavior from suspicious activity.

4. Expanding on Practical Insights

Beyond these examples, many other industries find value in bridging data engineering and data science. A healthcare group might merge patient records with wearable device data to improve diagnostics. A logistics firm could combine GPS tracking with route optimization to lower shipping times. The key remains the same: strong pipelines plus smart models.

Common Challenges and How to Overcome Them

Lack of Communication

If data engineers and data scientists barely talk, pipelines may not match project needs. Scientists might request a certain format, only to find it overlooked. Engineers might rename columns without telling the scientists. The solution is regular meetings, open chat channels, and a culture of shared feedback.

Data Silos

Sometimes each department keeps its own data in separate systems. Marketing data sits in one platform, while operations data lives elsewhere. Data scientists can waste hours merging these sets. A unified data lake or warehouse, with proper access controls, can fix this. Everyone draws from the same source, cutting back on chaos.

Different Goals

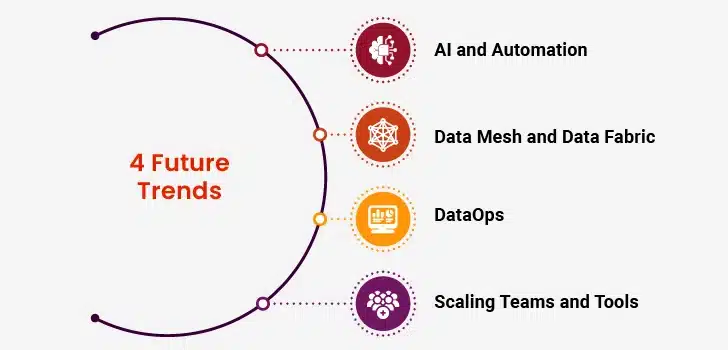

Engineers focus on performance, reliability, and scaling. Scientists often want flexible data access to run new algorithms or test unique hypotheses. The best practice is to define shared objectives. If you want to deploy a predictive model for customers, the pipeline must be stable enough to feed it live data. Both sides adapt to meet the same end goal.

Future Trends in Data Engineering and Data Science

AI and Automation

Automation is a hot topic. Tools can spot errors or auto-generate data transformations. On the science side, AutoML solutions suggest models with minimal tuning. As teams embrace these technologies, they can manage bigger workloads. Engineers focus on complex architecture, and scientists pour energy into advanced research.

Data Mesh and Data Fabric

These new architectures seek to reduce data bottlenecks. A data mesh approach has each domain team own its data. A data fabric weaves together different data sources under a single framework. Both aim to make data more accessible and consistent. They also encourage cross-team efforts, letting engineers and scientists collaborate without friction.

DataOps
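
A small example of the DataOps idea of catching a breaking pipeline change before production is a contract check that runs as an automated test. The contract below, including its column names and types, is hypothetical; the point is only that the model's expectations are written down and verified on every pipeline change.

```python
# Hypothetical contract: the columns and types the model expects from the pipeline.
MODEL_INPUT_CONTRACT = {"customer_id": str, "total_spend": float, "order_count": int}


def check_contract(rows, contract=MODEL_INPUT_CONTRACT):
    """Return a list of human-readable violations; an empty list means the rows pass."""
    problems = []
    for i, row in enumerate(rows):
        missing = contract.keys() - row.keys()
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
        for col, expected in contract.items():
            if col in row and not isinstance(row[col], expected):
                problems.append(
                    f"row {i}: {col} is {type(row[col]).__name__}, "
                    f"expected {expected.__name__}"
                )
    return problems
```

If an engineer renames `total_spend` to `spend_total`, the check reports the missing column for a sample row, and a continuous integration job can fail on any non-empty result.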

DataOps applies DevOps methods to data. It means versioning pipelines, running tests, and integrating continuously. If a new pipeline update threatens to break a model, the system flags it right away. Everyone can fix it before it affects production. DataOps fosters a culture of shared responsibility between data engineering and data science.

Scaling Teams and Tools

As data grows, so does the need for specialized skills. Some firms hire “machine learning engineers” who blend these roles. Others opt for separate squads but keep them tightly connected. They might also rely on data engineering as a service or hire short-term data analytics engineering services. Larger transformations can call for data engineering consulting services to revamp architecture or address compliance.

Broader Considerations

Governance and Compliance
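
As one illustration of masking personal details, the sketch below replaces an identifier with a keyed hash and partially hides an email address. It uses Python's standard `hmac` and `hashlib` modules; the function names and the masking format are assumptions. Note that this is pseudonymization rather than encryption, and whether it satisfies a given privacy law is a question for the compliance team.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash so records can still be joined."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"
```

The same input and key always give the same pseudonym, so data scientists can still group records by customer without seeing the raw identifier. The key itself (`secret_key`, a bytes value) would need to be stored outside the data set.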

Privacy laws require careful data handling. Data engineers must enforce encryption or masking of personal details. Data scientists must respect user rights when building models. Without strong governance, a firm could face fines or public backlash.

Cloud Computing

The cloud makes it easier to store, process, and share large volumes of data. It also simplifies collaboration. A data engineering team in one city and a data science team in another can access the same environment. Cloud-based services scale on demand, helping you handle sudden spikes in usage.

Data Literacy

Not everyone needs to be an engineer or scientist. But a basic understanding of data helps the whole organization. People can run simple reports or interpret analytics dashboards. This reduces confusion and streamlines requests, because staff know how data is gathered and used.