Business adoption of AI is growing at a rapid pace. This boom, however, comes with many invisible vulnerabilities. AI tools can expose users’ sensitive data through unintended leakage. Hackers can exploit AI plugins to steal credentials and compromise systems.

Governments worldwide increasingly require enterprises to follow regulations for safe AI use. These laws focus heavily on data privacy. Failure to comply can lead to substantial financial penalties. At the same time, today’s consumers expect organizations to develop AI ethically and responsibly. Building trustworthy AI is thus becoming a key expectation.

Security presents another major challenge. In addition to traditional cybersecurity risks, AI systems face unique vulnerabilities of their own. Consequently, companies need to embed security measures into every stage of the AI development lifecycle to protect their systems from unauthorized access and tampering.

This blog covers essential security and compliance considerations for enterprises deploying AI systems. It also explains the regulatory frameworks and governance structures that businesses must pay attention to.

Table of Contents

- Why Should Enterprises Worry? Top AI Threats

- Which Principles Help Mitigate AI Risks

- Pre-Development Stage: How Do We Secure Data in AI Pipelines?

- Development Stage: What Does Secure Model Development Look Like?

- Post Development Stage: How to Protect AI Systems in Production?

- Which Compliance Frameworks Matter the Most?

- How to Develop a Culture of AI Governance

- Future Trends and Preparation

- The Final Word

Why Should Enterprises Worry? Top AI Threats

AI systems create complex security challenges that businesses must address. Companies investing heavily in enterprise AI face a growing number of threats that target different parts of their AI systems.

Data Threats

Malicious actors can poison AI systems by tampering with their training data. These attacks corrupt the systems’ outputs and degrade their performance. Small changes can have outsized effects: poisoning just 1-3% of the training data can hurt an AI’s prediction accuracy.

Data leakage is another serious problem. Sensitive data can be exposed during training or when the model makes predictions. This is a particular risk for enterprise AI services that handle massive quantities of private data.

Model Threats

Model theft poses a threat to intellectual property. Attackers may steal the parameters of an AI model and recreate it, turning the illicit duplicate into a rival overnight. Because building these models requires expensive computing power and expert knowledge, they are a prime target for competitors.

Evasion attacks trick AI systems by subtly altering input data. Small alterations can cause major errors. For example, a few minor marks on a road might cause a self-driving car to swerve dangerously.

Operational Threats

Prompt injection attacks target large language models (LLMs) and require little technical knowledge. Attackers can use plain English instructions to make an LLM leak data, run malicious code, or spread false information.

The AI supply chain also opens up vulnerabilities. Many ransomware groups target software supply chains by planting infected code on open-source platforms such as Hugging Face and GitHub. In addition, hundreds of Model Context Protocol (MCP) servers have weak security settings that could let attackers run arbitrary commands.
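One common safeguard against tampered downloads is to record the SHA-256 digest of a model artifact when it is first vetted and refuse to load any file whose digest no longer matches. The sketch below uses only Python's standard library; the function names and the idea of keeping a pinned digest in deployment config are illustrative assumptions, not a feature of any particular model hub.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> None:
    """Raise if the artifact's digest differs from the digest pinned at review time."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two hex strings
    if not hmac.compare_digest(actual, pinned_sha256.lower()):
        raise ValueError(f"{path.name}: digest mismatch, refusing to load")
```

Calling `verify_artifact` before deserializing any weights means a swapped or modified file fails loudly instead of executing. It pairs well with weight formats that do not run code on load, since pickle-based formats can execute arbitrary code.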

Societal Threats

AI systems can not only inherit human biases but also amplify them, creating dangerous feedback loops. A recent research paper found that people working with biased AI began to undervalue women’s work and overestimate white men’s chances of holding high-level jobs. Deepfakes also pose growing risks.

Enterprises must systematically address these security issues throughout the AI development lifecycle. Inadequate security measures can cost them both money and stakeholder trust. This makes robust security frameworks crucial for responsible enterprise AI development.

Which Principles Help Mitigate AI Risks

“The goal of security is not zero risk. It’s managed risk.”

– Malcolm Harkins, Former Chief Security & Privacy Officer, Intel

Mitigating AI risks requires principles that guide development from start to finish. These principles help create frameworks that protect both organizations and their stakeholders.

Security By Design

“Secure by Design” treats security as a core business requirement rather than just a technical feature. Companies that build AI systems must prioritize security throughout the product lifecycle.

Their security measures must evolve alongside their AI systems so that those systems remain resilient against new threats. These measures should cover supply chain security, documentation, and technical debt management.

Privacy By Default

Privacy by default requires organizations to apply the strictest privacy settings automatically. Under this approach, only the data necessary to achieve a specified purpose is processed.

Setting up privacy by default includes many measures:

- Using opt-in consent mechanisms (for example, consent boxes that are unchecked by default)

- Setting proportionate data retention periods

- Automatically deleting or anonymizing personal data after use

- Avoiding deceptive dark patterns when obtaining user consent
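A few of these measures can be expressed directly in code. The sketch below assumes a hypothetical settings object whose optional processing purposes all default to off, plus a hypothetical 30-day retention period; records older than that period have their direct identifiers stripped. Real anonymization usually needs more than removing identifiers, so treat this as an outline of the pattern, not a compliance tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class PrivacySettings:
    # Every optional processing purpose starts switched off (opt-in, unchecked)
    analytics_consent: bool = False
    marketing_consent: bool = False
    model_training_consent: bool = False
    # Hypothetical retention period; the right value depends on the purpose
    retention: timedelta = timedelta(days=30)


@dataclass
class Record:
    user_id: str
    email: Optional[str]
    collected_at: datetime


def enforce_retention(records: list, settings: PrivacySettings, now: datetime) -> list:
    """Strip direct identifiers from records older than the retention period."""
    cutoff = now - settings.retention
    cleaned = []
    for rec in records:
        if rec.collected_at < cutoff:
            rec = Record(user_id="anonymized", email=None, collected_at=rec.collected_at)
        cleaned.append(rec)
    return cleaned
```

Running `enforce_retention` on a schedule turns the retention policy into something that happens automatically rather than something that depends on someone remembering to do it.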

Transparency and Explainability

Transparency means being open about the use and operation of an AI system. It involves clearly telling users when they are interacting with AI. It also includes sharing basic information about how the system works and what data it uses.

Explainability helps users understand individual AI decisions by giving reasons for specific outcomes in terms they can grasp. This builds trust and helps meet regulations that require clear explanations for AI decisions, especially in high-risk situations.

Accountability

Accountability matters because AI affects real people and their decisions. Organizations must know who is responsible for what the AI does. Without a mechanism to assign liability when outcomes are harmful, trust erodes and risks proliferate.

Organizations must put accountability into practice. They should:

- Assign clear owners for each phase: developing, deploying, and monitoring the AI.

- Conduct regular operational audits against defined ethical and legal standards.

- Create clear paths for affected people to seek recourse if the AI causes damage.

These steps turn responsibility from an idea into action and align technical operations with societal expectations.

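| Principle | Objective | Implementation Measures |
|---|---|---|
| Security By Design | Embed security as an essential business requirement. | Supply chain security, documentation, technical debt management |
| Privacy By Default | Implement the strictest privacy settings automatically. | Opt-in consent, proportionate retention periods, automatic deletion or anonymization, no dark patterns |
| Transparency and Explainability | Be open about the use of AI. Help users understand its decisions. | Disclose AI interactions, share how the system works and what data it uses, explain individual decisions |
| Accountability | Assign clear responsibility for AI outcomes. Enable recourse. | Named owners for each phase, regular audits, recourse paths for affected people |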

Pre-Development Stage: How Do We Secure Data in AI Pipelines?

Protecting data throughout the AI lifecycle helps maintain its integrity. AI pipelines need reliable technical measures and operational protocols to keep data secure.

Encryption

Organizations use encryption as the primary defense for their datasets. Data should be encrypted at rest, in transit, and, where possible, while it is being processed. Homomorphic encryption lets companies perform computations on encrypted data without decrypting it. This reduces security risks by removing the need to expose sensitive information in plaintext during analysis.
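Production homomorphic encryption relies on vetted libraries, but the core idea can be shown with a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. Paillier is simply one example scheme chosen for this sketch; the tiny hard-coded primes make it completely insecure and are for illustration only.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation; real keys use primes of 1024 bits or more."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    # With the common choice g = n + 1, mu reduces to lam^-1 mod n
    mu = pow(lam, -1, n)
    return n, (lam, mu, n)


def encrypt(n: int, m: int) -> int:
    """Encrypt plaintext 0 <= m < n as c = g^m * r^n mod n^2, with g = n + 1."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(priv, c: int) -> int:
    lam, mu, n = priv
    x = pow(c, lam, n * n)
    # L(x) = (x - 1) / n, then multiply by mu to recover m mod n
    return ((x - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)
```

For example, a server holding only `pub` and two ciphertexts can compute `add_encrypted(pub, c1, c2)` without ever seeing the numbers; only the key holder can decrypt the total. Fully homomorphic schemes extend this idea to multiplication as well, at a much higher computational cost.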

Role-based access control (RBAC) and attribute-based access control (ABAC) mechanisms grant specific permissions that limit data exposure. These systems ensure that only authorized staff can access datasets, based on predefined roles, rules, and attributes. For example, researchers might access only anonymized data while data engineers work with raw data.
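The researcher and data engineer example can be sketched as a two-step check: the role decides which sensitivity levels a user may read (RBAC), and attributes of the user and dataset add further conditions (ABAC). The roles, permission strings, and the "same department plus completed privacy training" rule are hypothetical, chosen only to show how the two models combine.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (the RBAC part)
ROLE_PERMISSIONS = {
    "researcher": {"read:anonymized"},
    "data_engineer": {"read:anonymized", "read:raw"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    department: str
    has_privacy_training: bool


@dataclass(frozen=True)
class Dataset:
    name: str
    sensitivity: str  # "anonymized" or "raw"
    owning_department: str


def can_read(user: User, dataset: Dataset) -> bool:
    # RBAC: the role must grant read access at this sensitivity level
    if f"read:{dataset.sensitivity}" not in ROLE_PERMISSIONS.get(user.role, set()):
        return False
    # ABAC: raw data also requires a matching department and completed training
    if dataset.sensitivity == "raw":
        return user.department == dataset.owning_department and user.has_privacy_training
    return True
```

Unknown roles fall through to an empty permission set, so access is denied by default rather than granted by omission.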

Compliance Alignment

AI development must navigate complex regulations, especially around consent management. When companies rely on consent to use personal data in AI training, GDPR requires that consent to be clear and informed. Companies therefore need consent systems that help people understand how AI systems will use their data.
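Where consent is the legal basis, a training pipeline can check every record against a consent register before the data is used. The sketch below assumes a hypothetical register that stores, per data subject, the specific purposes they opted into and whether they later withdrew; anyone without a record is excluded by default.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    subject_id: str
    # Purposes the person explicitly opted into, e.g. {"model_training"}
    purposes: set = field(default_factory=set)
    withdrawn: bool = False


def training_eligible(rows, consents, purpose="model_training"):
    """Keep only rows whose data subject has active consent for this purpose."""
    allowed = {
        c.subject_id
        for c in consents
        if purpose in c.purposes and not c.withdrawn
    }
    # Subjects missing from the register are never in `allowed`, so they are dropped
    return [row for row in rows if row["subject_id"] in allowed]
```

Because consent is checked per purpose, agreeing to analytics does not silently extend to model training.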

Healthcare AI applications must meet strict HIPAA requirements. These applications need technical safeguards such as unique user IDs, emergency access procedures, and automatic logoff. Audit controls should also record all data access and changes.

Threat Mitigation

AI development companies use statistical anomaly detection to determine whether training data has been corrupted. Many also use adversarial testing to expose data flaws that might otherwise go unnoticed.
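As a rough illustration of statistical anomaly detection, the sketch below scores each value of a single numeric feature with the modified z-score, which uses the median and the median absolute deviation (MAD) instead of the mean and standard deviation, because those are easily dragged around by the very outliers being hunted. The 3.5 cutoff is a commonly cited rule of thumb, not a universal setting, and real poisoning can be subtle and multivariate, so this is one signal among several.

```python
import statistics


def robust_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread, so this score cannot separate anything
    # 0.6745 rescales MAD so the scores are comparable to standard z-scores
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]
```

For example, `robust_outliers([10, 11, 9, 10, 12, 10, 11, 95])` flags only index 7, the value 95.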

Bias detection tools examine both training data and model outputs for differences across demographic groups. IBM’s AI Fairness 360 provides open-source algorithms to measure bias across demographic groups. These tools can identify problematic patterns in data that might reinforce societal prejudices.
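Two of the group metrics that AI Fairness 360 reports, statistical parity difference and disparate impact, are simple enough to compute by hand. The sketch below does exactly that on hypothetical lists of binary decisions (1 = favorable) and group labels; it does not use the AIF360 API itself.

```python
def selection_rate(outcomes, groups, group):
    """Share of favorable outcomes (1s) received by one group."""
    picked = [o for o, g in zip(outcomes, groups) if g == group]
    if not picked:
        raise ValueError(f"no rows for group {group!r}")
    return sum(picked) / len(picked)


def fairness_report(outcomes, groups, privileged, unprivileged):
    p_priv = selection_rate(outcomes, groups, privileged)
    p_unpriv = selection_rate(outcomes, groups, unprivileged)
    return {
        # 0.0 means parity; negative means the unprivileged group is favored less
        "statistical_parity_difference": p_unpriv - p_priv,
        # 1.0 means parity; values below 0.8 are often flagged (the "four-fifths rule")
        # Undefined (NaN) when the privileged group never gets a favorable outcome
        "disparate_impact": p_unpriv / p_priv if p_priv else float("nan"),
    }
```

Running the same report on the training labels and on the model's predictions shows whether the model merely reflects a bias in the data or widens it.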

Modern AI development includes data validation pipelines. These pipelines scan data for anomalies and inconsistencies that could otherwise propagate downstream. The checks act as ongoing quality control throughout the AI lifecycle, flagging potential issues before they affect production systems.
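A minimal validation stage can check each incoming row against an expected schema and quarantine failures instead of passing them on. The schema below (column names, types, and ranges) is a hypothetical example; real pipelines usually load such rules from configuration or use a dedicated validation library.

```python
# Hypothetical schema: column -> (expected type, minimum, maximum)
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, 10_000_000.0),
}


def validate_row(row: dict) -> list:
    """Return human-readable problems; an empty list means the row passed."""
    problems = []
    for column, (expected_type, lo, hi) in SCHEMA.items():
        if row.get(column) is None:
            problems.append(f"{column}: missing")
            continue
        value = row[column]
        if not isinstance(value, expected_type):
            problems.append(
                f"{column}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
        elif not lo <= value <= hi:
            problems.append(f"{column}: {value} outside [{lo}, {hi}]")
    unexpected = set(row) - set(SCHEMA)
    if unexpected:
        problems.append(f"unexpected columns: {sorted(unexpected)}")
    return problems


def split_batch(rows):
    """Separate clean rows from quarantined ones so bad data never flows downstream."""
    clean, quarantined = [], []
    for row in rows:
        (quarantined if validate_row(row) else clean).append(row)
    return clean, quarantined
```

Keeping quarantined rows, rather than silently dropping them, gives reviewers the evidence they need to tell a data-entry bug from a deliberate poisoning attempt.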

Development Stage: What Does Secure Model Development Look Like?

Enterprises need systematic ways to identify, test, and mitigate possible flaws in AI systems. Building resilient AI requires strict security practices throughout development.

Threat Modeling for AI

Threat modeling for AI systems requires more than standard software security approaches. Organizations should use a structured framework that identifies typical software flaws as well as AI-specific vulnerabilities.

A thorough threat modeling process must account for new, AI-specific attack methods. Teams should assume that the training data could be compromised and set up mechanisms to detect harmful data entering their systems.

This modeling must cover every part of the AI system, including the entire development and operations pipeline and all data storage locations.

Secure Coding Practices

Security must be embedded into AI model development from the beginning. NIST’s Secure Software Development Framework (SSDF) outlines secure development practices that also apply to AI development.

Secure coding practices for AI include:

- Secure code storage for AI models, weights, and pipelines

- Data validation to prevent manipulation of AI systems

- Tracking data origins to ensure trustworthiness

- Clear input rules with strong validation checks
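For the last item, "clear input rules" usually means an explicit allowlist of what a request may contain, enforced before anything reaches the model. The sketch below assumes a hypothetical inference endpoint that accepts only a `text` field and an optional `temperature` field; the field names and limits are illustrative, not taken from any specific API.

```python
import math

MAX_TEXT_CHARS = 4_000  # hypothetical limit
ALLOWED_FIELDS = {"text", "temperature"}


def validate_request(payload) -> dict:
    """Enforce explicit input rules and return a normalized request; raise on violation."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    extra = set(payload) - ALLOWED_FIELDS
    if extra:
        raise ValueError(f"unknown fields: {sorted(extra)}")

    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        raise ValueError("text must be a non-empty string")
    if len(text) > MAX_TEXT_CHARS:
        raise ValueError("text too long")
    # Reject control characters other than common whitespace
    if any(ord(ch) < 32 and ch not in "\n\t\r" for ch in text):
        raise ValueError("text contains control characters")

    temperature = payload.get("temperature", 0.7)
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(temperature, bool) or not isinstance(temperature, (int, float)):
        raise ValueError("temperature must be a number")
    if not math.isfinite(temperature) or not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be in [0, 1]")

    return {"text": text, "temperature": float(temperature)}
```

Rejecting unknown fields, rather than ignoring them, keeps attackers from smuggling unexpected parameters past the model's wrapper.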

Adversarial Robustness

Testing AI systems against adversarial attacks matters too. These attacks exploit weaknesses that make AI systems behave abnormally. One serious threat is model inversion, in which attackers analyze a model’s outputs to reconstruct sensitive information from its original training data.

Evasion attacks pose another major risk. Defending against them requires methods such as adversarial training, which exposes the model during training to inputs deliberately crafted to mislead it. This way, the model learns to resist similar manipulations later.
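To make both ideas concrete, here is a minimal pure-Python sketch on a two-feature logistic regression. It crafts evasion inputs with the fast gradient sign method (FGSM), one well-known attack, and performs adversarial training by adding an FGSM copy of each example at every training step. The model, data, and hyperparameters (`eps`, `lr`, `epochs`) are illustrative assumptions; real systems would apply the same idea with an ML framework.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Nudge each feature by eps in the direction that increases the log loss."""
    p = predict(w, b, x)
    # For logistic regression, d(log loss)/dx_i = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]


def train(data, epochs=200, lr=0.1, eps=0.0, seed=0):
    """SGD on log loss; when eps > 0, each step also trains on an FGSM example."""
    rng = random.Random(seed)
    w, b = [0.0, 0.0], 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                # Adversarial example crafted against the current model
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    correct = 0
    for x, y in data:
        xa = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += (predict(w, b, xa) >= 0.5) == bool(y)
    return correct / len(data)
```

Comparing `accuracy(w, b, test_data, eps=...)` for a model trained with `eps=0` against one trained with `eps>0` shows how much robustness the augmentation buys on a given dataset, usually at some cost in clean accuracy.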

Compliance in Training

Good documentation supports both security and compliance. AI documentation should record all development work, design choices, data sources, model architecture, and training methods. These records help organizations demonstrate compliance with laws worldwide.

Organizations must keep these model training records detailed and up to date for compliance purposes. Regular risk assessments should also check for data privacy issues and security weaknesses.
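One lightweight way to keep such records consistent is to capture them as structured data at training time. The sketch below uses a hypothetical `TrainingRecord` whose fields loosely follow the items listed above; it is not a format mandated by any regulation. Serializing to canonical JSON and hashing it gives each record a fingerprint, so later edits become detectable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class TrainingRecord:
    model_name: str
    model_version: str
    architecture: str
    data_sources: list       # e.g. [{"name": ..., "sha256": ...}]
    design_decisions: list   # short notes on choices made and why
    hyperparameters: dict
    risk_assessment: dict = field(default_factory=dict)
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        # sort_keys makes the output canonical, so equal records serialize identically
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def fingerprint(self) -> str:
        """SHA-256 of the canonical JSON; any later edit changes this value."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()
```

Storing the fingerprint alongside the deployed model ties each production version back to the exact documentation that described it.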

Post Development Stage: How to Protect AI Systems in Production?

AI systems need protection after deployment. This critical phase brings unique challenges as models interact with real-world data and unpredictable users.

Infrastructure Hardening

The backbone of AI infrastructure protection lies in securing the CI/CD pipeline. Organizations should enforce strict access controls based on the principle of least privilege: people and systems get only the permissions they absolutely need. This restricts pipeline access and limits the damage if one part is compromised. Secrets such as keys must be encrypted and rotated regularly.

AI workloads often run in containers, which need specialized security. Runtime security tools actively monitor and protect applications during execution. A web application firewall (WAF) filters incoming web traffic, blocking common attacks before they reach the AI application.

Runtime application self-protection (RASP) runs inside the application, monitoring its behavior in real time to detect and stop unusual activity.

Runtime Monitoring

Automated drift detection is critical to AI governance. AI models can degrade over time if the data they process starts to deviate from the data they were trained on. Comparing production data with training data over time helps determine whether drift happened gradually or suddenly.
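One common way to quantify drift for a numeric feature is the Population Stability Index (PSI), which compares how values fall into bins in the training data versus production. The sketch below is a minimal pure-Python version; the bin count and the usual reading of the score (below 0.1 stable, above 0.25 a significant shift) are industry rules of thumb, not thresholds set by this article.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a training sample and a production sample."""
    # Bin edges come from the training (expected) data's range
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range production values into the edge bins
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0)
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Computing `psi` over successive daily or weekly windows of production data, rather than once, provides the time-based view: a slow upward trend points to gradual drift, while a sudden jump points to an abrupt change.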

Anomaly monitoring helps identify:

- Data poisoning attempts through suspicious patterns in inputs

- Unexpected deviations in model behavior suggesting adversarial attacks

- Unusual API calls indicating exploitation attempts

Audit Trails

Enterprises must maintain meticulous audit logs that chronicle user interactions and administrative activities. Each log entry should record who interacted with the system, what action they performed, when it happened, where it originated, and which resources were involved. These audit trails serve as evidence during regulatory examinations and internal reviews, demonstrating conformity with strict AI regulations.
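The sketch below records the who, what, when, where, and which-resources fields for each event. It also chains every entry to the previous one with a SHA-256 hash, which is an extra assumption beyond what the text prescribes: it makes edits to earlier entries detectable. The class is an in-memory illustration; production logs would go to append-only or write-once storage, which also protects against truncation at the end of the chain.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained audit log kept in memory for illustration."""

    def __init__(self):
        self.entries = []

    def record(self, who, what, where, resources, when=None):
        entry = {
            "who": who,
            "what": what,
            "when": when or datetime.now(timezone.utc).isoformat(),
            "where": where,
            "resources": list(resources),
            # Link to the previous entry so altering earlier entries breaks the chain
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and link; return False at the first inconsistency."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

During an audit, running `verify()` shows reviewers that the trail they are reading is the one the system actually wrote.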

Which Compliance Frameworks Matter the Most?

Organizations need to deal with complex compliance frameworks when implementing AI technologies. A proper understanding of applicable regulations helps improve governance and reduce legal risks.

Global Regulations: GDPR and EU AI Act

GDPR has a substantial effect on AI systems that process the personal data of individuals in the EU. Article 22 gives individuals the right not to be subject to decisions based solely on automated processing when those decisions produce legal effects or similarly significant effects on them.

The EU AI Act provides a comprehensive risk-based framework with four categories: unacceptable, high, limited, and minimal risk. High-risk AI systems must meet extensive requirements, including:

- Risk assessment and mitigation systems

- High-quality datasets to reduce discriminatory outcomes

- Detailed documentation and traceability measures

- Appropriate human oversight

Industry Standards: NIST AI RMF, ISO 42001

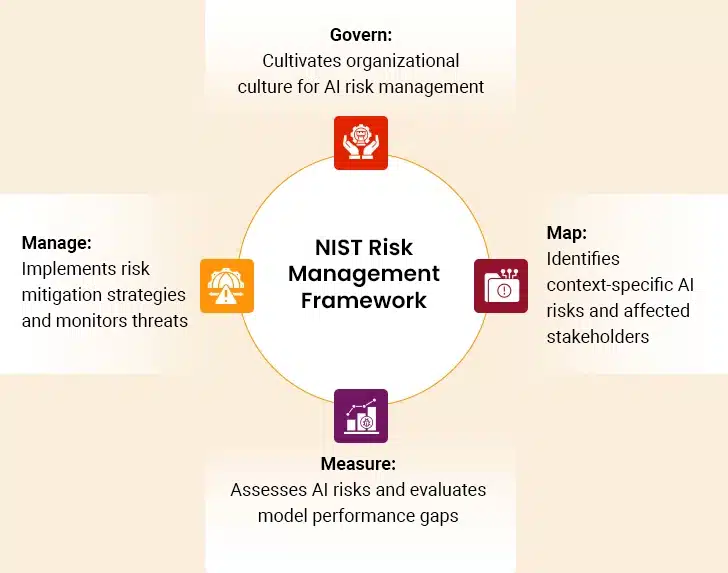

The NIST AI Risk Management Framework (AI RMF), released in January 2023, provides voluntary guidelines organized around four core functions: Govern, Map, Measure, and Manage. The framework helps organizations manage risks associated with artificial intelligence.

ISO/IEC 42001 is the world’s first AI management system standard. It provides structured governance for responsible AI deployment. The standard uses a Plan-Do-Check-Act methodology to help organizations monitor systems and adapt to new challenges.

In the United States, healthcare organizations must also ensure that their AI systems follow HIPAA by implementing unique user identification, emergency access procedures, automatic logoff functions, and comprehensive audit controls.

How to Develop a Culture of AI Governance

Organizations need more than just policies to build an effective AI governance culture. To develop AI successfully, companies must create clear frameworks that define who does what across departments.

Cross-Functional Ownership

A diverse AI oversight committee is integral to effective governance. This committee brings together people from different parts of the organization. It should include leaders from IT, security, and legal teams, along with representatives from the business, HR, and sales, to ensure complete oversight.

The committee is responsible for:

- Identifying and evaluating potential AI use cases

- Assessing risks in proposed implementations

- Approving or declining use cases based on risk levels

- Making decisions about governance frameworks

Continuous Education

Organizations that cannot share threat intelligence in real time increasingly fall behind in decision-making. Enterprises developing AI should therefore run education programs that help employees understand governance policies, so they can better identify both risks and opportunities.

Professional certifications create structured learning paths. ISACA’s Advanced in AI Security Management certification gives professionals the tools to spot, assess, and reduce risks in enterprise AI solutions. IBM’s Generative AI for Cybersecurity Professionals program teaches basic security concepts for AI implementation.

Future Trends and Preparation

Global AI regulations are moving quickly toward convergence. This creates both challenges and opportunities for organizations using advanced technologies. Jurisdictions now recognize AI’s cross-border nature, and international coordination on governance frameworks has become more visible.

Mutual recognition agreements between regulatory bodies can make compliance easier for multinational organizations. Instead of navigating completely different frameworks for each market, companies can rely on core principles that stay consistent across jurisdictions. These agreements focus on risk-based frameworks, transparency requirements, and ethical principles that transcend cultural boundaries.

AI itself has become a powerful tool for boosting security. Enterprise AI development solutions now feature advanced anomaly detection capabilities that can spot potential threats faster than human analysts. These systems learn continuously from new attack patterns and can adapt defenses without manual intervention.

Organizations need strategic foresight to prepare for this changing landscape. They should create dedicated roles that focus on AI governance and regulatory monitoring. They should also implement modular AI architectures that allow flexible adaptation as requirements change in different markets.

Enterprise AI development companies can help shape emerging frameworks by partnering with regulators and participating in public consultations. Forward-looking organizations maintain internal standards that exceed current regulatory minimums because they anticipate stricter requirements over time.

Preparing for this future will require businesses to invest in workforce education. Their teams must understand both technical security aspects and evolving compliance requirements to develop truly resilient enterprise AI systems.

The Final Word

Organizations adopting AI technologies must keep security and compliance as top priorities during development. AI systems offer significant benefits but also bring risks that need systematic management.

Enterprise AI development services need to strike a delicate balance between innovation and risk management. Organizations that integrate security and compliance considerations throughout their AI lifecycles will create more resilient, trustworthy systems. These systems will deliver lasting value while avoiding the devastating effects of security failures and compliance issues.