What if your everyday devices could react instantly without waiting? Edge AI has made this possible. It has changed how we compute by processing data locally on devices instead of sending it to cloud servers. This technology brings AI capabilities right to the device and solves challenges in areas where quick processing matters the most.

Edge AI computing differs from cloud-based AI systems. It delivers better speed and smoother performance. It also reduces delays and saves money. The technology lets users make instant decisions without requiring internet access or a central cloud. New advances in AI continue to drive its growth across various industries.

However, adding AI to edge devices has its challenges. These devices typically have limited power and memory. The shift from test environments to actual deployments is also hard. Organizations need to fix these issues to implement edge AI solutions successfully.

This blog talks about the essential features of edge AI and its real-world applications. It also explores how business leaders can deploy this technology by conquering common challenges. Let’s get started.

What Is Edge AI?

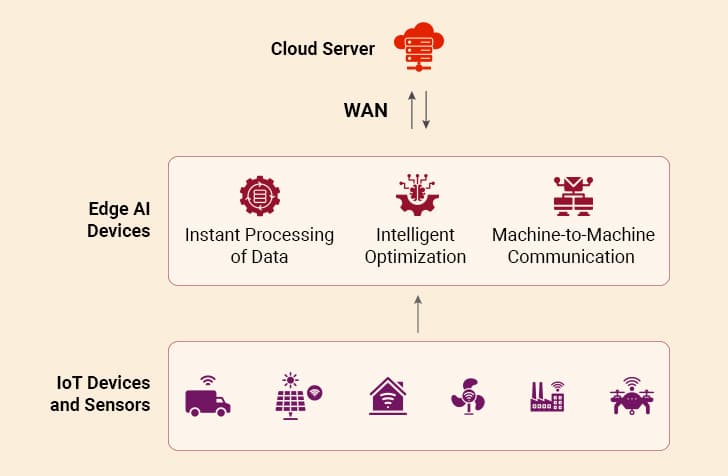

Edge AI marks a change in how artificial intelligence works within our technology ecosystem. This approach puts AI algorithms and models directly on local devices and sensors. As a result, data is analyzed close to where it is produced and not sent somewhere else for processing.

AI in edge computing is different from regular cloud-based AI systems. Traditional AI systems collect data from various sources and transmit it to the cloud infrastructure for processing. The results are then sent back to the client’s device.

This centralized system works well for complex calculations, but sending data back and forth takes time. Edge AI handles information right where the device creates it, which eliminates the round trip that data usually takes.

Edge AI has several key parts that work together:

- Data Collection Devices: Sensors gather raw data such as visuals, audio, or other inputs for processing.

- Edge Devices: These devices (e.g., smartphones, autonomous vehicles) have sufficient computing power to run AI models locally.

- AI Models: These specialized algorithms can run efficiently on edge hardware with limited resources.

- Communication Protocols: Protocols and interfaces such as REST APIs enable efficient transfer and synchronization of data between edge and cloud environments.

AI in edge computing involves many important steps that enable real-time processing:

- Data Collection: Edge devices gather raw data from sensors. This data acts as input for AI processing.

- Local Processing: AI models deployed on edge devices analyze this data in real time, with no need for external connections.

- Real-Time Action: The system takes immediate action based on the output of the AI model. Sometimes, it sends processed data to the cloud for advanced analysis.
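The three steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming a simulated temperature sensor and a simple threshold rule standing in for a real AI model. The names `read_sensor`, `run_model`, `act`, and `edge_loop` are hypothetical and do not come from any library.

```python
import random

def read_sensor(rng):
    """Data collection: simulate one temperature reading in degrees Celsius."""
    return rng.uniform(20.0, 100.0)

def run_model(reading, threshold=80.0):
    """Local processing: a threshold rule stands in for an on-device model."""
    return "overheat" if reading > threshold else "normal"

def act(label):
    """Real-time action: decide locally what to do with the model output."""
    return "shut_down" if label == "overheat" else "continue"

def edge_loop(steps, seed=0):
    """Run the collect -> process -> act cycle without any network call."""
    rng = random.Random(seed)
    actions = []
    for _ in range(steps):
        reading = read_sensor(rng)
        label = run_model(reading)
        actions.append(act(label))
    return actions

if __name__ == "__main__":
    print(edge_loop(5))
```

In a real deployment, `run_model` would call an optimized model on the device, and only selected results would be forwarded to the cloud.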

Edge computing and AI help solve modern business challenges. Edge devices provide feedback and responses within milliseconds. The system works regardless of whether the internet is available or not. This makes it appropriate for remote areas that suffer from poor connectivity.

Why Does Edge AI Matter Today?

Modern companies are using AI in edge computing to overcome the issues that are common in traditional cloud-based systems. This approach helps them address four significant needs of present-day applications.

1. Low Latency

Edge devices process data within milliseconds. This creates real-time feedback that cloud-dependent systems cannot provide. Quick processing becomes essential for applications that require instant decisions.

Self-driving cars show why swift data processing matters. These vehicles must respond to traffic signals, reckless drivers, and lane changes in real time. Quick decision-making protects passengers and can save lives. AI at the edge removes the round trip to distant servers that would otherwise cause delays.

2. Bandwidth Efficiency

Older AI systems send massive amounts of raw data to cloud servers. This method makes a system inefficient over time. AI in edge computing fixes this by processing data locally and sending only key insights and decisions to central servers. This approach to data sharing saves substantial bandwidth. It also reduces operating costs.

Companies working in areas with limited internet find these savings valuable. Smart bandwidth also allows these businesses to implement advanced AI in places they couldn’t before. This opens up new possibilities.
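The bandwidth saving described in this section can be made concrete with a rough sketch. It assumes a hypothetical batch of 1,000 integer vibration readings and compares the size of the raw batch with the size of a small summary when both are encoded as JSON. The function names and the threshold are illustrative only.

```python
import json

def summarize(readings, threshold):
    """Reduce a batch of raw readings to the few values the cloud needs."""
    return {
        "count": len(readings),
        "mean": round(sum(readings) / len(readings), 2),
        "max": max(readings),
        "alerts": sum(1 for r in readings if r > threshold),
    }

def payload_bytes(obj):
    """Size of the JSON-encoded payload in bytes."""
    return len(json.dumps(obj).encode("utf-8"))

# Hypothetical batch: 1,000 readings in arbitrary integer units.
raw = [500 + (i % 50) * 10 for i in range(1000)]
summary = summarize(raw, threshold=950)

raw_size = payload_bytes(raw)
summary_size = payload_bytes(summary)
print(f"raw: {raw_size} bytes, summary: {summary_size} bytes")
```

The exact ratio depends on the data and the encoding, but the summary stays roughly constant in size while the raw batch grows with every reading.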

3. Enhanced Privacy and Security

Privacy concerns increasingly influence technology choices as global data protection rules evolve. AI in edge computing keeps sensitive information localized. It does not send it across cloud networks where others might intercept it.

Local data processing reduces cybersecurity risks. It cuts down the chances of data mishandling and reinforces security. AI for edge computing also helps maintain compliance in industries subject to strict laws by processing and storing data locally. This explains why healthcare and financial services find this technology immensely useful.

4. Offline Reliability

Edge AI does not depend on constant internet access, and this might be its biggest advantage. Cloud systems stop working without the internet, whereas AI in edge computing keeps running even offline. This strength matters for systems that work in areas with poor network connectivity.

Agricultural sensors, emergency response tools, and exploration gear benefit from this feature. Local processing keeps these systems running regardless of internet availability. It makes sure they work even in challenging environments. This reliability helps during network outages from natural disasters or infrastructure failures.

Table Summarizing the Key Features of Edge AI

| Advantage | Business Impact | Examples |
|---|---|---|
| Low Latency | Helps with instant decisions that are impossible to achieve with cloud-dependent systems. | Self-driving cars |
| Bandwidth Efficiency | Reduces data transmission volume. This saves bandwidth and lowers operating costs. | Deployments in areas with limited internet |
| Improved Data Privacy and Security | Reduces cybersecurity risks. Eases compliance with data protection laws. | Healthcare and financial services |
| Offline Reliability | Ensures smooth operation in areas with poor connectivity. | Agricultural sensors, emergency response tools, exploration gear |

Which Critical Factors Determine Success in Edge AI Deployment?

“The true potential of edge really gets realized when an enterprise can efficiently deploy to the edge, operate, manage all these thousands of end points, etc., throughout the whole process of what they are doing, with a consistent framework.”

-Hillery Hunter, CTO, IBM Infrastructure

Organizations need to think about several key factors when they deploy AI at the network edge. These factors determine if their projects are likely to bring business value or fail.

I. Understanding Hardware Capabilities and Constraints

Edge devices do not work like regular data centers. They have less processing power, memory, and energy to work with. These limitations decide what AI models you can actually use.

Edge devices must work within strict power consumption limits. This is especially true for battery-powered devices or those in remote places with restricted access to energy. So, you need to find the right balance between computing capability and power consumption. Teams must assess processing capabilities, memory limits, and power needs before picking hardware components that fit their needs.

Space also creates challenges. Edge devices often need to fit within predetermined spaces or work in tough environments. They need protection from heat, moisture, and vibration. These requirements make hardware choices more complex.

Teams must keep these factors in mind when choosing hardware:

- Processing Power: How much computational power does your AI model need?

- Memory Requirements: Can the device store and run the model?

- Power Limits: Can the system operate within energy constraints?

- Environmental Needs: Can the hardware survive the deployment conditions?

- Size Restrictions: Does the solution fit the available physical space?
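The memory and power questions in this checklist lend themselves to a quick back-of-the-envelope check. The sketch below is a rough estimate under stated assumptions: it counts only weight storage (parameters times bits per parameter), reserves half of the RAM for everything else, and uses a hypothetical `DeviceSpec` with made-up numbers.

```python
from dataclasses import dataclass

@dataclass
class DeviceSpec:
    """Hypothetical description of an edge device's resources."""
    ram_mb: float          # memory available to the application
    power_budget_w: float  # sustained power the device may draw

def model_size_mb(num_params, bits_per_param):
    """Approximate weight storage: parameters x bits, converted to megabytes."""
    return num_params * bits_per_param / 8 / 1_000_000

def fits(device, num_params, bits_per_param, est_power_w, headroom=0.5):
    """True if weights use at most `headroom` of RAM and power is within budget."""
    size_ok = model_size_mb(num_params, bits_per_param) <= device.ram_mb * headroom
    power_ok = est_power_w <= device.power_budget_w
    return size_ok and power_ok

device = DeviceSpec(ram_mb=16, power_budget_w=2.0)
# A 4-million-parameter model at 32 bits needs 16 MB, above the 8 MB allowance.
fits_fp32 = fits(device, 4_000_000, 32, est_power_w=1.5)
# The same model at 8 bits needs 4 MB, which is within the allowance.
fits_int8 = fits(device, 4_000_000, 8, est_power_w=1.5)
print(fits_fp32, fits_int8)
```

Real sizing also has to account for activations, the runtime itself, and measured power draw, so treat this as a first filter rather than a final answer.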

II. Optimizing AI Models for Edge Deployments

After sorting out the hardware, the next task is to make AI models work efficiently on edge devices. Regular AI models built for the cloud use too many resources. They need adjustments to run on edge devices.

Picking the right model is, therefore, crucial. Models like ShuffleNet, MobileNet, and YOLO give good results. These models don’t require massive computing power. They work well in places with limited resources. This makes them perfect for edge devices.

Several methods can be used to adjust AI models for edge networks:

- Quantization: It stores model data in lower-precision numbers (for example, 8-bit or 4-bit values instead of 32-bit). This reduces model size, usually with only a small loss in accuracy.

- Pruning: This technique removes less important parameters. This makes models simpler, often with little loss in accuracy.

- Knowledge Distillation: This method takes knowledge from bigger models and puts it in smaller ones. There is little impact on accuracy.
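To make the first two methods more concrete, here is a minimal pure-Python sketch of symmetric 8-bit quantization and magnitude-based pruning on a small, made-up list of weights. It illustrates the idea only. Frameworks such as those named in this section apply these techniques per layer with far more care, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back if all zero
    q = [round(w / scale) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]

def prune_by_magnitude(weights, fraction):
    """Magnitude pruning: zero out the given fraction of smallest weights."""
    k = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

# Hypothetical weights for illustration.
weights = [0.82, -0.01, 0.40, -0.65, 0.03, 0.27, -0.002, 0.91]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

pruned = prune_by_magnitude(weights, 0.25)
print(q, max_error, pruned)
```

Each quantized weight needs one byte instead of four, and the rounding error per weight is bounded by half the scale. Pruning here removes the two smallest-magnitude weights, which contribute least to the output.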

Special frameworks help with these optimizations. PyTorch Mobile, TensorFlow Lite, and ONNX Runtime make edge deployment easier by simplifying technical complexities. This allows developers to focus on what their application needs.

III. Security and Privacy Considerations

AI for edge computing operates outside the boundaries of a conventional data center. Edge devices generally run in physically accessible locations that lack protections such as firewalls. This creates complex security issues. Teams must use strong measures that guard against both physical tampering and remote attacks.

Teams can ensure data privacy using techniques such as:

- Differential Privacy: The technique adds calibrated noise to datasets. This prevents the identification of individual information while preserving statistical utility.

- Homomorphic Encryption: This enables computations on encrypted data without the need to decrypt it.
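As an illustration of the first technique, the sketch below releases a differentially private mean using the Laplace mechanism. It is a simplified example that assumes values are clipped to a known range and that the number of records is public. The heart-rate data, the range, and the `epsilon` value are all hypothetical.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_mean(values, lower, upper, epsilon, rng):
    """Differentially private mean of values clipped to [lower, upper]."""
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    # Changing one record moves the mean by at most this amount (sensitivity).
    sensitivity = (upper - lower) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical heart-rate readings collected on a wearable device.
rng = random.Random(42)
heart_rates = [60 + (i % 40) for i in range(1000)]
true_value = sum(heart_rates) / len(heart_rates)
released = private_mean(heart_rates, lower=40, upper=180, epsilon=1.0, rng=rng)
print(true_value, released)
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger dataset reduces the noise needed for the same guarantee.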

Communication between edge devices and cloud infrastructure creates another vulnerability. Protocols such as SSH and TLS (the successor to SSL) help with the safe transmission of data. Strong authentication and access controls make sure only authorized users access sensitive information.

A zero-trust framework boosts existing security strategies. This approach validates every access attempt regardless of where it is coming from. This reduces the chances of unauthorized access.
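One small building block of such a framework is checking every incoming message, even from a device that has connected before. The sketch below shows one possible approach using an HMAC tag from Python's standard library. The device registry, key, and message format are assumptions for illustration, and a production system would also need key rotation and replay protection.

```python
import hashlib
import hmac

def sign(message: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def verify_request(device_id, message, tag, device_keys):
    """Accept a request only if the device is known and the tag matches."""
    key = device_keys.get(device_id)
    if key is None:
        return False
    expected = sign(message, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)

# Hypothetical registry of per-device secrets (demo value only).
device_keys = {"sensor-01": b"demo-key-not-for-production"}

message = b'{"temp": 71.5}'
tag = sign(message, device_keys["sensor-01"])

accepted = verify_request("sensor-01", message, tag, device_keys)
tampered = verify_request("sensor-01", b'{"temp": 20.0}', tag, device_keys)
unknown = verify_request("sensor-99", message, tag, device_keys)
print(accepted, tampered, unknown)
```

The valid request is accepted, while the altered message and the unregistered device are both rejected, regardless of where the request originates.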

Edge and cloud components require careful coordination. Rather than replacing cloud infrastructure entirely, edge computing and AI implementations create complementary architectures. Time-sensitive processing occurs locally while data flows regularly to cloud systems for long-term storage and analysis.

What Are the Real-World Applications of Edge AI?

Edge AI is moving from a theoretical concept to real-life applications that bring clear benefits. These applications show how processing intelligence at the device level changes operations and opens new possibilities.

1. Manufacturing

AI for edge computing helps manufacturing companies predict faults through vibration and thermal sensors. These sensors spot problems before the equipment breaks down. This allows manufacturers to improve maintenance planning.
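A very simple version of this kind of fault detection can be sketched as a rolling z-score check that runs entirely on the device. This is an illustrative stand-in for a trained model. The class name, window size, threshold, and readings are all assumptions.

```python
from collections import deque
from statistics import mean, stdev

class VibrationMonitor:
    """Flag readings that deviate strongly from the recent baseline."""

    def __init__(self, window=20, z_threshold=3.0):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, reading):
        """Return True if the reading is anomalous relative to the window."""
        anomalous = False
        if len(self.history) >= 5:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline, so a fault
            # does not become part of what counts as normal.
            self.history.append(reading)
        return anomalous

# Hypothetical vibration amplitudes with one spike at position 10.
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 4.0, 1.0]
monitor = VibrationMonitor()
flags = [monitor.update(r) for r in readings]
print(flags)
```

Because the check needs only a short window of recent values, it fits comfortably on constrained hardware and can raise an alert without contacting the cloud.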

BMW’s facilities use cameras that provide a complete view of assembly lines for instant quality checks. AI algorithms check feeds from these cameras to find product defects. This considerably reduces the need for manual inspection. Many manufacturers also use visual analysis tools to find abnormal wear patterns on machine parts. This further improves maintenance.

2. Autonomous Vehicles and Drones

Self-driving vehicles process enormous amounts of sensor data from cameras and LiDAR systems. AI for edge computing handles this flood of information locally. This allows vehicles to detect obstacles and trigger emergency responses. The system identifies road signs, lane markings, and potential hazards without being dependent on cloud servers.

Drones also benefit from AI in edge computing, which processes sensor data directly onboard. This allows these drones to maintain proper orientation and dodge obstacles even when connectivity drops. This autonomy becomes important during emergency responses, where reliable communication cannot be guaranteed.

3. Retail and Logistics

Retail businesses use edge computing and AI to create smooth customer experiences. Edge solutions power automated checkout systems that recognize products without scanning barcodes. Smart shelves with sensors keep track of stock levels and alert staff when products run low. This helps stores predict demand more accurately.

Robots in warehouses use edge AI to direct themselves in complex spaces. This technology allows them to choose the best paths for collecting and moving products. There is no need for continuous access to the cloud.

4. Healthcare

Edge AI changes healthcare delivery in several ways. Wearable devices track important metrics (e.g., blood pressure, heart rate, and oxygen levels). They process this data locally to find unusual patterns and alert caregivers well in time.

Edge-powered endoscopy provides detailed images through camera sensors for live analysis. This helps doctors perform procedures accurately. Humanoid robots use neural networks to detect falls among elderly patients. These systems alert care teams instantly during an emergency while keeping watch on the patient.

5. Smart Cities

Cities generate massive data streams that edge AI processes locally for immediate action. Traffic management systems analyze camera feeds and sensors to adjust signal timing and redirect traffic flow. This reduces congestion to a significant extent. Local processing avoids the latency and bandwidth constraints that make cloud-based traffic control impractical.

Table Summarizing Key Applications of Edge AI

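| Application | Primary Benefit of Edge AI | Examples |
|---|---|---|
| Manufacturing | Reduces downtime and manual checks. Improves maintenance and quality. | Fault prediction with vibration and thermal sensors, camera-based quality checks |
| Autonomous Vehicles and Drones | Allows instant obstacle detection and safety responses. Works offline. | Road sign and hazard detection, onboard drone navigation |
| Retail and Logistics | Enhances customer experience. Helps maintain the right stock levels. | Automated checkout, smart shelves, warehouse robots |
| Healthcare | Helps with timely alerts and interventions. Improves accuracy. | Wearable health monitors, edge-powered endoscopy, fall detection |
| Smart Cities | Reduces congestion and emissions. Allows immediate responses. | Traffic management with adaptive signal timing |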

Which Future Trends Can We Expect in Edge AI?

AI in edge computing is seeing unprecedented advancements, and key technological developments will shape its future. Several trends have emerged that will define the next generation of intelligent edge systems.

The combination of 5G networks and edge computing can be transformative. 5G targets latencies as low as one millisecond, which creates favorable conditions for time-sensitive edge AI. Processing data directly at the network edge eliminates bottlenecks and makes previously impractical applications feasible. The distributed architecture of 5G fits edge processing needs, and both technologies enhance each other through shared infrastructure and operational models.

The development of specialized AI chips has also sped up. Traditional processors show significant limitations for edge deployments. This has led to the creation of purpose-built AI accelerators that maintain a balance between performance and power efficiency. These specialized chips come with neural processing units designed specifically for AI workloads that dramatically improve inference speed while using less energy.

Older edge AI implementations suffer from interoperability problems due to their fragmented nature. Major technology providers are now establishing common frameworks and protocols to address this. The Edge Computing Consortium and Open Edge Computing Initiative are developing reference architectures and interoperability standards. Their efforts will help create unified platforms that simplify development and deployment in a variety of environments.

AI for edge computing will focus more on autonomous operation capabilities. Soon, we’ll have self-optimizing networks that adapt to changing conditions without human intervention. The result will be truly intelligent edge ecosystems that progress with the environments they monitor and control.

Conclusion

Edge AI changes how intelligence works in our connected world. Companies need well-planned strategies to implement the technology successfully within their environment. Teams of hardware specialists, AI developers, security experts, and business stakeholders must work together to build solutions that deliver results.

Today, computing intelligence is moving to the edge. The opportunities are abundant for those who approach edge AI deployment with the right strategy and realistic expectations.