Cloud computing architectures built for yesterday’s needs are straining under today’s demands. Systems that once powered businesses efficiently now face three pressures: artificial intelligence requires more capable infrastructure, environmental concerns call for greener designs, and multi-cloud setups depend on interoperability.

Organizations are shifting strategies in response. Some are adopting hybrid and multi-cloud models to avoid vendor lock-in. Others are using AI-driven automation to manage and scale cloud resources. This isn’t just an upgrade—it’s a complete architectural revolution. The future of cloud architecture hinges on balancing computational power with sustainability, flexibility, and cross-platform collaboration. This will reshape everything from data centers to software stacks.

This article explains how organizations can design smart cloud architectures that balance three crucial elements: powerful AI capabilities, environmental responsibility, and seamless cross-platform integration.

Table of Contents

Cloud Architecture for AI Workloads

Designing for Sustainability in Cloud Architecture

Interoperability and Multi-Cloud Native Design

Future-Forward Components of Cloud Computing

Cloud Architecture for AI Workloads

AI workloads are completely reshaping cloud architecture. Unlike traditional applications, AI-based systems need a specialized cloud infrastructure that provides massive computational power. Organizations must understand these requirements to adapt to evolving demands. Here’s what makes AI workloads unique:

Specialized Acceleration Requirements

AI workloads are different from regular computing tasks. They need special accelerators to handle complex matrix calculations.

GPUs (graphics processing units) and TPUs (tensor processing units) are specifically built to tackle these calculations. These offer several performance advantages compared to regular CPUs.

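TPUs, developed by Google, are application-specific chips optimized for deep learning and neural networks. They are built around matrix multiply units that excel at high-volume tensor math. GPUs work differently: they spread work across thousands of smaller cores in parallel, and recent NVIDIA GPUs add dedicated Tensor Cores for matrix operations, which speeds up AI and ML tasks.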

By integrating these accelerators into the cloud architecture, organizations can improve computational throughput and get more work done per watt on AI workloads.
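To give a sense of why matrix math dominates AI workloads, here is a rough back-of-the-envelope sketch. It uses the standard convention of counting 2·m·k·n floating-point operations for an (m × k) by (k × n) matrix product. The layer size and the device throughput figure are hypothetical and only illustrate the arithmetic.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Floating-point operations for an (m x k) @ (k x n) product.

    Each of the m*n outputs needs k multiplies and k adds, counted as 2k.
    """
    return 2 * m * k * n


def seconds_at(flops: int, device_flops_per_sec: float) -> float:
    """Idealized runtime if the device sustained the given throughput."""
    return flops / device_flops_per_sec


# Hypothetical: a batch of 1024 inputs through one 4096 x 4096 dense layer.
layer_flops = matmul_flops(1024, 4096, 4096)
print(f"{layer_flops:,} FLOPs for a single layer and a single batch")

# Hypothetical sustained throughput of 1e12 FLOP/s (not a real device spec).
print(f"{seconds_at(layer_flops, 1e12):.4f} s at 1 TFLOP/s")
```

A full model repeats this for many layers and many batches, which is why hardware built specifically for matrix operations pays off.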

MLOps: A New Paradigm for Operations

MLOps (Machine Learning Operations) involves practices that simplify the entire machine learning lifecycle. Though MLOps improves efficiency, it brings new challenges that traditional components of the cloud were not built to handle.

MLOps requires cloud architectures that support:

- Experimentation: Teams must be able to try various ML algorithms to see what works best. Cloud architectures should support such experimentation while enabling code reusability.

- Complex Testing Requirements: ML workloads have complex testing needs (e.g., data validation, model validation). The cloud should facilitate this testing.

- Automated Deployment Pipelines: ML systems need multi-step pipelines to retrain and deploy automatically.

- Continuous Monitoring: Models can degrade over time as data profiles change. Teams must be able to track their performance in real-time.
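As an illustration of the continuous monitoring requirement, the sketch below compares a live feature sample against its training baseline using the Population Stability Index (PSI). This is one common drift measure, not something any specific platform prescribes. The bin count, the floor for empty bins, and the 0.25 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # feature values seen in training
live = [0.5 + i / 200 for i in range(100)]     # live traffic has shifted upward

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb alert level
if psi(baseline, live) > DRIFT_THRESHOLD:
    print("drift detected: consider triggering the retraining pipeline")
```

In a real pipeline, a check like this would run on a schedule and feed the automated retraining step rather than print a message.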

Modern cloud computing platforms include MLOps tools to make ML workflows run smoothly. For example, Google Cloud’s Vertex AI provides MLOps tooling that helps teams collaborate on AI and ML projects. It also helps improve model performance through monitoring and alerts.

Hardware acceleration and MLOps are driving a big change in cloud system architecture. Organizations must adapt their cloud environments as AI becomes central to their business operations. These environments must provide massive computing power and solid operational frameworks to help with successful AI implementation.

Designing for Sustainability in Cloud Architecture

Sustainability is no longer a nice-to-have feature; it is non-negotiable as more companies move to the cloud. By some estimates, cloud computing now releases more greenhouse gases than the commercial aviation industry. Cloud architects can’t ignore this reality: they must weave environmental responsibility into every aspect of their designs.

1. Why Sustainability Is an Essential Design Principle Now

Organizations are paying attention to sustainability when planning their cloud computing architecture, for several reasons:

- Regulatory Compliance: A growing number of governments require companies to track, report, and reduce their emissions.

- Financial Benefits: Energy-efficient architecture cuts both emissions and operational costs. This directly boosts the bottom line.

- Brand Reputation: Green practices allow organizations to build a better brand image. This helps attract partners and clients who care about environmental impact.

2. Sustainable Infrastructure: ARM Chips, Carbon-Aware Computing, and Green Data Centers

Cloud architecture for AI increasingly uses hardware built for energy efficiency. ARM-based processors play a growing role in sustainable cloud computing. For many workloads, they deliver more performance per watt than traditional x86 processors. That efficiency makes them a good fit for AI workloads where energy use is a primary constraint.

Carbon-aware computing is another advance in green cloud infrastructure. This approach schedules time-flexible tasks (e.g., AI training or batch processing) for periods when the grid’s electricity has lower carbon intensity. By moving heavy workloads to low-carbon periods, companies can cut emissions significantly.
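The scheduling idea can be sketched in a few lines. Given an hourly carbon-intensity forecast, the function below finds the contiguous window with the lowest total intensity for a job of a known duration. The forecast values are made up for illustration; a real scheduler would pull them from a grid-data provider and also respect deadlines.

```python
from typing import Sequence


def greenest_start(forecast: Sequence[float], duration: int) -> int:
    """Start index of the contiguous window with the lowest total intensity.

    `forecast` holds hourly grid carbon intensity (gCO2e/kWh);
    `duration` is the job length in hours.
    """
    if not 0 < duration <= len(forecast):
        raise ValueError("duration must fit inside the forecast")
    window = sum(forecast[:duration])
    best_start, best_total = 0, window
    # Slide the window one hour at a time, updating the running total.
    for start in range(1, len(forecast) - duration + 1):
        window += forecast[start + duration - 1] - forecast[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start


# Hypothetical forecast for the next ten hours.
hourly = [420, 410, 390, 300, 210, 180, 190, 260, 350, 430]
start = greenest_start(hourly, duration=3)
print(f"start the 3-hour training job at hour {start}")
```

With this forecast, the lowest-carbon three-hour window begins at hour 4 (210 + 180 + 190).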

Green data centers provide the foundation for sustainable cloud computing. These facilities focus on minimizing environmental impact at every level through:

- Renewable energy from on-site sources (solar, wind) or purchase agreements

- Advanced liquid cooling systems that cut energy usage

- Waste management and hardware recycling programs

- Energy-efficient equipment design

Additionally, companies can cut emissions by picking cloud regions that produce less carbon. For example, Google shows carbon data for all its regions. It offers a Google Cloud Region Picker tool to balance emissions cuts with pricing and network speed.
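The trade-off between emissions, price, and latency can be expressed as a simple weighted score. The sketch below is only an illustration of that idea and does not reproduce how any provider's region picker works. The region names, figures, and default weights are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Region:
    name: str
    carbon: float   # grid carbon intensity, gCO2e/kWh
    price: float    # relative price index
    latency: float  # round-trip latency to users, ms


def pick_region(regions: Sequence[Region], w_carbon: float = 0.5,
                w_price: float = 0.3, w_latency: float = 0.2) -> Region:
    """Return the region with the lowest weighted, min-max normalized score."""

    def norm(value: float, values: List[float]) -> float:
        lo, hi = min(values), max(values)
        return 0.0 if hi == lo else (value - lo) / (hi - lo)

    carbons = [r.carbon for r in regions]
    prices = [r.price for r in regions]
    latencies = [r.latency for r in regions]

    def score(r: Region) -> float:
        return (w_carbon * norm(r.carbon, carbons)
                + w_price * norm(r.price, prices)
                + w_latency * norm(r.latency, latencies))

    return min(regions, key=score)


# Hypothetical regions and figures.
regions = [
    Region("region-a", carbon=100, price=1.2, latency=80),
    Region("region-b", carbon=400, price=1.0, latency=20),
    Region("region-c", carbon=250, price=1.1, latency=40),
]
print(pick_region(regions).name)
```

Shifting the weights changes the answer: with all weight on carbon the cleanest region wins, while a latency-only weighting favors the closest one.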

3. Monitoring and Optimizing Carbon Footprint Through Cloud Observability Tooling

Cloud computing architecture requires proper tracking of carbon footprints. Several cloud providers offer advanced solutions for this purpose:

- Google Cloud Carbon Footprint: Allows tracking of emissions by project, product, and region. Emission data can also be exported to BigQuery for deeper analysis.

- AWS Customer Carbon Footprint Tool: A dashboard that estimates the carbon emissions associated with AWS workloads.

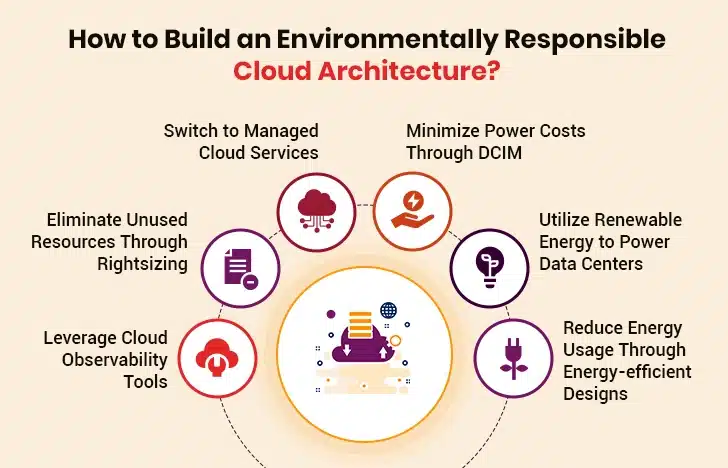

In addition to using monitoring tools, businesses can use the following methods to boost sustainability:

- Eliminating idle and oversized resources through rightsizing and consolidation

- Leveraging managed cloud services to optimize resource usage

- Using automated Data Center Infrastructure Management (DCIM) systems to cut power costs while reducing carbon emissions
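Rightsizing usually starts with finding instances that are barely used. Here is a minimal sketch that flags instances whose average CPU utilization falls below a threshold. The instance names, samples, and the 10% threshold are assumptions; real decisions would also consider memory, network, and peak load.

```python
from statistics import mean
from typing import Dict, List


def rightsizing_candidates(cpu_percent: Dict[str, List[float]],
                           threshold: float = 10.0) -> List[str]:
    """Names of instances whose average CPU utilization is below `threshold`."""
    return sorted(
        name for name, samples in cpu_percent.items()
        if samples and mean(samples) < threshold
    )


# Hypothetical utilization samples (percent CPU) per instance.
fleet = {
    "web-1": [55.0, 61.0, 58.0],
    "batch-7": [3.0, 2.0, 4.0],
    "cache-2": [9.0, 8.0, 12.0],
}
print(rightsizing_candidates(fleet))
```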

Interoperability and Multi-Cloud Native Design

Relying on just one cloud provider can lead to business and technical risks. Multi-cloud architecture has, therefore, become critical for organizations that want flexibility and resilience.

1. Avoiding Vendor Lock-in Via Open Standards

Companies experience vendor lock-in when they rely too heavily on one cloud provider. Vendor lock-in creates several risks: a drop in service quality, offerings that no longer meet needs, or unexpected spikes in prices.

Open standards and open-source platforms reduce these risks. Kubernetes is the most widely adopted open-source platform for container orchestration. It automates deployment, scaling, and operation of containerized applications. This lets teams run workloads consistently across environments and move them between environments without major rewrites.

OpenStack provides similar benefits. As an open-source platform delivering infrastructure-as-a-service, it manages pools of compute, storage, and networking resources throughout data centers. This standardization lets you spread workloads across providers without getting tied to proprietary systems.

2. Unified APIs and Service Meshes for Seamless Integration

Unified APIs are the building blocks of cloud computing that make true interoperability possible. They create abstraction layers that hide the complexities of different cloud platforms. The result? Teams can build applications that run across multiple environments without the need for separate code for each provider.
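A small sketch shows what such an abstraction layer looks like in code. Application logic depends only on an `ObjectStore` interface, and each provider gets its own adapter behind it. All names here are hypothetical, and `InMemoryStore` is a stand-in for a real adapter, which would wrap that provider's SDK.

```python
from abc import ABC, abstractmethod
from typing import Dict


class ObjectStore(ABC):
    """Provider-neutral interface the application codes against."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in adapter; a real one would call a specific provider's SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, report_id: str, body: bytes) -> str:
    """Application logic: identical no matter which provider backs `store`."""
    key = f"reports/{report_id}"
    store.put(key, body)
    return key


for provider in ("cloud-a", "cloud-b"):
    store = InMemoryStore(provider)
    key = archive_report(store, "q1", b"quarterly numbers")
    print(provider, key, store.get(key))
```

The design choice is that `archive_report` never imports a provider SDK, so switching or adding a provider means writing one new adapter rather than touching application code.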

Service meshes take this integration further. A service mesh is a dedicated infrastructure layer that manages how services in a distributed application communicate with each other, which reduces the complexity of inter-service interactions. Developers can focus on high-value work while the mesh applies consistent security, monitoring, and traffic management across on-premises, hybrid, and multi-cloud environments.

Organizations using these technologies gain many benefits:

- Simpler operations through central management

- Better reliability through distributed systems

- More flexibility to pick the best services from different providers

- Lower costs by using competitive pricing across vendors

3. Role of Cross-Cloud Orchestration Platforms

Cross-cloud orchestration platforms have changed how teams manage multi-cloud environments. Tools like Terraform automate the provisioning and management of cloud resources (e.g., servers, databases, storage) across multiple providers from declarative configuration files. This removes much of the need to configure each cloud service manually.
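Declarative provisioning tools generally work by comparing the desired state with what actually exists and then planning the difference. The sketch below illustrates that general pattern only; it is not Terraform's implementation, and the resource names and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan(desired: Dict[str, Resource],
         actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Compute the actions needed to move `actual` toward `desired`."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
        # Identical resources need no action.
    return actions


# Hypothetical desired configuration and currently deployed state.
desired = {"db": {"size": "large"}, "web": {"replicas": 3}}
actual = {"web": {"replicas": 2}, "cache": {"size": "small"}}

for action, name in plan(desired, actual):
    print(f"{action:7} {name}")
```

Because the plan is derived from state rather than from a script of steps, running it again after the changes are applied produces an empty plan.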

Crossplane takes a related approach, managing cloud infrastructure through Kubernetes-native APIs. With this open-source tool, organizations can deploy and manage resources across providers from a Kubernetes control plane. This makes it easier to mix and match cloud services. It also reduces vendor lock-in risks.

As hybrid and multi-cloud setups become the norm, these technologies collectively build the foundation for interoperable cloud computing. This means teams can pick best-of-breed services while maintaining coherence and limiting the pitfalls of distributed architectures.

Future-Forward Components of Cloud Computing

Architecture in cloud computing has evolved dramatically. Today, three distinct yet connected components form the backbone of tomorrow’s computing environments. Let’s look at what makes modern cloud systems different from their predecessors.

1. Intelligent Orchestration Layers (AIOps, FinOps, GreenOps)

Remember when cloud management meant manually tweaking settings and hoping for the best? Those days are now gone. Intelligent orchestration layers represent a shift in how we manage, optimize, and monitor cloud resources.

AIOps uses machine learning and analytics on operational data to spot anomalies and predict issues before they hurt performance. This proactive approach cuts down problem-solving time. It also boosts reliability in multi-cloud environments.
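Anomaly detection on operational data can be as simple as comparing each new reading with recent history. The sketch below flags points that sit more than a set number of standard deviations from a rolling window. Production AIOps tools use far richer models; the window size, threshold, and latency values here are assumptions.

```python
from statistics import mean, stdev
from typing import List, Sequence


def anomalies(series: Sequence[float], window: int = 5,
              threshold: float = 3.0) -> List[int]:
    """Indices whose value deviates from the preceding `window` points
    by more than `threshold` standard deviations."""
    flagged: List[int] = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if series[i] != mu:
                flagged.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical request latencies in milliseconds.
latency_ms = [100, 102, 99, 101, 100, 103, 98, 250, 101, 100]
print(anomalies(latency_ms))
```

Here only the 250 ms spike at index 7 is flagged; normal jitter around 100 ms stays under the threshold.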

FinOps and GreenOps work together as complementary frameworks to optimize different parts of cloud operations. FinOps maximizes financial efficiency while GreenOps focuses on reducing environmental impact through better resource use.

Proponents argue that approaches like GreenOps motivate cloud architects to design efficient systems more effectively than cost savings alone. This shift matters a lot for organizations trying to balance daily operations with their ESG goals.

2. Secure-By-Design Frameworks

Smart cloud system architectures treat security as a fundamental design requirement, not just another feature to bolt on later. Two approaches stand out in modern cloud security: confidential computing and zero trust architecture.

Confidential computing protects data while it’s being used. Modern processors with trusted execution environments (TEEs) run workloads in encrypted, isolated memory, so even users with high-level privileges, such as cloud administrators, can’t read the sensitive information being processed. This significantly reduces the attack surface.

Zero trust architecture works alongside confidential computing by assuming that nothing inside or outside the network can be trusted automatically. It verifies every single access request regardless of where it comes from. Used together, the two approaches create minimal, clearly defined trust relationships that substantially improve security.
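The "verify every request" principle can be sketched as a check that runs on each call, with no shortcut for callers that are already inside the network. The example below verifies an HMAC-signed token and then consults an explicit allow-list. The secret, identities, and policy table are hypothetical; real deployments rely on an identity provider, short-lived credentials, and signals such as device posture.

```python
import hashlib
import hmac

SECRET = b"demo-only-secret"  # hypothetical; never hard-code real secrets

# Explicit allow-list: (identity, resource) -> permitted actions.
POLICY = {
    ("alice", "reports"): {"read"},
    ("deploy-bot", "cluster"): {"read", "write"},
}


def sign(identity: str) -> str:
    """Issue a token that binds to the identity (stand-in for a real issuer)."""
    return hmac.new(SECRET, identity.encode(), hashlib.sha256).hexdigest()


def authorize(identity: str, token: str, resource: str, action: str) -> bool:
    """Evaluate one request; nothing is trusted based on network location."""
    # Constant-time comparison avoids leaking how much of the token matched.
    if not hmac.compare_digest(sign(identity), token):
        return False
    # Default deny: anything not explicitly allowed is rejected.
    return action in POLICY.get((identity, resource), set())


token = sign("alice")
print(authorize("alice", token, "reports", "read"))   # allowed by policy
print(authorize("alice", token, "reports", "write"))  # authenticated, not permitted
print(authorize("alice", "forged", "reports", "read"))  # fails verification
```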

3. Event-Driven Microservices Over Monolithic Setups

Cloud architecture is moving away from monolithic designs toward event-driven microservices. In a monolith, components share data within the same codebase. In a microservices design, each service handles a specific business function and communicates with the others through APIs.

Event-driven architectures (EDA) mark another step forward. In EDA, components talk primarily through events, creating systems where:

- Components stay loosely coupled with minimal change impact

- Each component scales on its own, improving resource usage

- One component’s failure doesn’t take down the whole system
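These properties can be seen in a minimal in-process sketch. Publishers and subscribers know only the event name, and a failing subscriber does not stop the others. In a real system, the bus would be a message broker or a managed event service; the event name and handlers here are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.failures: List[str] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        for handler in list(self._handlers.get(event_type, [])):
            try:
                handler(payload)
            except Exception as exc:
                # One consumer failing must not stop the others.
                self.failures.append(f"{handler.__name__}: {exc}")


shipped: List[str] = []


def bill_order(event: dict) -> None:
    raise RuntimeError("billing service unavailable")


def ship_order(event: dict) -> None:
    shipped.append(event["order_id"])


bus = EventBus()
bus.subscribe("order.placed", bill_order)
bus.subscribe("order.placed", ship_order)
bus.publish("order.placed", {"order_id": "o-1"})

print(shipped)       # shipping ran even though billing failed
print(bus.failures)  # the failure is recorded for retry or alerting
```

Adding a new consumer, such as an analytics service, means one more `subscribe` call; the publisher does not change.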

The decoupled nature of event-driven microservices also makes them a good fit for sustainable cloud computing. They can use energy more efficiently than monolithic approaches because each service scales independently based on need, so there is no need to scale the entire application.

These three components—intelligent orchestration layers, secure-by-design frameworks, and event-driven microservices—change how organizations design, deploy, and manage resources for peak efficiency, security, and flexibility in the cloud.

Strategic Considerations for IT Leaders

IT leaders stand at a crossroads. Cloud architecture requires them to think beyond technical specifications and features. To get the most from cloud investments, they need to balance innovation with sustainable practices and cross-team collaboration.

1. Questions to Ask While Rearchitecting Cloud Systems

IT executives who plan changes to cloud system architectures should consider several key questions:

- How will our architecture support both current and future AI workloads?

- What metrics will we use to measure carbon efficiency and performance?

- How can we design the cloud for interoperability while maximizing provider-specific advantages?

- How does our architecture enable (and not hinder) innovation?

- How does our new cloud strategy align with broader organizational sustainability goals?

2. ESG Scorecards for IT

Environmental, Social, and Governance (ESG) scorecards have become vital aspects of cloud implementations. Progressive IT leaders use comprehensive scorecards that track:

- Carbon intensity per compute unit

- Resource utilization efficiency

- E-waste reduction through hardware lifecycle management

- Alignment with organizational diversity and inclusion initiatives

- Compliance with emerging regulatory frameworks

These scorecards turn abstract sustainability goals into concrete, measurable metrics that show IT’s contribution to organizational ESG objectives. Regular reporting creates accountability and spots areas for improvement in sustainable cloud computing.
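Two of the scorecard metrics above reduce to simple ratios. The sketch below computes carbon intensity per compute unit and resource utilization from monthly totals. Treating a vCPU-hour as the "compute unit" is an assumption, and the figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyUsage:
    kg_co2e: float                 # reported emissions for the month
    vcpu_hours_used: float         # compute actually consumed
    vcpu_hours_provisioned: float  # compute paid for


def carbon_intensity_g(usage: MonthlyUsage) -> float:
    """Grams of CO2e per vCPU-hour consumed."""
    return 1000 * usage.kg_co2e / usage.vcpu_hours_used


def utilization(usage: MonthlyUsage) -> float:
    """Share of provisioned compute that was actually used (0 to 1)."""
    return usage.vcpu_hours_used / usage.vcpu_hours_provisioned


# Hypothetical monthly totals.
march = MonthlyUsage(kg_co2e=120.0, vcpu_hours_used=48_000.0,
                     vcpu_hours_provisioned=80_000.0)

print(f"{carbon_intensity_g(march):.2f} gCO2e per vCPU-hour")
print(f"{utilization(march):.0%} utilization")
```

Tracking these two numbers month over month shows whether efficiency work is lowering emissions per unit of useful compute, not just total spend.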

3. Cooperation Among Teams: DevSecOps Meets MLOps Meets GreenOps

Managing a multi-cloud architecture effectively requires integrating different operational disciplines. Teams that once operated independently now need to work in close collaboration.

DevSecOps teams bring in security-first development practices. MLOps specialists bring model training and deployment expertise, while GreenOps professionals keep environmental impact central to decision-making. This integrated approach removes organizational silos that often slow down innovation.

By thinking strategically about these aspects, IT leaders can build interoperable cloud systems that balance performance, sustainability, and security while supporting their organization’s broader goals.

Conclusion: Architecting for What’s Next

Cloud architecture sits at a crucial turning point. Today, AI workloads, sustainability goals, and the need for interoperable systems are reshaping modern cloud computing environments. Smart organizations must tackle these priorities together rather than treating them as separate boxes to check.

Building a cloud architecture in 2025 calls for a holistic approach where technical decisions support broader organizational goals. IT leaders who bring together previously isolated domains (DevSecOps, MLOps, and GreenOps) while focusing on strategic questions will create cloud environments that drive innovation, minimize environmental impact, and maximize agility. These balanced foundations will likely determine which companies lead their industries in the next decade and beyond.