What separates billion-dollar AI companies from those burning cash in failed implementations? They’ve cracked the code for building production-ready MLOps pipelines. Azure provides the foundation for MLOps, but success requires more than cloud resources. It demands a strategic approach to automation, monitoring, and governance that many organizations completely overlook in their rush to deploy. In this detailed piece, we will show you the tools you need, the best ways to work, and the common problems you might face. By the end, you’ll know how to build AI/ML systems that work reliably for your business every single day.

Table of Contents

MLOps: The CTO’s Secret Weapon for Enterprise Success

Key Components of MLOps on Azure

Best Practices for Building a Robust MLOps Pipeline on Azure

Building a Robust MLOps Pipeline on Azure: Common Challenges and Solutions

MLOps: The CTO’s Secret Weapon for Enterprise Success

MLOps helps companies move machine learning ideas from testing into real-world applications. Without MLOps, ML projects often stay stuck in the testing phase and never reach customers. MLOps helps ML models work well, scale easily, and deliver results with fewer errors. It also brings several key benefits to businesses, including better control, faster results, and more stable performance. Most importantly, it reduces the risk of failures, so AI/ML systems run with fewer disruptions. The chief technology officer plays a key role in making sure MLOps fits into the company’s bigger digital transformation plans. By bringing together tech teams and business leaders, the CTO ensures ML projects support growth and innovation.

Key Components of MLOps on Azure

Azure offers helpful tools for creating strong MLOps pipelines. These components work together to handle data, train ML models, and keep everything running smoothly from start to finish. This way, your machine learning models keep improving without extra headaches.

I. Azure Machine Learning

Azure Machine Learning is a cloud platform that helps teams build, train, and deploy machine learning models quickly. The platform allows users to create pipelines that can be reused and repeated, making it easier to manage many experiments. It also allows teams to work together on the same projects without worrying about version control or file sharing issues. Azure Machine Learning provides monitoring tools that track how well the ML models perform after deployment. This makes it easier to spot problems and fix them quickly before they affect business operations.

| Pros | Cons |
|---|---|
| Supports full ML lifecycle | Can be complex for beginners |
| Handles technical setup automatically for you | Learning curve is steep for beginners |
| Integrates with CI/CD pipelines | Some features need coding knowledge |
| Supports team collaboration on shared projects | Risk of relying solely on Microsoft |
| Easy to use with a simple interface | UI can be confusing at first |

II. MLflow

MLflow is a tool that helps track and manage ML experiments. It records parameters, code versions, and results for each model run, making it easier to compare and reproduce experiments. MLflow also helps package models so they can be deployed in different environments. It is simple to use and supports many programming languages and ML libraries. MLflow works well with Azure Machine Learning and other platforms, helping users keep their ML projects organized and consistent.

| Pros | Cons |
|---|---|
| Easy to start using with minimal setup required | Needs manual integration with other services |
| Free and open-source with active community support | Limited support for large-scale workflows |
| Simple model deployment to various platforms | Limited collaboration features for large teams |
| Helps organize and compare different model versions | Basic user interface might feel outdated |
| Tracks experiments efficiently | Requires additional tools for full MLOps |
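To make the idea of experiment tracking concrete, here is a minimal, library-free sketch of the bookkeeping that a tracker such as MLflow automates. It is not MLflow's API: the `Run` and `ExperimentLog` classes, the run IDs, and the metric values are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run: what went in (params) and what came out (metrics)."""
    run_id: str
    code_version: str
    params: dict
    metrics: dict = field(default_factory=dict)


class ExperimentLog:
    """Keeps every run so results can be compared and reproduced later."""

    def __init__(self):
        self.runs = []

    def log_run(self, run):
        self.runs.append(run)

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value for `metric`, skipping runs without it."""
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            raise ValueError(f"no run recorded metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])


# Illustrative values only; a real project would log these from training code.
log = ExperimentLog()
log.log_run(Run("run-1", "a1b2c3", {"learning_rate": 0.1, "depth": 3}, {"accuracy": 0.81}))
log.log_run(Run("run-2", "a1b2c3", {"learning_rate": 0.05, "depth": 5}, {"accuracy": 0.86}))
best = log.best_run("accuracy")
print(best.run_id, best.params)
```

Because each run stores its parameters and code version next to its results, the best run can be reproduced later rather than guessed at.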

III. Kubeflow

Kubeflow is a free platform designed to help run ML workflows on Kubernetes. It organizes the steps of ML projects into pipelines that can be repeated and scaled easily. Kubeflow supports running training jobs, tuning models, and deploying them as services. It works well with cloud environments and allows teams to manage resources efficiently. The platform is flexible and supports many ML tools, but it requires some knowledge of Kubernetes to set up and maintain. Kubeflow helps make ML projects more organized and easier to manage at scale, especially when working with many models or large data.

| Pros | Cons |
|---|---|
| Free to use since it is open-source software | Requires deep technical knowledge to set up |
| Supports multiple programming languages and frameworks | Troubleshooting issues can be difficult |
| Provides good resource sharing across teams | Needs Kubernetes expertise to manage properly |
| Integrates with many ML tools | Requires more infrastructure effort |
| Scales easily with cloud resources | Documentation can be confusing for beginners |

IV. Azure Databricks

Azure Databricks combines data processing and machine learning capabilities in one powerful cloud platform that handles big data efficiently. Built on Apache Spark, Azure Databricks processes massive amounts of information much faster than traditional methods since it uses distributed computing across multiple servers. It also supports many machine learning libraries and tools that speed up model development and testing. The platform also provides collaboration features that let team members share code, results, and insights easily across different departments.

| Pros | Cons |
|---|---|
| Integrates with Azure ML and other tools | Setup can be complex for new users |
| Fast data processing with Apache Spark | Requires learning Apache Spark for best use |
| Scalable for big data projects | Can be costly for small projects |
| Automatically scales computing power based on needs | May have performance issues with certain data types |
| Automated resource management | Can be costly for small projects |

V. DVC (Data Version Control)

DVC is a tool that helps teams keep track of changes in data and machine learning models, just like how code is tracked in Git. It stores large files, like datasets and models, outside the main code repository, which keeps the project light and fast. DVC also manages the steps in a machine learning workflow, making sure that when data or code changes, only the needed parts are updated. This helps teams easily switch between versions, compare results, and share data without copying large files unnecessarily.

| Pros | Cons |
|---|---|
| Automates pipeline stages | Limited UI, mostly command-line |
| Works with existing development tools and workflows | Setup can be tricky for beginners |
| Prevents data loss and accidental overwrites | Requires additional cloud storage which costs money |
| Helps teams share large datasets efficiently | Learning curve is steep for non-technical users |
| Supports easy collaboration | Fewer built-in features for collaboration |
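The core idea behind keeping large files outside the repository can be sketched in a few lines: hash the data, commit only a small pointer file, and compare hashes to detect changes. The sketch below is an illustration of that idea under assumptions, not DVC's actual file format or commands. The `.pointer.json` suffix, the function names, and the choice of SHA-256 are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets never have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_pointer(data_path):
    """Write a small JSON pointer next to the data file and return its path."""
    data_path = Path(data_path)
    pointer = {
        "path": data_path.name,
        "sha256": file_digest(data_path),
        "size": data_path.stat().st_size,
    }
    pointer_path = data_path.parent / (data_path.name + ".pointer.json")
    pointer_path.write_text(json.dumps(pointer, indent=2))
    return pointer_path


def has_changed(data_path, pointer_path):
    """True when the data no longer matches the hash recorded in the pointer."""
    recorded = json.loads(Path(pointer_path).read_text())
    return recorded["sha256"] != file_digest(data_path)


# Demo in a throwaway directory: record a dataset, then modify it.
with tempfile.TemporaryDirectory() as workdir:
    dataset = Path(workdir) / "train.csv"
    dataset.write_text("id,label\n1,0\n2,1\n")
    pointer_file = write_pointer(dataset)
    unchanged = has_changed(dataset, pointer_file)
    dataset.write_text("id,label\n1,0\n2,1\n3,1\n")
    changed = has_changed(dataset, pointer_file)
    print(unchanged, changed)
```

Only the pointer file would go into Git. The dataset itself can live in cloud storage, and a changed hash signals which pipeline stages need to be rerun.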

VI. Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) runs containerized applications and machine learning workloads across multiple servers. As a managed service, it takes over much of the complex infrastructure management, so you can focus on building your machine learning models instead. It can scale your applications up or down based on current demand, which helps control costs. The platform also takes on much of the server maintenance, security updating, and health monitoring that would otherwise be manual work. AKS works well with the various ML frameworks and tools that data scientists use. It provides reliable hosting for your deployed models and helps keep them available even when individual servers fail.

“Azure Kubernetes Service (AKS) is a game-changer for deploying scalable ML models in production.” – Arpan Shah, Former Director of Azure AI, Microsoft

| Pros | Cons |
|---|---|
| Good for large, busy applications | Setup and configuration can be time-consuming initially |
| Supports rolling updates without downtime | Troubleshooting issues requires advanced technical knowledge |
| Provides reliability and high availability for deployed models | Can be expensive for small projects |
| Includes built-in security and networking features | Monitoring requires additional tools |
| Works with other Azure services | Requires learning Kubernetes concepts which can be difficult |

VII. Azure DevOps

Azure DevOps provides a complete set of tools for managing software development projects from start to finish, including machine learning pipelines. The platform helps teams plan their work, write code, test applications, and deploy finished products in one organized system. Azure DevOps connects with many third-party tools and other Microsoft services that development teams commonly use. It provides secure storage for your code and maintains a detailed history of all changes made by different team members.

| Pros | Cons |
|---|---|
| Provides strong security and access control features | Can be tricky to set up for new users |
| Supports automated testing and deployment processes | Requires some scripting knowledge |
| Offers detailed tracking and reporting capabilities | May have too many features that teams never actually use |
| Good for both small and big projects | Can be overwhelming for small simple projects |
| Enables continuous integration and delivery | Costs can grow with bigger teams |

Azure Cost Management and Optimization for Cloud Efficiency

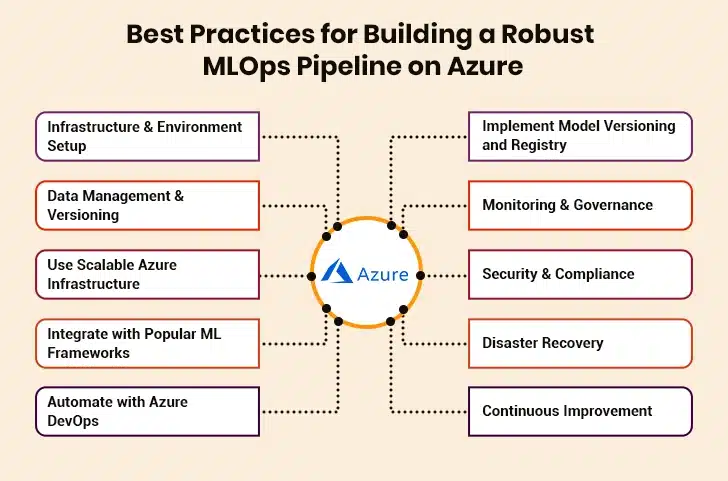

Best Practices for Building a Robust MLOps Pipeline on Azure

Creating a reliable MLOps pipeline on Azure takes careful planning. These proven methods help teams build systems that work smoothly from start to finish, making AI projects more successful.

1. Infrastructure & Environment Setup

Start by creating the right foundation for your machine learning pipeline on Azure. Set up separate environments for developing, testing, and running final ML models, and make sure all team members can access what they need. Choose computing power that matches your project’s requirements, and prepare storage for your data and models. A good setup from the beginning prevents problems later. Check that the pieces work together while leaving room to grow as needs change.

2. Data Management & Versioning

Managing your data well means keeping track of all the information you use for your ML projects. Every time you make changes to existing data, you need to record what changed and when it happened. You also need to set up automatic checks that look for missing information that might cause problems later.
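The automatic checks for missing information can start very small. The following is a minimal sketch, assuming records arrive as Python dictionaries. The function name `find_missing`, the field names, and the sample rows are hypothetical.

```python
def find_missing(rows, required_fields):
    """Return (row_index, field) pairs where a required value is absent or blank."""
    problems = []
    for index, row in enumerate(rows):
        for name in required_fields:
            value = row.get(name)
            if value is None or (isinstance(value, str) and value.strip() == ""):
                problems.append((index, name))
    return problems


# Illustrative records: row 1 lacks an age, row 2 has a blank country.
rows = [
    {"customer_id": "c-1", "age": 34, "country": "DE"},
    {"customer_id": "c-2", "age": None, "country": "FR"},
    {"customer_id": "c-3", "age": 51, "country": " "},
]
issues = find_missing(rows, ["customer_id", "age", "country"])
print(issues)
```

A check like this can run before every training job, so that a batch with gaps is stopped at the door instead of quietly degrading the model.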

3. Use Scalable Azure Infrastructure

With the scalable infrastructure of Azure, your system can handle different amounts of work without breaking down. When you have lots of data to process, Azure can automatically add more computing power to handle the extra load. During quiet periods, Azure reduces the resources and lowers your costs. This lets you only pay for what you actually use.
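One common way to decide how much computing power to add or remove is a proportional rule: scale the number of instances by the ratio of observed load to target load. The sketch below uses the rule documented for the Kubernetes Horizontal Pod Autoscaler. Azure's own autoscalers have their own settings, so treat the function name, the limits, and the numbers as assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: size the fleet so utilization lands near the target."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp to the configured floor and ceiling so costs stay bounded.
    return max(min_replicas, min(max_replicas, raw))


# Busy period: 4 instances at 90% utilization with a 60% target.
print(desired_replicas(4, 90, 60))
# Quiet period: 4 instances at 15% utilization with the same target.
print(desired_replicas(4, 15, 60))
```

The upper limit is what keeps a traffic spike from turning into a surprise bill, and the lower limit keeps at least one instance ready to serve.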

4. Integrate with Popular ML Frameworks

Connect your Azure setup with the common ML tools that data scientists already use. The integration should feel natural rather than forced. Ensure data flows smoothly between different parts of the system. This helps team members work efficiently without having to learn entirely new methods.

5. Automate with Azure DevOps

Automation with Azure DevOps means setting up systems that handle repetitive tasks without human intervention. When your team makes changes to ML code, the system automatically tests everything to make sure nothing breaks. If the tests pass, your changes get moved to production without manual work. This automation speeds up the process of getting improvements to users.
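For ML code, "the tests pass" usually needs to include a check on model quality, not only on the code. Below is a minimal sketch of such a promotion gate that a pipeline step could call. It is not an Azure DevOps API: the function name `should_promote`, the metric names, and the thresholds are hypothetical.

```python
def should_promote(tests_passed, candidate_metrics, production_metrics,
                   metric="accuracy", min_gain=0.0):
    """Promote only if tests pass and the candidate reaches baseline + min_gain."""
    if not tests_passed:
        return False, "tests failed"
    candidate = candidate_metrics[metric]
    baseline = production_metrics.get(metric)
    # With no production baseline yet (first deployment), passing tests is enough.
    if baseline is not None and candidate < baseline + min_gain:
        return False, f"{metric} {candidate:.3f} does not beat baseline {baseline:.3f}"
    return True, "promote"


# Illustrative numbers only.
print(should_promote(True, {"accuracy": 0.91}, {"accuracy": 0.89}))
print(should_promote(True, {"accuracy": 0.85}, {"accuracy": 0.89}))
print(should_promote(False, {"accuracy": 0.95}, {"accuracy": 0.89}))
```

Returning a reason along with the decision makes the pipeline log useful when a release is blocked.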

6. Implement Model Versioning and Registry

Model versioning means keeping track of the different versions of your ML models. Each time you improve your model, you save it with a new version number in a central storage place called a registry. This system helps you compare how well different versions perform and quickly switch back to an older version if a new one causes problems.
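The register, promote, and roll back cycle can be sketched with an in-memory stand-in for a registry. This is an illustration of the workflow, not the Azure Machine Learning or MLflow registry API. The class `ModelRegistry`, the artifact names, and the metric values are hypothetical.

```python
class ModelRegistry:
    """In-memory stand-in for a registry: numbered versions plus a 'live' pointer."""

    def __init__(self):
        self._versions = {}  # version number -> {"artifact": ..., "metrics": ...}
        self._history = []   # versions that have been live, oldest first

    def register(self, artifact, metrics):
        """Store a model under the next version number and return that number."""
        version = len(self._versions) + 1
        self._versions[version] = {"artifact": artifact, "metrics": metrics}
        return version

    def promote(self, version):
        """Make a registered version the live one."""
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        self._history.append(version)

    def rollback(self):
        """Return to the previously live version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    def metrics(self, version):
        return self._versions[version]["metrics"]

    @property
    def live(self):
        return self._history[-1] if self._history else None


registry = ModelRegistry()
v1 = registry.register("churn-model-v1.pkl", {"accuracy": 0.84})
v2 = registry.register("churn-model-v2.pkl", {"accuracy": 0.88})
registry.promote(v1)
registry.promote(v2)
live_before = registry.live
live_after = registry.rollback()  # v2 misbehaves in production, go back to v1
print(live_before, live_after)
```

Keeping the promotion history separate from the stored versions is what makes the rollback a pointer change rather than a redeployment from scratch.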

7. Monitoring & Governance

Monitoring means keeping tabs on how your ML models perform after they go live with real users. You need to check whether they are giving correct results and responding fast enough. Governance involves setting rules about who can change your ML models and ensuring everyone follows proper procedures. This combination keeps your systems running while maintaining quality standards across your organization.
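Both checks, correct results and fast enough responses, can be expressed as thresholds over a window of recent traffic. The following is a minimal sketch, assuming true labels eventually become available for live predictions. The function names, the 0.9 accuracy floor, and the 200 ms latency limit are hypothetical values, not recommendations.

```python
import math


def p95(values):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def health_report(predictions, labels, latencies_ms, min_accuracy=0.9, max_p95_ms=200):
    """Compare a window of live traffic against accuracy and latency thresholds."""
    if not predictions or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and the same length")
    if not latencies_ms:
        raise ValueError("latencies_ms must be non-empty")
    accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
    latency = p95(latencies_ms)
    alerts = []
    if accuracy < min_accuracy:
        alerts.append("accuracy below threshold")
    if latency > max_p95_ms:
        alerts.append("p95 latency above threshold")
    return {"accuracy": accuracy, "p95_ms": latency, "alerts": alerts}


# Illustrative window of ten requests: two wrong predictions, one slow response.
report = health_report(
    predictions=[1, 0, 1, 1, 0, 1, 0, 1, 1, 1],
    labels=[1, 0, 1, 0, 0, 1, 0, 1, 1, 0],
    latencies_ms=[120, 110, 130, 95, 105, 140, 115, 125, 100, 480],
)
print(report)
```

A percentile is used for latency instead of an average because a handful of very slow responses can hide behind a healthy-looking mean.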

8. Security & Compliance

Keep your ML pipeline safe by setting proper access controls. Allow only approved people to work with sensitive data and models. Also encrypt information when storing or moving it between systems. You must follow industry rules about data protection. Furthermore, regularly check for potential security weaknesses. This careful approach builds trust while preventing problems that could stop your work. Security should be part of every step, not added later.
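Access control boils down to a deny-by-default lookup from roles to permitted actions. On Azure this would normally be configured through role assignments rather than application code, so the sketch below only illustrates the principle. The role names, action names, and helper functions are hypothetical.

```python
# Hypothetical roles and actions for an ML pipeline.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_data", "train_model", "register_model"},
    "ml_engineer": {"read_data", "register_model", "deploy_model"},
    "analyst": {"read_predictions"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


def require(role, action):
    """Raise instead of silently continuing when a caller lacks permission."""
    if not is_allowed(role, action):
        raise PermissionError(f"role {role!r} may not perform {action!r}")


print(is_allowed("ml_engineer", "deploy_model"))
print(is_allowed("analyst", "deploy_model"))
```

Listing what each role may do, instead of what it may not, means a newly added action stays off limits until someone grants it deliberately.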

9. Disaster Recovery

Prepare for unexpected problems before they happen by setting up backups for important data and models. Create plans to restore operations if something fails. You may also distribute your work across multiple servers to avoid single points of failure. The system should keep running even if one part has issues. Furthermore, test recovery procedures regularly to ensure that they work. This preparation means your pipeline stays reliable when you need it most.

10. Continuous Improvement

Regularly check how your pipeline performs and look for ways to improve it. Update models as new data becomes available and refine processes based on what you learn from past projects. You may also encourage team members to share ideas for enhancements. Small and frequent improvements work better than rare big changes. Furthermore, track metrics that show what’s working well and what needs attention. This ongoing care keeps your pipeline useful as needs evolve over time.

Building a Robust MLOps Pipeline on Azure: Common Challenges and Solutions

Building MLOps pipelines can be tricky. The challenges and solutions below help you avoid common mistakes and build pipelines that keep ML models working well.

I. Managing Complex Data

When working with machine learning projects on Azure, handling data becomes quite tricky. Different teams often store their data in various formats and locations across the cloud platform. The information also comes from multiple sources and keeps changing over time. This creates confusion about which version of data to use for training ML models. Furthermore, teams often struggle to find the right data quickly when they need it.

Solution: The best way to handle this mess is by setting up a clear data management system. Azure provides tools that help organize all data in one central place. Teams can also create rules about how data should be stored and labeled. Remember, setting up automatic checks helps catch data problems early before they cause bigger issues.

II. Deploying Models Smoothly

Getting machine learning models from testing environments to real-world use on Azure often hits roadblocks. The process involves many steps that can break easily if not handled properly. ML models that work perfectly during development sometimes fail when moved to production systems. Moreover, different environments have different settings and requirements, which creates compatibility issues. Testing thoroughly also becomes difficult since there are so many moving parts involved.

Solution: Creating automated deployment pipelines solves most of these headaches. Azure DevOps tools can handle the entire process without human intervention. Furthermore, setting up proper testing stages ensures models work correctly before going live. Using containers helps maintain consistency across different environments.

III. Treating MLOps Like Legacy DevOps

Some enterprises try to use old software development methods for machine learning projects on Azure, which causes major headaches. Traditional software development focuses on code, but machine learning also involves data and models that behave differently. Furthermore, regular software testing methods do not work well for checking model predictions. As a result, teams end up frustrated since their usual processes fail to handle the unique challenges of machine learning workflows.

Solution: MLOps requires specialized approaches that handle data, models, and code together. Azure ML provides tools for model versioning, data validation, and automated retraining. Moreover, creating separate pipelines for data processing, model training, and deployment ensures better results than forcing traditional methods.

IV. Lack of Cross-Functional Collaboration

Machine learning projects on Azure often fail because different teams work in isolation. For instance, data scientists build models without understanding real business needs or the technical constraints engineers face. Engineers deploy systems without knowing how models actually work or what they need to perform well. Similarly, business teams set unrealistic expectations because they do not understand technical limitations. This disconnect leads to models that work in the lab but fail in real business situations.

Solution: Creating diverse teams with data scientists, engineers, and business leaders working together from the start prevents common collaboration challenges. Azure DevOps boards also help track progress and share updates across all team members. Furthermore, regular meetings ensure everyone stays aligned on goals and requirements.

V. Overcomplicating Tech Stack in the Initial Stage

Many teams get excited about new technologies and try to use too many advanced tools when starting their first MLOps project on Azure. They choose complex solutions before understanding their actual needs, which creates unnecessary confusion and delays. Learning multiple new technologies at once overwhelms team members and slows down progress significantly. Not to mention, simple problems take much longer to solve because of the added complexity that brings no real benefits.

Solution: Start with basic Azure ML services and add complexity gradually as needs become clear. Focus on getting one complete pipeline working first before adding advanced features. This approach helps teams learn effectively. It also builds confidence before tackling more complex requirements.

Drive Business Impact with Damco’s Expertise

Building a strong MLOps pipeline on Azure requires careful planning and the right combination of tools working together. In this post, we’ve explored various Azure tools that help manage machine learning projects from start to finish. We have also covered important best practices to keep your ML projects organized and running efficiently. The common challenges we discussed show that creating these pipelines takes time and effort, but the benefits make it worthwhile for most businesses.

Remember that choosing the right tools depends on your specific needs and budget constraints. Start with simple setups and gradually add more features as your team becomes more comfortable with the process. With patience and consistent effort, your organization can build reliable machine learning systems that deliver real value to your customers and business operations. In case you need expert help, you may seek consultation from a reliable AI/ML expert like Damco that has delivered 1000+ products, applications, and solutions.